Is Facebook "killing people"? Only it can know how many

Justin Hendrix / Jul 17, 2021

It must have been a rough week in Menlo Park. It started with the publication of An Ugly Truth, a book by New York Times journalists Sheera Frenkel and Cecilia Kang that chronicles years of scandals, obfuscations and in some cases outright lies by Facebook and its senior executives about its role in events ranging from Russian election interference to the genocide in Myanmar to the January 6 insurrection at the US Capitol.

The book, as its title should suggest, paints a story arc for Facebook that can only lead to one conclusion. “Throughout Facebook’s seventeen-year history, the social network’s massive gains have repeatedly come at the expense of consumer privacy and safety and the integrity of democratic systems,” write Frenkel and Kang. “And the platform is built upon a fundamental, possibly irreconcilable dichotomy: its purported mission to advance society by connecting people while also profiting off them. It is Facebook’s dilemma and its ugly truth.”

As reviews and headlines related to the new revelations in the book dominated coverage of the company, Facebook’s week was about to get worse.

On Thursday, U.S. Surgeon General Vivek Murthy took the stage to announce a warning about health misinformation. “As Surgeon General, my job is to help people stay safe and healthy, and without limiting the spread of health misinformation, American lives are at risk,” he said. His advisory included multiple recommendations to the social media companies, asserting that “product features built into technology platforms have contributed to the spread of misinformation” and that the platforms “reward engagement rather than accuracy”.

The warning from the Surgeon General prompted more negative headlines and commentary for Facebook. “Facebook, Twitter and other social media companies need to be treated like Big Tobacco,” wrote Joan Donovan and Jennifer Nilsen, researchers at Harvard Kennedy School’s Shorenstein Center in an opinion for NBC News.

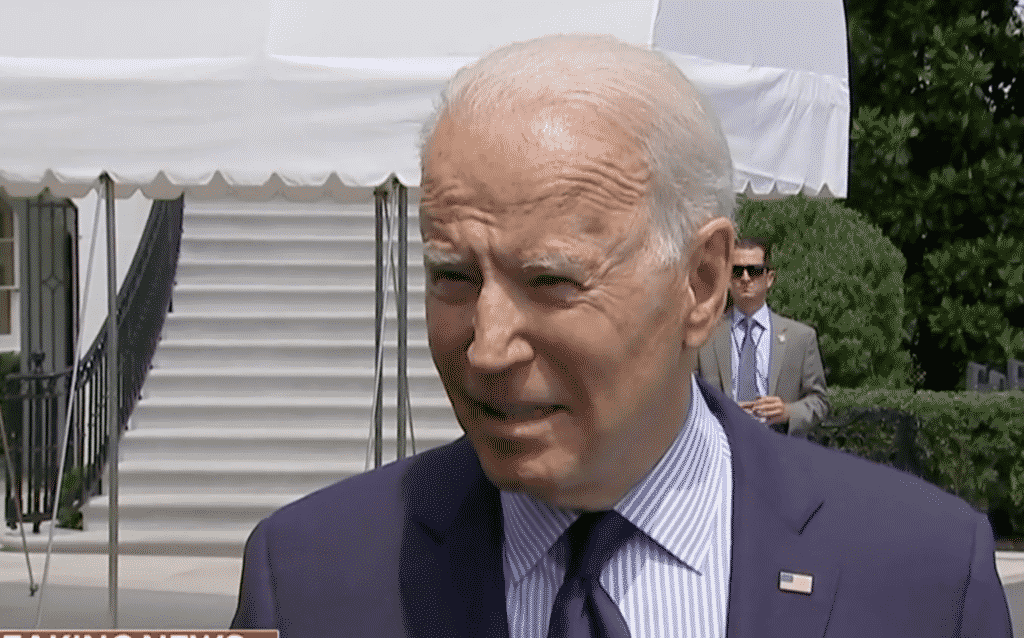

But what sent the company reeling is what happened Friday. With the blades of Marine One whirring behind him, ready to whisk him away to Camp David, President Joe Biden was asked what his message was to social media platforms with regard to COVID-19 disinformation.

“They’re killing people,” the President said. “Look, the only pandemic we have is among the unvaccinated, and that — and they’re killing people.”

In New York, a communications manager for Facebook- a former aide to Andrew Cuomo- quickly put together a statement:

“We will not be distracted by accusations which are not supported by the facts. The fact is that more than 2 billion people have viewed authoritative information about COVID-19 and vaccines on Facebook, which is more than any other place on the internet. More than 3.3 million Americans have also used our vaccine finder tool to find out where and how to get a vaccine. The facts show that Facebook is helping save lives. Period.”

Another Facebook executive anonymously told NBC media reporter Dylan Byers that “in private exchanges the Surgeon General has praised our work, including our efforts to inform people about COVID-19. They knew what they were doing. The White House is looking for scapegoats for missing their vaccine goals.”

But while Facebook may believe there is epistemic closure on whether it is contributing to human suffering or death- see that grumpy “Period” in the above statement- nothing could be further from the truth.

Certainly, Facebook is not the primary or the only source of COVID-19 misinformation. But the prevalence of false information- and the role of the affordances of the platform that convey and amplify it- is impossible to deny. In that way, it is no different from many other issues that appear to link Facebook and its platforms to a body count, from ethnic cleansing in Myanmar and Sri Lanka to groups linked to the WhatsApp lynchings in India to the Stop the Steal movement, about which Facebook’s own internal review concluded the company failed to recognize the “coordinated harm” that resulted from the use of its platform.

Despite this record, the company’s response fits the pattern that Frenkel and Kang describe across the arc of its history. Emphasize the denominator to obscure the numerator, and vice versa when it’s more advantageous. Point to big numbers for features where something positive is happening on the platform to deflect attention from something negative. Refer to the scale of the effort- technological or human- that is going into solving the problem, and what an enormous investment of money, engineering and management attention it requires.

Facebook’s confidence that it is, on balance, good for the world is always based on reference to a totality of ‘facts’ and ‘data’ to which it has nearly exclusive access. Independent researchers are very limited in their ability to access information across Facebook’s platforms, which leaves the outside world with no ability to judge the ultimate scale of problems such as COVID-19 mis- and disinformation, even as the evidence publicly available is damning. That means these questions cannot ultimately be reconciled.

This tension came to the fore in a Senate hearing in April. Senator Ben Sasse, R-NE, listened to testimony from social media’s critics and the testimony from social media executives. Senator Sasse noted that the answers the platforms were providing were simply “not reconcilable” with the positions of their critics. “You definitely aspire to skim the most destructive habits and practices off the top of digital addiction. But the business model is addiction, right? I mean, money is directly correlated to the amount of time people spend on the site,” Senator Sasse asked the representatives from Facebook, YouTube and Twitter.

The perfunctory denials and redirections that followed were no satisfactory answer to that question. Of course, only independent research and access to the data can break the impasse. On this subject, Facebook appears to be in conflict with itself. Some evidence suggests it would like to stifle access to information, while its stated policy is that it would like to provide more.

Another headline about Facebook this week that startled journalists and researchers who investigate what happens on the platform came from New York Times columnist Kevin Roose. Looking “Inside Facebook’s Data Wars,” he detailed the internal battle over data and transparency, in particular with regard to CrowdTangle, a tool that “allows users to analyze Facebook trends and measure post performance, to dig up information they considered unhelpful”. The story suggests the company is considering ways to limit access to the tool in order to better control the narrative.

This episode “gets to the heart of one of the central tensions confronting Facebook in the post-Trump era,” concludes Roose. “The company, blamed for everything from election interference to vaccine hesitancy, badly wants to rebuild trust with a skeptical public. But the more it shares about what happens on its platform, the more it risks exposing uncomfortable truths that could further damage its image.”

The official position of the company is that it wishes to provide more information, not less. It crows about the access it provided to a handful of selected researchers to study the 2020 election, for instance, results of which are not due until 2022. But to provide even more access, Facebook says it needs governments to act.

“As society grapples with how to address misinformation, harmful content, and rising polarization, Facebook research could provide insights that help design evidence-based solutions,” wrote Nick Clegg, the company’s Vice President of Global Affairs, in March. “But to do that, there needs to be a clear regulatory framework for data research that preserves individual privacy.”

There are already sturdy ideas for how governments can do this. Nate Persily, a Stanford Law professor, has written convincingly on the subject, as has Rebekah Tromble, Director of the Institute for Data, Democracy & Politics at the George Washington University, who has participated in and led a variety of discussions on approaches for research access to platform data. The European Union seems a hair closer to resolving these issues than the United States.

It is urgent business. As a group of researchers from more than a dozen universities noted in a paper published earlier this month, social media platforms on the scale of Facebook have perturbed the way our species gets information and interacts in ways we do not yet understand. We cannot be certain it will just work out for the best “without evidence-based policy and ethical stewardship.” They lay out an ambitious road map for how multiple disciplines, from “sociology, communication studies, science and technology studies, political science, and macroeconomics” to “law, public policy, systemic risk, and international relations”, must be brought to bear to help society justly determine how to govern the role of digital communications technologies and their effect on our collective behaviors.

What might this look like in practice, and how would it inform our assessment of the veracity of President Biden’s assertion that Facebook is “killing people”? Determining the role of the platform in the death of any one individual may be problematic, but observing a broader effect could very well be possible.

"We could understand the impact," said Brian Boland, former Vice President of Partnerships and Strategy at Facebook, on CNN's Reliable Sources Sunday. "There is this saying at Facebook, which is data wins arguments. You could understand if this is a massive problem or a smaller problem, if everyone was looking at the same data."

Former Facebook exec @BrianBoland, in his first TV interview since leaving the company, says Facebook isn't doing enough to understand what it has built. "It can impact communities large and small in ways that we don't understand," he says pic.twitter.com/pnXlL5PPOy

— Reliable Sources (@ReliableSources) July 18, 2021

With a Facebook-sized sample, social scientists, economists, epidemiologists and others could do the kind of statistical analyses necessary to compute the effects of phenomena such as COVID-19 misinformation on the population, and perhaps even calculate how many deaths can be partially or even fully attributed to it. We know such analyses are possible- recall the famous “emotional contagion” study that caused a stir in 2014, which looked at how changes to Facebook's news feed affected the emotional state of users, or more recent work that has observed a relationship between out-group animosity and engagement on Facebook and Twitter. Understanding the true scale of the effect could inform what to do about it.

Unless we can get the data, we’re left with the “irreconcilable dichotomy” of the status quo, and an endless future of frustration from politicians and Friday night denials from a trillion-dollar company that promises it has the evidence to prove it is, on balance, good for the world. That it is, on balance, enriching more lives than it is harming. Until there is resolution on these questions and a proportionate response from governments, there are more bad weeks ahead for Menlo Park- and for the rest of us. Period.
