Interoperability in AI Governance: A Work in Progress

Angela Onikepe / Jul 17, 2024

The views expressed here by the author are her own.

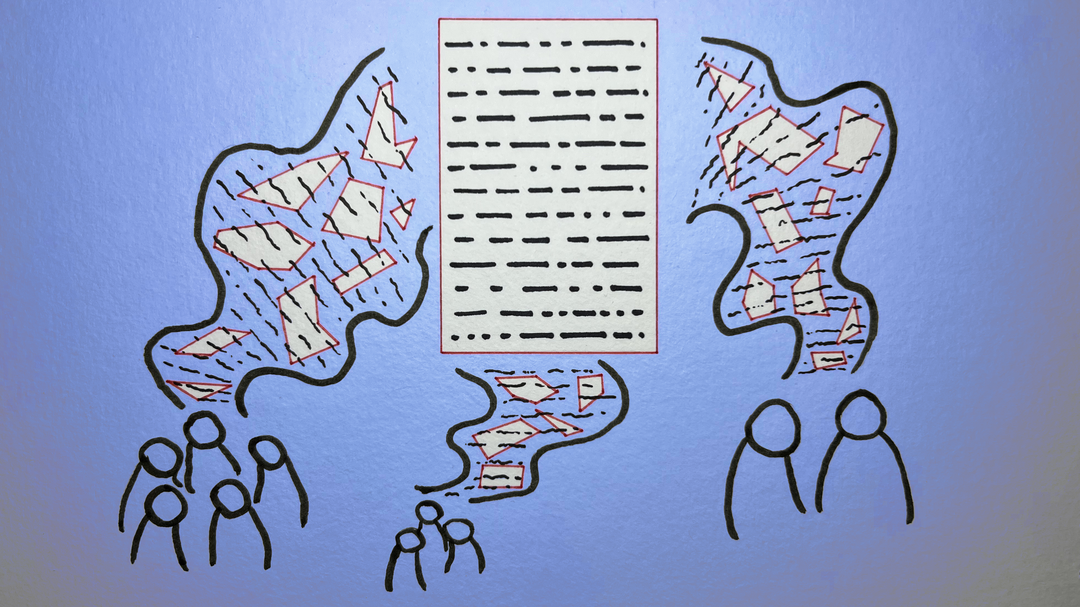

Yasmine Boudiaf & LOTI / Better Images of AI / Data Processing / CC-BY 4.0

In a rapidly evolving landscape, the impact of emerging technologies on society comes in waves, but the gripping intensity of recent advances and the integration of generative AI point to a unique momentum and level of urgency. Recognizing generative AI's transformative potential, as well as its risks, brings into focus complex challenges, both present and to come.

As the frenzy to develop and invest in artificial intelligence grows, so do calls for AI governance, and this has spurred policy innovation. We see serious efforts being put into regulations, policies, voluntary frameworks, ethical guidelines, principles, compacts, norms, consensus-gathering, and varied mechanisms at the multilateral, global, regional, national, and cultural levels. We also see different approaches to regulation: Switzerland's “tech neutral” approach, the EU AI Act's risk-based and human rights approach, the sectoral approaches of the US and Japan, China's dual innovation and national security approach, Brazil's development and implementation approach embodied in its proposed AI law, and African countries' implementation of AI strategies and continent-wide initiatives.

Even so, it is clear that AI governance today is not yet mature. Moreover, the AI regulatory/policy regime remains fragmented. Many have acknowledged the need for AI governance interoperability—that is, openness and coordination—not solely for mitigating risks but also for fostering innovation, competitiveness, development, standardization, and potentially trust.

Traditionally, the demand for ‘interoperability’ has been technical in nature, referring to the ability of separate digital technology platforms and systems to work together (i.e., to communicate or share data). The concept is now being applied to governance approaches. There is no global consensus on what AI governance interoperability means. However, various parties have put forward the establishment of trust, whether in the governance framework itself or in AI technology, as the main reason interoperability is needed.

With trust as the impetus, one proposed definition of AI governance interoperability is instructive as an illustration of how interoperability could materialize. It comes from the United Nations' multistakeholder expert Policy Network on AI (PNAI), which identifies “three key interlinked factors” that form AI governance interoperability: “(i) processes, comprising tools, measures, and mechanisms (ii) activities undertaken by multi-stakeholders and their interconnections and (iii) agreed ways to communicate and cooperate.”

Actors (states, entities, stakeholders) are grappling with how to carry out interoperability in AI governance frameworks, and questions abound. Interoperability between which elements: processes, risk frameworks, data, AI applications, regulations, or other related legal frameworks (privacy, data protection, etc.), to name a few? Taking the question a step further, interoperability at what level: international, regional, or between jurisdictions? Even within countries where governance initiatives are under way, the model of interoperability (between states, cantons, provinces, etc.) has yet to be decided. There are no definitive answers, but at this stage in the evolution of AI governance across the globe, we can observe activity and development at all levels.

Although countries and entities are at varying phases of AI governance, strides are being made towards interoperability. Here is a roundup of the most recent efforts, showcasing the willingness to engage and the commitment to cooperation in different spheres.

The UN’s role in fostering global AI governance interoperability

In its December 2023 Interim Report on “Governing AI for Humanity,” the UN Secretary-General's AI Advisory Body (Advisory Body) proposed recommendations for a global framework for AI governance. This framework would be embodied in an institution or a network of institutions (institution-network), built up from new and existing ones. The resulting framework would be anchored by seven governance functions, to be carried out by the institution-network, and five principles guiding its creation.

The five guiding principles are inclusive governance, governance in the public interest, alignment with data governance, universal and networked collaboration, and anchoring in international commitments. The institutional functions range from scientific assessment to the harmonization of standards, safety, and risk management frameworks, and to compliance and accountability. Most relevant here, one of the institutional functions proposed by the Advisory Body is reinforcing the interoperability of governance efforts across jurisdictions.

This reinforcement is to be grounded in universal values and international norms (e.g., human rights) and established in a universal setting, such as the one offered by the UN and its existing agencies and fora (e.g., the ITU and UNESCO). The Advisory Body is currently in the input round phase, gathering public consultation and feedback from governments, civil society, academia, companies, UN agencies, and individuals. It remains to be seen what form the described functions will ultimately take. The final report will be published ahead of the UN Summit of the Future in September 2024.

The Bletchley effect: Anchoring AI safety institutes in international cooperation

In May 2024, the European Union and ten countries (Australia, Canada, France, Germany, Italy, Japan, the Republic of Korea, the Republic of Singapore, the United Kingdom, and the United States of America) signed the Seoul Declaration for safe, innovative and inclusive AI. The Declaration signaled a commitment to cooperation, transparency, and interoperable AI governance frameworks by calling not only for the creation and expansion of AI safety institutes but also for an international network linking these institutes with other institutions and entities. This international network will specifically tackle “‘complementarity and interoperability’ between their technical work and approach to safety, to promote the safe, secure, and trustworthy development of AI.”

Jurisdictions such as Japan, Singapore, the United States, the European Union (EU), and Canada have established their own AI safety institutes. These are state-backed entities that seek to address governance challenges, human safety, economic equity, and misinformation, and to obtain new information about AI risks quickly. Although they differ in composition, focus, and depth of regulatory powers, the idea of such institutes, pioneered by the United Kingdom (which launched its own AI Safety Institute at the first AI Safety Summit, held at Bletchley Park in November 2023), is clearly taking root.

Each of these national AI safety institutes is taking a different approach. For example, the UK AI Safety Institute's role is to inform international and domestic policymaking, not to regulate or create policy. In contrast, the EU's AI Safety Institute has regulatory powers and will focus on evaluating ‘frontier’ AI models. Canada's AI Safety Institute will focus on standards, research, and commercialization, in line with Canada's goal of becoming a leader in AI development.

At the June 2024 G7 Summit in Italy, G7 leaders reaffirmed their commitment to greater coordination and interoperability in order to promote AI safety. This move has been described as “deepening cooperation between the U.S. AI Safety Institute and similar bodies in other G7 countries to advance international standards for AI development and deployment.” A recent expert workshop convened by the Oxford Martin AI Governance Initiative explored how AI Safety Institutes could promote interoperability and what role they could play internationally, suggesting joint AI safety testing, mutual recognition of certification between institutes, and aligned mandates (e.g., through a shared charter) to address priority safety topics. A key test of the G7's renewed commitment to cooperation will come when their AI Safety Institutes convene to define compulsory AI safety provisions. That has yet to happen.

The above efforts illustrate initiatives that are taking place at the international level. There is also a surge of AI governance interoperability activity taking place within regions and in countries.

AI governance interoperability in regional and national settings

In the Asia-Pacific region, countries have yet to pass robust AI regulations. Still, steps are being taken to ensure that countries cooperate on governance and ethics. Acknowledging the patchwork of AI governance approaches in the region, ASEAN countries (including Singapore, Thailand, Malaysia, Indonesia, and Vietnam) have endorsed a guide that seeks to “facilitate the alignment and interoperability of AI frameworks across ASEAN jurisdictions.” Intended as a living document, the guide will be continuously developed and serve as a practical resource for countries aiming to develop AI commercially, ethically, and responsibly. It rests on guiding principles that include safety and security, transparency, robustness, and explainability, and it promotes a series of best practices, including establishing an AI Ethics Advisory Board, stakeholder participation, building trust, and human involvement in AI-augmented decision-making.

In June 2024, African ministers adopted a landmark Continental Artificial Intelligence Strategy and African Digital Compact, linked to the African Union's (AU) Agenda 2063 and Digital Transformation Strategy (2020–2030). Both initiatives aim to drive development and inclusive growth. Among their key priorities, African ministers are focused on participation in the digital economy and on Africa's role in shaping global digital governance. The AU's mandate to organize a Continental African Artificial Intelligence Summit following the adoption of these two initiatives could signal these governments' intention to work on interoperability. The objective of the upcoming Summit is to “foster collaboration, knowledge exchange, and strategic planning among stakeholders across the continent.”

BRICS nations (previously Brazil, Russia, India, China, and South Africa, and expanded in January 2024 to include Egypt, Ethiopia, Iran, and the United Arab Emirates) have also been working on collectively developing AI policies and capabilities, and coordination of AI governance frameworks is on the agenda. In late 2023, the BRICS group announced the creation of an AI Study Group to accelerate cooperation and innovation. The study group will “fend off risks, and develop AI governance frameworks and standards with broad-based consensus, so as to make AI technologies more secure, reliable, controllable and equitable.” Still, AI governance interoperability will be challenging, due not only to divergent approaches to AI regulation but also to tensions between China and India.

In the US, with no prevailing federal statute, states have taken an active role in developing their own AI regulations. Some bills have become comprehensive laws (e.g., the Colorado AI Act, the first ‘AI-focused’ state law in the US, which will regulate AI developers and deployers), while others (e.g., in Connecticut) have failed to garner crucial support. On interoperability, US states have at various points worked toward a coordinated approach to AI regulation. The most notable initiative is the multi-state working group on AI policy led by the state of Colorado. Made up of more than 60 state legislators from around 30 states, the working group has the aim of “creat[ing] a forum to educate state lawmakers interested in this topic and to coordinate approaches across states to better allow for interoperability.”

Meanwhile, in June 2024, the Chinese AI Safety Network was launched to promote knowledge-sharing, dialogue, and interoperability among China's national AI safety initiatives. The network mirrors the G7 initiatives around national AI Safety Institutes described above. Furthermore, the Shanghai Declaration on Global AI Governance, unveiled at the World Artificial Intelligence Conference in early July 2024, outlines China's position and its call for collaboration. It remains to be seen how these parallel developments, the G7's and China's, will shape the evolving international tapestry of coordination and interoperability.

Many of these initiatives are still nascent, and numerous countries and regions remain in the information-gathering phase. Nevertheless, these recent developments reflect a deep awareness of the need for continued and reinforced efforts toward cooperation, coordination, and the jurisdictional interoperability of AI governance.
