Internet Trolls Should Not Dictate the Terms of Public Exposure to Hate

Adi Cohen, Benjamin T. Decker / May 18, 2022

Adi Cohen is COO and Benjamin T. Decker is founder and CEO of Memetica, a digital investigations firm.

On May 14, an 18-year-old white male killed ten people and wounded at least three others in a meticulously planned mass shooting at the Tops supermarket in Buffalo, New York. In advance of the shooting, which was livestreamed on Twitch, he arranged the release of a manifesto on Google Drive and posted a 589-page diary-style document to a Discord server in order to maximize the public impact of his crimes.

While he is not affiliated with any far-right organization, he was heavily influenced by the Christchurch shooter and by fringe message board culture: the manifesto features countless mentions of the Great Replacement and a myriad of anti-Semitic and accelerationist conspiracy theories.

As in prior far-right mass shootings, the shooter planned his media operation as intricately as his mass-casualty attack, with two goals: 1) to kill members of a Black community he perceived as the enemy, and 2) to inspire others to join a leaderless transnational movement and commit similar racist atrocities.

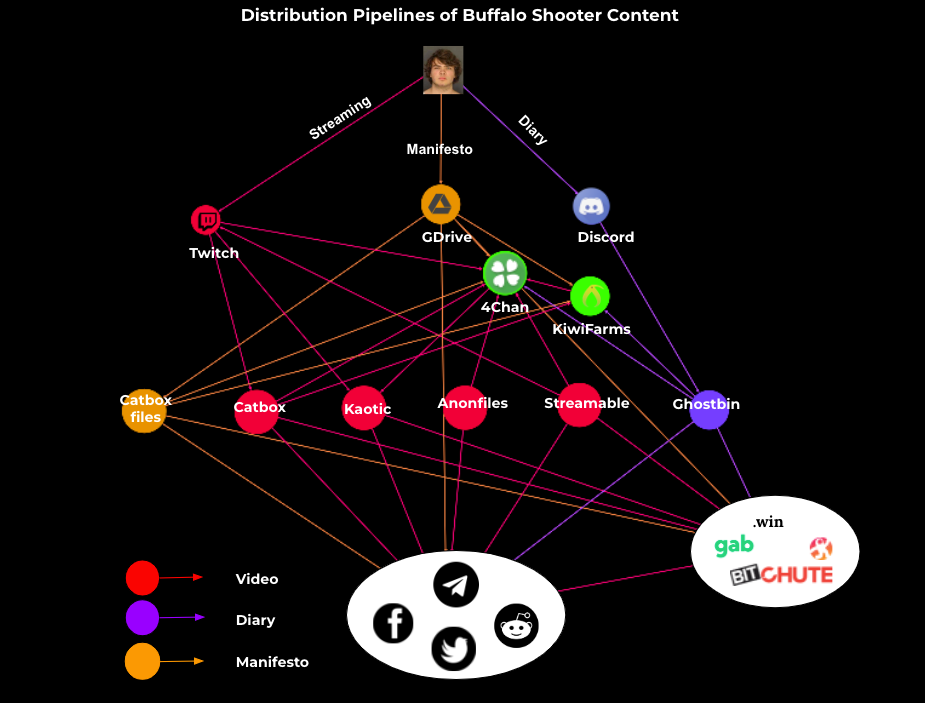

Hours after the shooting, the video taken by the shooter, his manifesto, and his “diary logs” were circulating online and gaining millions of views. Some platforms were quick to respond, but their efforts fell short of stopping the content’s circulation. The shooter’s strategy, which relied on collaboration within the 4chan community to surface and disseminate the content despite the major platforms’ efforts, proved successful.

Making the Ephemeral Eternal

The shooter’s Twitch livestream, which had approximately 22 viewers during the mass shooting, was quickly taken down. In a statement issued shortly after the attack, Twitch confirmed that it “removed the stream less than two minutes after the violence began…taking all possible action to stop the footage and related content from spreading on Twitch.”

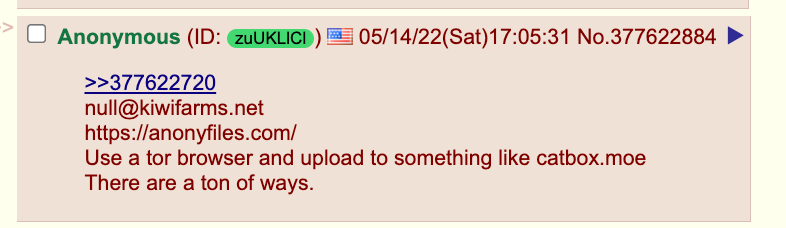

The rapid response may have limited the video’s distribution on Twitch, but while Twitch referred to the content as ephemeral, users on 4chan were already busy making it eternal. At least one of the 22 viewers happened to be a prolific 4chan user, who quickly posted the content to 4chan’s /pol/ (politically incorrect) message board. This message board, where the shooter was first radicalized according to his diary, became the primary distribution vector for the livestreamed video and the Google Drive manifesto. Within minutes of the attack, users were sharing advice on how to recover the footage and safely upload it online. The link to the manifesto was shared in the thread, along with screenshots from the Discord server that gave more context about the shooter. All of this activity took place while the shooter was in custody at the crime scene.

Excerpts from a 4chan /pol/ thread, May 14, 2022. Source: Memetica

In the following hours, multiple links were shared on 4chan and on another site, KiwiFarms, before they reached mainstream platforms like Twitter, Reddit, and Facebook, as well as other fringe platforms like Telegram, Gab, and Patriots.win. This pattern holds for most of the video links (shared through catbox.moe and streamable.com), the manifesto, and the Discord diary (shared through ghostbin).

Google sent a notification to Google Drive users at approximately 1:30 PM EST on May 15 (almost 24 hours after the manifesto first surfaced) flagging it as a violation of the terms of service; however, it took other platforms several additional hours to act on links to the Google Drive version of the manifesto. By that time, the link had been shared over 1,100 times on Twitter alone. Once the manifesto was removed from Google Drive, another copy became available through Catbox, and it has since been shared at least dozens of times on Twitter, Reddit, and Facebook, often by users posting deliberately broken links or routing through third-party sites to evade moderation.

Some of the files containing the gruesome footage were still accessible through Twitter and Facebook more than 48 hours after the attack. The most viral video, hosted on Streamable, was shared over 12,000 times and, according to The New York Times, had around 3 million views before it was taken down on May 15; another video remained accessible and was shared on Facebook and Twitter until late afternoon on May 16 (EST). As the initial wave of videos was taken down by Catbox and Streamable, 4chan /pol/ users encouraged each other to use VPNs to re-upload the video as many times as possible to ensure that it remained available online. Some links were also shared on YouTube, in the comment sections of videos from mainstream news organizations.

* The graph above does not include data from Facebook. However, according to CrowdTangle, one of the links to the shooter’s video was shared on that platform over 12,000 times before being removed.

While the Global Internet Forum to Counter Terrorism (GIFCT) has made some improvements in working with platforms to moderate far-right violent extremism since the 2019 Christchurch Call, the widespread distribution of the Buffalo shooter’s manifesto and livestream across both fringe and mainstream social media platforms highlights the dangerous gaps that remain in content moderation.

From the social media distribution model to the aesthetics of the content itself, the Buffalo shooting bears all the hallmarks of a Christchurch copycat killing, making it the third mass shooting in the United States directly inspired by the New Zealand shooter. In El Paso, Poway, and Buffalo, all three shooters made explicit references to their consumption of the Christchurch massacre video, which speaks volumes about the tangible real-world harms of its continued proliferation online. The Buffalo shooter’s 180-page manifesto and accompanying 589-page diary provide a detailed account of both his radicalization process and his step-by-step planning of the attack.

Like many other card-carrying members of toxic internet communities and aspiring influencers operating under the banner of hate, he understood the nuances of social media terms of service, which he studied and exploited. His propaganda distribution strategy relied heavily on anonymous file hosting platforms and on the digitally literate and active user base of 4chan /pol/, which proved effective at surfacing and circulating his content despite the moderation efforts of mainstream platforms.

A Content Moderation Nightmare

This latest incident highlights several potential challenges in moderating this content:

1. Coordinated dissemination in a decentralized environment: The effort to disseminate the shooter’s content was coordinated by ideologically aligned individuals, while attempts to take it down appear to have been made separately by each platform, which gives the coordinated effort the advantage. When Google took the manifesto down from its servers, users were already sharing a new link on other platforms. When Facebook blocked the links to the videos, users posted links to third-party websites instead (such as BitChute, where the links were available in the bio).

2. Virality-based moderation: On May 15, access was blocked to a streamable.com link to the video that had generated around 3 million views. However, a copy of the same video, uploaded to Kaotic.com on May 14, was shared freely on Facebook and Twitter until 48 hours after the attack. This suggests that platforms may have acted on the content’s virality rather than its existence. If that is the strategy, wide sharing of content from future attacks will be inevitable.

3. From ephemerality to eternity: The shooter’s choice of Twitch as a streaming platform proved effective. The ease with which he was able to go live and stream the act, even if only for a few minutes, was enough for viewers to record the content and upload it elsewhere. Twitch’s comment sums up the challenge it and other platforms face: content streamed through Twitch may no longer be available on its platform, but hours later it was available on multiple other platforms, meaning that communities online and offline will have to live with the consequences for a long time.

The direct links to the Christchurch shooting make it painfully evident that more coordination between platforms is critical in preventing the spread of such hateful content online. The shooter’s propaganda package was modeled directly on the Christchurch shooter, combining ephemerality and hate, to bait troll communities into amplifying his manicured narrative into the mainstream. And it worked.

The 589-page diary, an ostensible archive of his messages and daily routines documented on Discord from November 2021 to May 2022, sheds light on the various platforms and content that drove him to mass murder. He described his full radicalization journey: he gained an interest in survival and guns after playing Apocalypse Rising on Roblox, which led him to hunting and shooting and to a deeper interest in firearms on Reddit and 4chan. He was also an avid Discord user, participating in several servers populated by other fringe message board users. In multiple discussions on KiwiFarms and 4chan on Saturday evening, individuals posted screenshots purporting to show his Discord posts, which caused brief, moderate dismay within those discussions.

The shooter described entering the world of 4chan during the early stages of the COVID-19 pandemic, lurking and participating in discussions on the /k/ (weapons) message board before making his way to the /pol/ (politically incorrect) message board. He wrote that he frequently reread the Christchurch shooter’s manifesto and watched the video; the diary references the incident 30 times and the “Great Replacement” 16 times.

As the amplification of violent propaganda after the shooting shows, hate speech is platform-agnostic, sometimes bursting from the darkest corners of the internet into the most open public squares too quickly for any one company to intervene. On Discord, he openly discussed his intentions with explicit references to other far-right mass shooters, creating a collection of data signals that, in theory, could have been flagged by Trust & Safety teams at Discord; however, Discord’s content moderation system, which includes volunteer moderators alongside a Trust & Safety team, likely had no visibility into the chats.

New Systems Are Needed

The volunteer moderation model in particular is fundamentally problematic, in that the hierarchical gatekeepers of Discord servers hold an asymmetric degree of both technical control and content influence across subcommunity user bases. In this context, the platform architectures themselves hinder the Sisyphean labor of Trust and Safety teams, who are often forced into a never-ending game of whack-a-mole: shut down one server and a new one appears overnight.

While current content moderation policies are somewhat limited in scanning closed Discord servers because of user privacy, mechanisms that allow this information to surface in a timely manner, without compromising users’ privacy, might be essential to preventing more tragedies. For Discord, such a system could function as a content moderation black box that neither the public nor law enforcement can access directly, whose only outputs to the platform’s managers would be severe user threats alongside relevant user data.

Such systems have been proposed in the context of encrypted messaging. Any implementation would require buy-in from both civil society and law enforcement stakeholders with respect to individual civil liberties. Such a system, a triage network of sorts, should only exist if it can be structurally aligned with documents such as the Charter of Human Rights and Principles for the Internet, as well as relevant laws and constitutional protections. An entity similar to the GIFCT, or an evolution of the GIFCT itself, could facilitate the more robust cross-platform triage network and fusion center that does not yet appear to exist, where participating stakeholders could review flagged or concerning content and escalate it to all relevant parties.

Addressing the connection between this content and the promotion of real-world violence therefore requires a deeper understanding of the size and scope of the technical and logistical challenges we face. In this context, the anonymous file storage sites used to redistribute both the video and the manifesto created a virtually unstoppable redistribution cycle that can only be halted through more robust collaboration across the entire ISP ecosystem.
