Imagining 2025 and Beyond with Dr. Ruha Benjamin

Justin Hendrix / Dec 22, 2024

Audio of this conversation is available via your favorite podcast service.

This week’s guest is Dr. Ruha Benjamin, Alexander Stewart 1886 Professor of African American Studies at Princeton University and Founding Director of the IDA B. WELLS Just Data Lab. Dr. Benjamin was recently named a 2024 MacArthur Fellow, and she’s written and edited multiple books, including 2019’s Race After Technology and 2022’s Viral Justice. Last week, she joined me to discuss her latest book, Imagination: A Manifesto, which was published this year by WW Norton & Company.

What follows is a lightly edited transcript of the discussion.

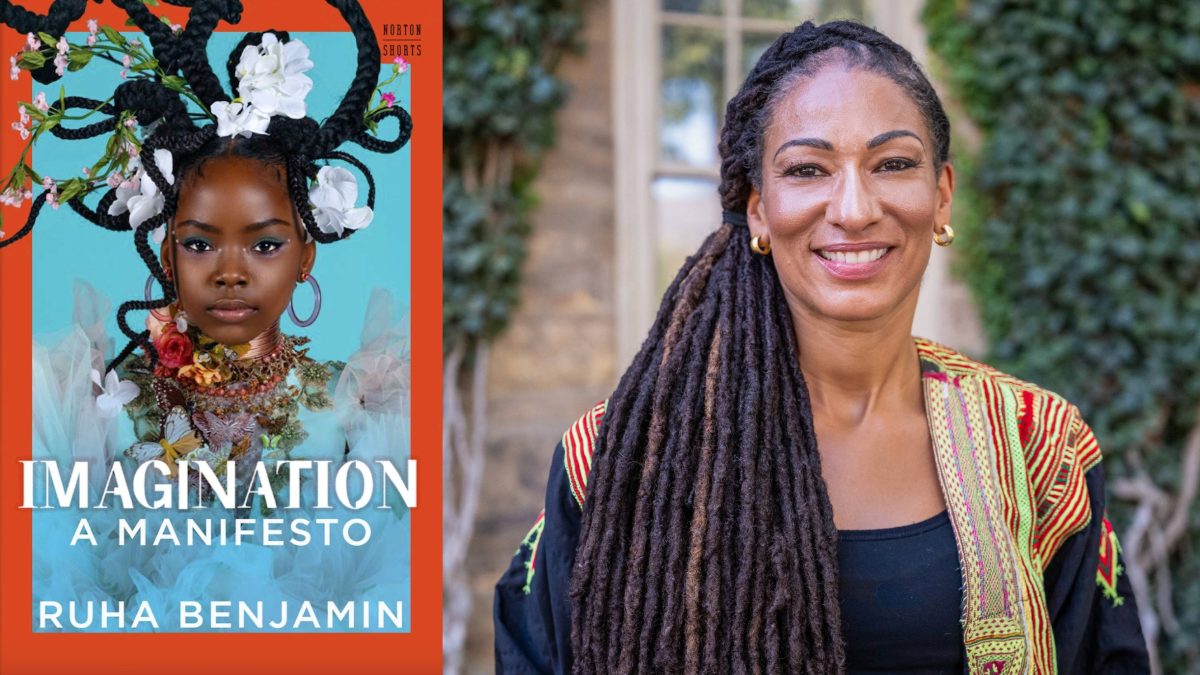

The cover of Imagination: A Manifesto (Norton Shorts, 2024) next to a photo of Dr. Ruha Benjamin. Photo © John D. and Catherine T. MacArthur Foundation–used with permission.

Dr. Ruha Benjamin:

I am Ruha Benjamin. I'm Alexander Stewart 1886 Professor of African American Studies, the founding director of the IDA B. WELLS Just Data Lab, and author of several books, including my latest, Imagination: A Manifesto.

Justin Hendrix:

Professor Benjamin, I'm so pleased to have the opportunity to speak to you. This is the last podcast recording that I'm doing for the year 2024. I've put out almost 85 episodes, I think, this year. A good number of them have focused on books, and I'm quite pleased to make this one the last one that we'll talk about together. And I hope that it'll leave the listener both maybe reflecting but also looking ahead and thinking about next year possibly in an optimistic way, although we'll see where we get to in the course of the discussion. So we're going to mostly focus on Imagination: A Manifesto, but I think I'll try to bring in reference to some of your other prior books as well. I want to start just with definitions. You say in this book, in most cases, you're talking about collective imagination as when we imagine different worlds together, writing shared stories and plotting futures in which we can all flourish. Is there anything else that you'd want to say to define the term imagination?

Dr. Ruha Benjamin:

Yeah, I think partly what I want to do with, whether it's the definition or our common conception of imagination, is to insist that imagination is not a straightforward good. We often cluster imagination with words like play and creativity, and that brings kind of feel-good vibes. What I want to say is there are very harmful forms of imagination, dominant imaginaries. One that I talk a lot about in the book is a eugenics imagination that looks at different groups, ranks them, and considers some people more disposable than others. And so implicit in however you want to define imagination, I want us to leave room for the good, the bad, and the ugly, and to invite us to invest more energy in cultivating forms of imagination that foster our collective well-being, that reflect our inherent interdependence as people and planet. And I think to do that, we have to take imagination seriously. We can't dismiss it as flights of fancy or something that we kind of do if we have time when we daydream, but to say we need to carve out more space in our discourse, in our work, in our lives for imagination as a battlefield.

Justin Hendrix:

So imagination is a kind of force in the world, something that is in a competitive environment. We're seeing imaginations compete with one another. Sometimes, we call that politics, but it seems like it's other things as well.

Dr. Ruha Benjamin:

Yeah, I think that's partly why I like to zoom out and frame the kind of background noise, or the foundation, of all these other things that are more obvious and evident: the things that get codified in our laws and our policies and our infrastructure, that we can see and point to. It is to say that behind them there are other logics, values, and perceptions that occasionally materialize so that we can see them, but often stay in the backdrop. Because in my view, the more powerful a force is in the world, the less evident it is. The power comes from its invisibility, our inability to point to it and to direct our attention to it for critique or otherwise. And so, in naming the different forms of imagination that become encoded, that infect our institutions and our laws and our various social systems, that to me is a first step in putting those in their place and beginning to cast a critical light on them. So, the first step in any power struggle is to name the realities that we're struggling over.

Justin Hendrix:

I find that in a lot of the conversations I'm in lately, especially after the US election, a lot of folks are coming to grips with just how difficult a prospect the next few years will be, the next few decades. There's almost a sense of looking further out, thinking longer term. I don't know if you're feeling that in some of the conversations you're having as well, but one of the reasons I was excited to talk to you is I think your book invites us to think in a longer term. How has your relationship with the future changed in these last few months?

Dr. Ruha Benjamin:

Yeah, I think it's become, in many ways, as you describe, more directed to longer, medium-term futures when you feel like you've lost a battle, as many people do. I don't necessarily, but I wasn't invested in this particular electoral struggle in the same way that many people around me were. I think it makes sense to say if, in the next three to four years, these things seem impossible to do or to engage in, then I need to think further out. And I do think it's helpful to think in that longer term in terms of how we invest our energies. One way to understand what we're struggling against is that there are powerful forces that want to make the very conception of a society seem like a foreign idea, like an impossibility. This idea that we are responsible for each other, that we need to care for one another, and that care needs to be encoded in our infrastructure, in our social safety nets, in our institutions.

And there are powerful individuals, organizations, and parties that want us to revert back to this very anemic idea that we're just floating individuals. Our self-interest is what wins the day. And so over the long term, I think whether you think a hundred years or even the next 20, 25 years, we are struggling over the same very basic terrain, one part of which is that we do live in a society where we are responsible for each other. And so one way to direct our energies is to think about how our own work, our own purpose here, is to invest in society-building practices, things that actually foster these social connections, these safety nets. And while certain things might be impossible or seem impossible in the short term because of who happens to be in the White House, I think there's still so much we can do. Whether some people think about it at the local level, whether some people think about it at the transnational level, there are different scales at which we can be working to really foster these social connections and relationships and infrastructure.

Justin Hendrix:

So, you talk about this book as a proposal for exorcising our mental and social structures from the tyranny of dominant imaginaries. You've already mentioned one of those, the eugenicist framework that you look at in the book. Are there other dominant imaginaries that you think the listeners should have in mind for this conversation?

Dr. Ruha Benjamin:

One is this idea of winner-takes-all, or the idea that for me to do well, you have to lose or vice versa, this zero-sum game as McGhee talks about in her book. And so I think part of it is to shift from a model in which we see this hyper-competitiveness between groups as natural and inevitable and really to draw on both data and stories and traditions that counter that, that understand that for me to do well, you have to do well, and vice versa. It's a shift from a charity model of change, where we're trying to help the underprivileged or the have-nots, to a solidaristic model, which is that my wellbeing is intimately bound up with yours. In that light, I think for those who are motivated by data and empirical information, there's plenty of evidence to suggest that in contexts, whether at the local, state, or national level, where there's a wider gap between the so-called haves and have-nots, the haves in those places, the people who are supposedly privileged, do not fare as well as the so-called haves in places where there's greater equity and justice. That means inequality makes everyone sick at some level. It harms not just the obvious targets of harmful systems, but also those who are the supposed beneficiaries, whether by increasing their anxiety or, as colleagues of mine have written about, through deaths of despair among white Americans: higher rates of substance abuse, suicide, mental health problems.

And one way to understand that is that it is unnatural and unsustainable to erect a society with these fault lines in which there's this monopolization of power and resources on the part of a very small group at the expense of everyone else. And so this is an invitation to me for us to think about the way forward being one in which everyone benefits by these changes, investing in greater equity and justice, not only those who are the obvious targets. So to recap, another very harmful idea is this idea of a "winner takes all," zero-sum game, and we can move to a more solidaristic understanding of our relationship with each other and the way forward.

Justin Hendrix:

You talk about deadly stories of entitlement and domination that are all around us. This book is not about tech alone. Some of your other books, of course, are, but when I read those words, I think about our current tech imaginaries, I think about the authoritarian imaginaries, all of which seem to be gaining ground around us. There are big marketing budgets and also sometimes guns and bullets behind these imaginaries. How does tech fit into the way you think about these things?

Dr. Ruha Benjamin:

Yeah, unfortunately, I get to speak to tech audiences quite often. And one of the recurring fallacies that comes up, again and again, is the pitting of innovation against these more social concerns around equity. The idea that we can't sacrifice innovation by doing X, Y, and Z that focuses on the world, the different groups, how they benefit, how they're harmed. In my view, we should not even consider new developments as innovative if they continue to exacerbate these social problems, if they require the surveillance of certain groups, if different predictive tools foreclose the futures of particular populations. That is to say, built into our definition of innovation when it comes to technology, there needs to be a centering of the social, the political, the economic foundations of technology. We can't think of tech innovation as over and above these other contexts. And so this is an invitation for those in tech, and also those who are advocating on the outside, not to adopt that false binary between innovation and equity and to say one sacrifices the other, but to say no, we need to completely redefine what counts as innovation: that it has to be human-centered, that it has to be attentive to the social inequities that it exacerbates but could potentially counteract, et cetera.

And so I think that is one of the lessons or invitations in the book for those who are invested in tech innovation.

Justin Hendrix:

This reminds me that in one of your prior books, Race After Technology, you talk about the idea of recasting who we think of as innovators.

Dr. Ruha Benjamin:

Yes, absolutely. And so I'll give you a quick example that hits that point home. There's a group of researchers who were developing an AI prediction tool in the healthcare system, and they realized that if they trained this AI based on doctors' reports, specifically reports about pain treatment and pain diagnosis, which are known to be deeply biased, specifically against black patients (that is, their pain is routinely underdiagnosed or ignored, and that's reflected in these doctors' reports), it was going to reproduce that same invisibility of black pain in this predictive tool. So what they did was, instead of training it on the doctors' reports of pain, they trained it based on patients' own self-reports of pain. They shifted where they went looking for the knowledge and the insight. When we continue to only look at the traditional sources of knowledge, ignoring the biases and forms of discrimination that are built into those records and data, then our so-called innovation reflects the past. It reproduces those patterns. And so to the credit of this team, they did their homework. They didn't just try to create something that was efficient technologically, but something that was also socially just. And not only were the predictions more accurate, but they were also less biased. And so there are ways to approach this, but it requires us to shake out of these mental models that associate knowledge only with those who have the letters behind their names and to look to other sources of insight and knowledge that are often discounted or ignored.

Justin Hendrix:

On this podcast, we talk a lot about how we think about the role of technology firms, of capital, in defining the future. About halfway through this book, you talk about Sidewalk Labs, its ill-fated project in Toronto, probably one of the more, I guess, illustrative examples of a kind of tech imaginary for an entire city. Why does Sidewalk Labs stand out to you?

Dr. Ruha Benjamin:

So many reasons. It is really exemplary in that it ends up perpetuating so many of these tropes of innovation that are ungrounded, not grounded in reality. And so part of what's important to note is that any of these initiatives that are selling us on a future have to at least pay lip service to being responsive to people's needs. There needs to be some aspect of what we might call democratic theater involved, where the architects of this plan need to seem like they're listening to the residents, in this case holding these kinds of town hall meetings in a way that's very self-serving. Because in one case with this Toronto example, they invited Indigenous members of the community to come to consult, to provide their insights, and then issued a report based on this series of consultations that completely ignored their recommendations. And so again, part of what's illustrative is the veneer of progress, the veneer of participation that often surrounds the marketing of many of these smart city initiatives or other kinds of tools and technologies.

And when you look beneath the surface, it's the same kind of actors. It's like it's still Google. It's still the companies creating these visions and then trying to engender buy-in rather than working from the ground up, starting with the insights, experiences, and desires of people. Now in saying that about Sidewalk Labs, another set of initiatives that I didn't get a chance to write about, because I've only been learning about it recently, comes from a studio called Estudio Teddy Cruz + Fonna Forman, based in Southern California. They're a team that collaborates deeply with the communities that they're designing for, taking their insights as a starting point rather than coming in with their own agenda. And they've created a number of projects in terms of housing, in terms of mixed use, in terms of skate parks for youth, in terms of transient settlements for migrants. So for those who want a different vision of how you do this, one that's counter to Sidewalk Labs, they're out there, and one of them is this studio, Estudio Teddy Cruz + Fonna Forman, which is showing us what it looks like to start with participation, deep, genuine consulting of the communities that you are collaborating with, not simply designing for.

Justin Hendrix:

You also point in the book to the People's Advocacy Institute in Mississippi. Having had the opportunity to go to Jackson, I remember leaving there thinking, if we thought about the future and we thought about Jackson, what could happen here? Maybe that would be a different way to imagine the future of this country. In many ways, Jackson seems like such an important place to me.

Dr. Ruha Benjamin:

Absolutely. Cooperation Jackson is this set of projects that are all connected, whether it's farming, there's a tech maker space, there's recycling, there's housing, but all of this is also happening in a context of organized scarcity. That is, the state of Mississippi is really trying to cut the city off from the resources that it needs to thrive. And so, even under these conditions, people are able to innovate in the real sense and materialize their creativity in a bottom-up way. And so bridging what's happening in Jackson to what's happening on the US-Mexico border with the first example I described, one of the phrases that I think is useful that comes out of that work is that these everyday community-based practices of innovation and world-building need to trickle up into planning, into policy. We often talk about things trickling down, but the knowledge of the people in these places needs to trickle up to inform the so-called experts and bureaucrats and technocrats that think they know it all.

Justin Hendrix:

Maybe I'll just press you a little more on the border as a place where we can imagine the future. It's another topic we've addressed recently on this podcast. We had an author called Petra Molnar, who references your work many times in her book on border technologies. I'd just invite you to say maybe a little more on why you think borders are so important.

Dr. Ruha Benjamin:

Borders are, again, one of these sites where we're being sold technological fixes to these social and humanitarian crises, where the idea of so-called smart borders is the corollary to smart cities, that is, technological fixes for urban problems that are often just encoding them in a different guise. So on the borders we're being sold robotic dogs that are more humane. We're sold drones and infrastructure that's out of sight to surveil populations by land and sea. And it's not just the US-Mexico border, but also what some call Fortress Europe, which I'm sure Petra talked about in her comments, where it brings together all of these different governmental departments to create what they call the system of systems. That's really just even more elaborate surveillance. And so we have to ask ourselves, what is this a fix for? It's certainly not a fix if you think about the plight of migrants or people who are trying to escape certain forms of tyranny, economic depression, et cetera.

When you think about who is commissioning these systems, the tools reflect their self-interests. And so if we want different outcomes, we don't want different tools. We need to invest in what some call public interest technology that's created from the start with the public good in mind, not private interests and not just the select interests of a small sliver of the public who is buying elections and pushing politicians around. What's happening at our borders is not divorced from what's happening in our cities and what's happening in our neighborhoods because oftentimes the same infrastructure, the same tools are trafficking back and forth between these. So we need to care about what's happening even when it doesn't seem to affect us directly, because eventually it will.

Justin Hendrix:

I'm sure there are a lot of listeners to this podcast listening to you and saying, yes, agree with you on all of these things. How do we do something different? Yet I'm talking to you on a day that we know the Biden administration’s thinking about how it can relax rules on federal lands to allow for building massive data centers. I just read last week an industrial policy plan that OpenAI put out where they're imagining rebuilding American cities, and of course data centers and artificial intelligence are at the heart of that. And in many of the documents that have come out of the Biden administration, and I'm sure will come out of this next administration, the kind of role of private companies, especially the big ones, is blending with the kind of government interest in a way that it seems the deal's been made, right? The future will be tech firms and their tools and their AI, and the government's along for the ride, as long as we can kind of keep the thing together. I don't know, how do you think about this at the moment? What is the kind of opportunity for any of these potential different imaginations to break out in the face of these kinds of powerful forces?

Dr. Ruha Benjamin:

I will admit that when we do focus solely on these sort of macro-level developments or looking up at what policymakers and politicians are doing, it seems very daunting. And the ability to think beyond this seems nearly impossible. And that's why I think in my own work and in my teaching, I really try to use the little bit of time I have people's attention to shine a light on what's happening beneath the surface that doesn't get headlines and hashtags. So for example, over the summer, I went to a gathering called Take Back Tech that brought together organizations and movement builders from different countries, including many across this country who are organizing on all of these different fronts from organizers from Virginia who are fighting the building of data centers to people who are trying to create more distributed platforms that aren't centralized and can evade surveillance all across the board.

For every aspect of this that we think of as the source of the problems that we're up against, there are individuals, groups, and movements that are working, and in some cases it actually benefits them to be under the radar. We don't want to share all of the strategies and struggles that are happening. But I do think, listeners, it's important to know that there are ways to plug into these counter-movements, counter-imaginaries, to lend your own energy and insight, not to drive them, because they're being driven by people on the ground who are most affected by the harms associated with them, but to lend our energy and our time to them. And so one stop for those who want to learn more is to go to the Take Back Tech website to see the different panels, organizations, speakers, where in the world they are, see what's closest to you, plug in, reach out to them, donate, fund these efforts, because they are out there.

And one of the things that I learned as a child from my grandma is that whatever you water grows. And so if we only focus on the harms, we focus on the things that we want to get rid of without naming and shining a light on the things we want to grow and have more of, then we're only doing half of the work. So we want to couple our criticality, the things we're critical of, with creativity, with investing our time and energy in what we want to see more of. And it's out there. You'll learn about some in the book, but lots more on the resources tab of my website. And also if you go to some of these websites like platform co-op dot org. There's an organization that my colleague Timnit Gebru has started called the Distributed AI Research Institute. And one of the things that they are working on, that I think is worth just noting here, relates to how we talk about a lot of the issues with the labor behind AI.

That is, the people who make these systems appear magical, whether it's data workers in Kenya or content moderators in the Philippines, who are really harmed by the constant sort of intake of the worst of the internet. But now there's an initiative called the Data Workers Inquiry, which is workers themselves doing research on their own conditions and advocating and organizing around that. And I got a chance to attend one of the Zoom panels, which listeners can go in and listen to if they search “data workers inquiry” and go to their website. Again, it's the people most affected who are leading the charge, who we have to learn from and listen to. It's not being done for them, but they are driving the changes that need to happen in terms of the workforce behind these AI systems. And so the good work is out there. We just want to invest, donate, and lend our energy and attention to those as well.

Justin Hendrix:

You've mentioned that you're an educator, that you spend a lot of time with students. In the book, you write about your childhood, how you used to play school on your front porch, and how that sort of stuck with you all the way through. This book contains exercises, some useful thought starters and exercises that folks can go through themselves to think about the future differently, think about imagination differently. I can't help but ask, is there one we could do right now?

Dr. Ruha Benjamin:

Sure. Yeah. One of the intro exercises that's fun, both to break the ice and to challenge the kind of credentialism and expert-lay divide that often shapes our public discourse, is called MSU. And MSU stands for making shit up. In this exercise, we ask each other: what kind of degree do you have, beyond the typical academic subjects, in terms of different interests, eccentricities, quirks that would tell me something about you that isn't obvious from, let's say, your resume? And so if I had to give you an MSU diploma, what would be on the diploma, Justin? What would be your BA or MA, whatever?

Justin Hendrix:

I have to say I grew up in a Navy family, moved around a lot as a kid, and for whatever reason, every house we moved into was substandard. And so my father spent a lot of time rebuilding and remaking and adding on and always plugging holes and variously doing stuff like that. And I have taken up that work in an old house in Brooklyn that's generally falling down around me, which has led me to also be somewhat of a resource for neighbors and others on the block who either need to borrow a tool or maybe battle-test some cockamamie plan to fix something or other. Yes. So I guess that's one of my credentials beyond what Tech Policy Press listeners would generally think of.

Dr. Ruha Benjamin:

Absolutely.

Justin Hendrix:

What about you?

Dr. Ruha Benjamin:

And so mine aren't as vital. That's like an essential skill you have there that we will need in the apocalypse. And so everyone note: we go to Justin's house to figure out how to do that. What I would say, since what often gets highlighted are the formal titles and so on, is that I have a BA in being an introvert who can still do public things. I have a master's degree in not answering my phone because I have a phone allergy, and I have a PhD in sending just the right GIF in response to a text thread. So I have a real skill at finding the right little GIF to send people. Again, not too serious, but it also gives you a sense of, I have a sense of you, you have a sense of me, beyond the heady, weighty things that often get highlighted in our bios.

Justin Hendrix:

And how does that open things up for us? Then we can have a different type of conversation about imagining, I don't know, if we had to rebuild institutions around us. You went to the word apocalypse. Is that kind of what you're thinking there?

Dr. Ruha Benjamin:

For me, it's a starting point. If we're sitting in a group and we start with something like this, or a related exercise where we think of ourselves in an Earthseed community, which is from the writer Octavia E. Butler's speculative fiction, from Parable of the Sower, and we think about what skills we all bring that would help us create this community from scratch, definitely yours would fit into that exercise. But part of it is to see one another in a different light beyond the rubrics of the institutions. And so oftentimes, the ways we engage with each other are strictly through the titles, the expertise that have been granted to us by these other entities rather than those things that are self-fashioned, how we understand ourselves when no one is looking, what we bring to the table. And I think that shift in perspective from the institutional gaze to the more community-oriented or bottom-up gaze opens up a different set of possibilities in terms of how comfortable we are expressing our ideas, our experiences.

It brings different histories into the room. Rather than starting a conversation assuming we're starting this just from this point on, we're thinking about what and who we bring into the room with us. And so I think it just shifts the starting point to have a more robust, equitable conversation where, let's say, the people with the technical degrees don't get to trump what everyone else thinks just because they happen to study X, Y, and Z. There are other kinds of insights and knowledge that we want to foreground in any kind of world-building exercise or any kind of consultation period that moves beyond just those typical academic hierarchies.

Justin Hendrix:

Should we talk about the bees?

Dr. Ruha Benjamin:

Yes, absolutely. Yes. So right now, I'm looking out in my backyard and I have my hives sitting there. My family and I started with these bees at the beginning of the pandemic. And so I've become a bee nerd, learning everything I can about these little creatures. They have a lot to teach us about society, about human interaction. One of the many lessons, and I talk about several of them at the end of Imagination, one that I think is particularly relevant always, is the fact that the bees that were in my backyard over the summer working mightily, filling those hives with honey, buzzing, buzzing, stinging anyone who was a threat, which was important, et cetera, those bees, their lifespans aren't long enough to survive now through the winter. So they did all of that work to ensure the resources would be there for the next generation of bees that are now sitting and working in those hives.

And so part of it is a lesson about what we owe the next generation and the fact that much of the labor that we're all putting in now, we're not going to necessarily see the fruits of that in our lifetime, or at least not mostly. And so we need to think about who did the work for us, who created the sweetness and the stings to make our lives possible, but also looking forward to think about that kind of intergenerational solidarity or what many Indigenous traditions think of as looking seven generations forward in terms of why we do what we do, what we're responsible for as opposed to just the here and now. And so those bees are teaching us what it looks like to do the work to create the sweetness, the stings, not out of self-interest simply, but out of collective wellbeing to ensure that the group thrives, not just they as individual little creatures thrive.

Justin Hendrix:

You talk in the book about the kind of disregard we have for pre-modern people and cultures and also generally the need to consult with older Indigenous traditions and ideas. How do we get more of that back into the discourse?

Dr. Ruha Benjamin:

Yeah. One of the books that I read early on in grad school has stuck with me. The title is what really sticks with me: We Have Never Been Modern, by Bruno Latour. And part of the conceit there is that a lot of the things that we associate with modern life still have so much superstition, so many stories and beliefs built into them, including so much of the hype around AI and what AI will be able to do. There's a kind of religiosity around the creation of these systems and the doom and the hype around them. And I've written a little bit about that in a new essay called The New Artificial Intelligentsia, which was in the LA Review of Books. But so part of it is to push past, again, that binary between what we consider modern and pre-modern.

And in doing so, we realize all of the things that we perhaps dismissed, cut off, looked down on as pre-modern that we need today in terms of our ecological crises, definitely our relationship to the earth, to resources. Precisely because we have fallen into the superstition of the tech elite, or what I call the new artificial intelligentsia, that set of beliefs is precisely what is behind the kind of extraction and immiseration of the planet and many people on it. In my view, the AI that we should be investing more in is not 'artificial,' it's 'ancestral' intelligence. It's the collective know-how, the wisdom that has been cut off from us in part because of who it's associated with. And so these hierarchies of knowledge map onto these social hierarchies of race, gender, class, nationality, and religion, such that we associate some with authoritative knowledge and some with mere tradition, mere everyday forms of experience that are not privileged. And so I think AI, in the ancestral sense, needs to have a greater role in our deliberations and in the knowledge that we look to in order to build the world that we want.

Justin Hendrix:

What's next for you? 2025? Is there another book on the way?

Dr. Ruha Benjamin:

It won't be out in 2025, but I am working on it now, tentatively titled Ustopia. And the subtitle, which I'm sure my editors will change, is A Bullshit Detective's Guide to the Future, because I want us to be able to identify the bullshit so that we can also identify the brilliance that is in our midst. And 'ustopia' is a word that I'm borrowing from a science fiction writer, Margaret Atwood. But essentially it's this idea that we are being scared into fearing dystopias and we're being excited and made to be enamored with utopias brought on by technology. And what I suggest is that we move past both of those narratives and ideas of doom and hype and remember that the future is us. Whatever happens in the future, whether it's loathsome or loving, is going to be a reflection of who we are and what we make it to be. And so the book is both a critique of the bullshit and also a celebration of the brilliance, if only we invest in it.

Justin Hendrix:

We needed a little bit of both, and I'll look forward to reading that one. Perhaps I'll even have the pleasure of having you back on this podcast sometime.

Dr. Ruha Benjamin:

Absolutely.

Justin Hendrix:

Dr. Ruha Benjamin, author of Imagination: A Manifesto, as well as many other titles. Thank you for joining me.

Dr. Ruha Benjamin:

My honor. Thank you for having me.
