How Tech and Civil Society Are Nudging Trump on AI Policy

Cristiano Lima-Strong / Mar 25, 2025

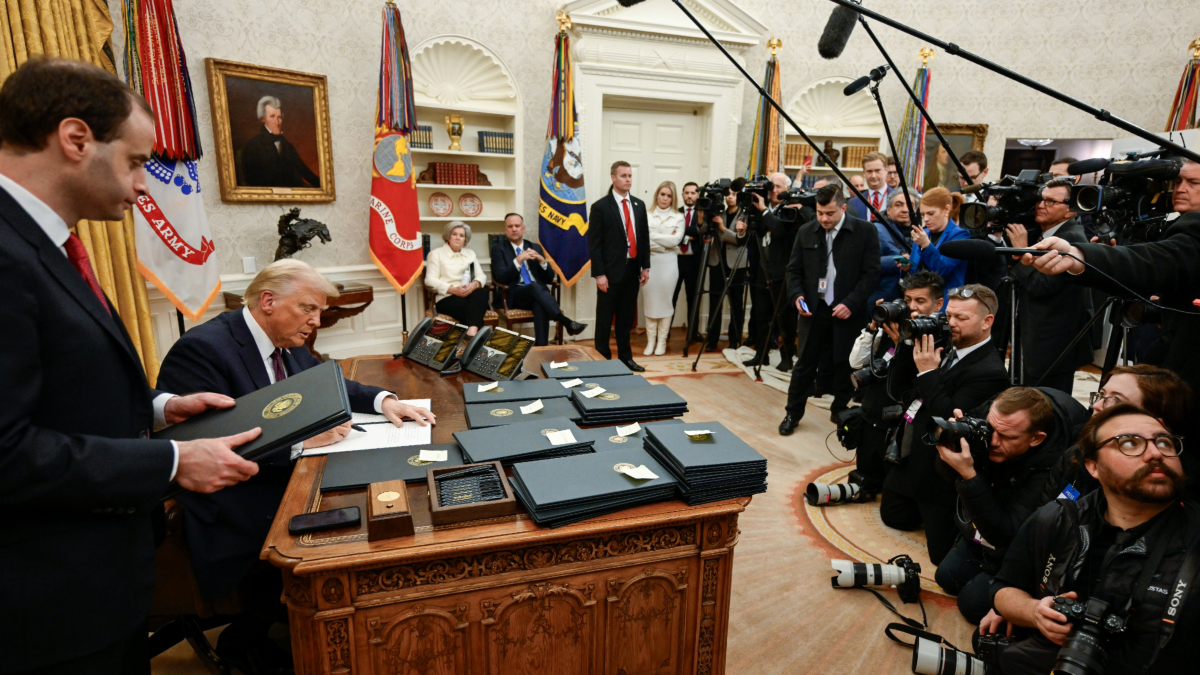

Washington, DC—January 20, 2025. US President Donald Trump signs a pile of executive orders. White House

In February, the Trump administration sought public comment for a federal “Action Plan” on artificial intelligence and gave outside groups until March 15 to offer input. In a statement, Lynne Parker, principal deputy director of the White House’s Office of Science and Technology Policy (OSTP), called it the “first step in securing and advancing American AI dominance.”

According to the Federal Register, the administration received over 8,000 submissions in response to the request for comment, but it has not yet made them public.

Tech Policy Press is collecting and summarizing key points across these submissions, some of which groups have publicly released or agreed to share with our team, building on earlier round-ups by Just Security, Axios, Punchbowl, Platformer and others.

(This piece will be updated. You can flag submissions via email.)

Nonprofits, Think Tanks, and Advocacy Groups

- Center for Democracy and Technology (CDT): The nonprofit said the action plan should urge the National Institute of Standards and Technology (NIST) to continue developing “voluntary” standards for AI risk management, follow six best practices for federal government use of the tools, invest in the National AI Research Resource (NAIRR) and take both “regulatory and non-regulatory” steps to ensure private companies are promoting individual rights in AI.

- Electronic Frontier Foundation (EFF): The privacy and digital rights group urged the administration to implement a “robust public notice-and-comment practice” for federal AI procurement and to favor technologies developed with open-source software. The group also warned against “overly broad regulations” that expand copyright law or embrace AI licensing.

- Open Markets Institute: The anti-monopoly think tank urges the administration to use merger control rules to scrutinize and potentially block mergers and partnerships in the space and to enforce existing privacy and consumer protection laws to “hold AI companies accountable” for violating their privacy policies or terms of service. It also calls for regulating cloud computing as a “public utility” and investing to develop “public computing capacity.”

- Hispanic Tech and Telecommunications Partnerships (HTTP): The Latino roundtable group called for creating “private-public AI skills partnerships” to boost training in the field, establishing a national apprenticeship program that incentivizes employers to participate and forming regional hubs in areas with large Hispanic populations to “facilitate AI adoption.”

- Center for AI and Digital Policy (CAIDP): The nonprofit research center called for fully funding the AI Safety Institute, advancing an Office of Management and Budget (OMB) memo on AI procurement, ensuring that “human roles” in AI development are “clearly defined,” implementing federal oversight of “safety protocols for advanced AI design and development,” and establishing “red lines” against AI systems violating privacy, civil liberties or civil rights.

- Encode: The youth-led advocacy group called for fully establishing and funding NAIRR, formally designating frontier models as critical infrastructure to bring them under cyber incident reporting requirements, expanding protections for AI whistleblowers, growing the pool of visas for skilled AI workers, and criminalizing deepfake non-consensual intimate imagery.

- Other submissions: Center for Security and Emerging Technology (CSET), the Investor Alliance for Human Rights, and the Center for AI Policy.

Tech Companies, Trade Groups, and Venture Capitalists

- Google: The tech giant urged the administration to “supercharge” US AI development by investing in AI infrastructure to tackle “surging energy needs,” to develop frameworks that preserve data access for “fair learning,” to combat “foreign AI barriers that hinder American exports and innovation” and to “preempt a chaotic patchwork of state laws” on AI development. It also warns against exposing AI developers to “responsibility for misuse by customers or end users” and against “mandated disclosures that require divulging trade secrets.”

- OpenAI: The ChatGPT-maker called for the US to incentivize companies to partake in voluntary AI risk standards by creating a “glide path for them to contract with the government,” to preserve AI models’ “ability to learn from copyrighted material,” to revamp the AI Diffusion rule, to create investment vehicles like a sovereign wealth fund to boost development, to “modernize” the US energy grid and to preempt state bills like California’s SB 1047. The submission warns of competition from China on AI and the risks of foreign models like DeepSeek.

- Anthropic: The AI startup called for developing methods to vet AI systems for national security risks through the Commerce Department’s AI Safety Institute and through NIST standards, for expanding export controls on “computational resources” (like semiconductors), for amending the AI Diffusion rule, for fostering information sharing between AI developers against foreign threats and for rapidly building up energy allocation for the AI industry by 2027.

- Andreessen Horowitz (a16z): The venture capital firm calls for “establishing” the US federal government as the leader of AI regulation. It advocates regulating against harms while averting “onerous” restrictions against risks that “make it harder for Little Tech to compete with larger platforms,” clarifying that copyright law allows developers to train on copyrighted works, investing in education to strengthen the AI “pipeline” and supporting open-source models.

- IBM: The tech giant urged the federal government to use “AI-driven analytics” to crack down on fraud, to focus on deploying AI models in IT and cybersecurity, to centralize the use of AI assistants across agencies, and to “ruthlessly eliminate manual processes” via automation.

- NetChoice: The tech trade association is urging the Trump administration to “settle Biden’s antitrust cases against major AI investors and innovators,” including the Google search case, to roll back pre-merger notification requirements imposed under former President Joe Biden and to support a federal privacy law that preempts state measures. (Note: The Justice Department filed the Google search lawsuit during the first Trump administration.)

- Other submissions include: Palantir, SIIA, CCIA, CTA, and BSA.

Publishers, Telecoms, and Other Industries

- Digital Content Next: The digital content trade group urged the Trump administration to allow the courts to resolve issues around AI training and copyrighted works but called on it to “emphasize respect for US copyright law” as a core principle of its approach. It also said the plan should “endorse transparency obligations” that disclose when AI models are trained on copyrighted material and “encourage the free market licensing of content by AI companies that want to use protected content in their models or in AI-generated outputs.”

- News Media Alliance: The news media trade association also called for voluntary “free market licensing” between content producers and AI developers and for disclosures when copyrighted material is used for training, and likewise argued that intellectual property law “does not require revision” to settle disputes over AI training. The group warned of Big Tech “tying their services” to new AI offerings and locking out competitors.

- USTelecom: The telecommunications trade group urged the administration to cut down on “deployment barriers” for fiber connectivity, to clearly distinguish responsibilities and liabilities between developers and deployers of AI systems and to impose “upfront obligations” on developers to promote transparency and mitigate discrimination.
