How AI-Powered Emotional Surveillance Can Threaten Personal Autonomy and Democracy

Oznur Uguz / Oct 7, 2025

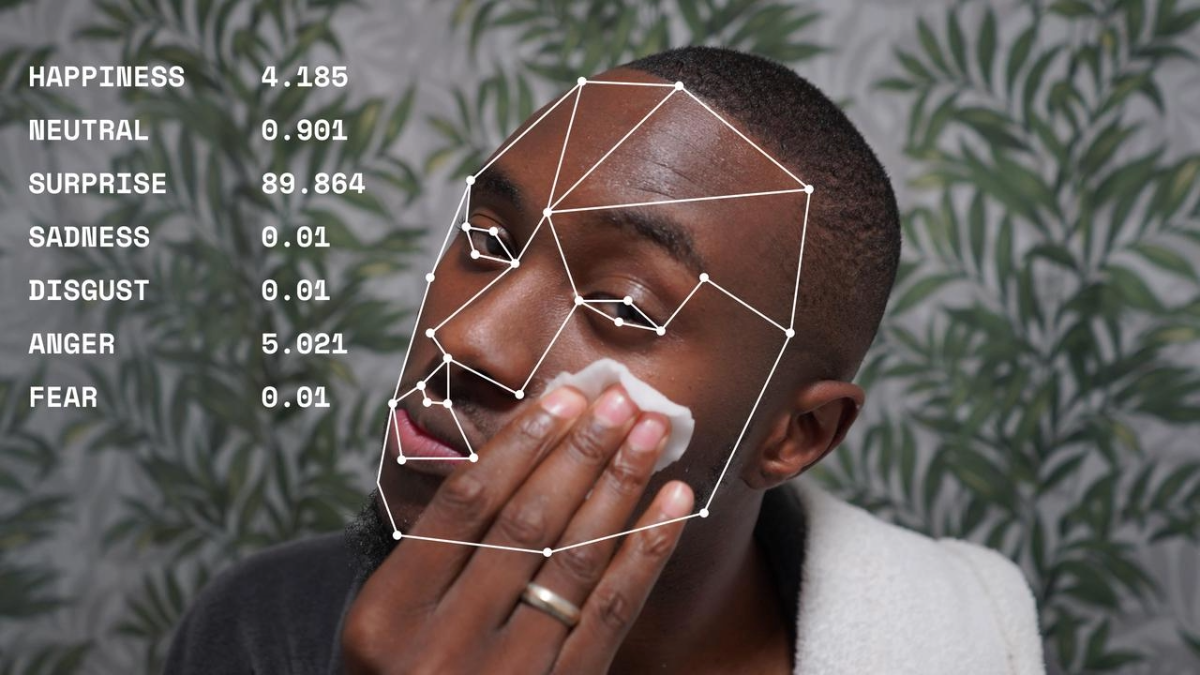

Mirror D by Comuzi / Better Images of AI / © BBC / CC BY 4.0

Emotional surveillance applications powered by artificial intelligence (AI) have recently gained attention, with trials conducted worldwide in areas ranging from education and public services to border management. A few years ago, brain-scanning headbands developed by the American start-up Brainco were trialed on thousands of schoolchildren in China to detect those paying less attention, pressuring students to be attentive or at least to "pretend" to be. In the European Union, an AI border control system with emotion recognition capabilities, iBorderCtrl, was tested in some EU countries to automate border security by acting as a "lie detector" for non-EU nationals seeking to enter the EU. More recently, the transit agency Transport for London trialed emotional AI surveillance at certain London Underground stations, with the stated objectives of preventing crime, measuring satisfaction, and maximizing revenue by attracting more advertisers.

While the privacy implications of emotion recognition technology and its use in surveillance have been widely debated, the potential impact of integrating AI-powered emotion recognition into surveillance practices on personal autonomy and democracy has received too little attention. Emotional AI surveillance is quietly developing behind the scenes, signaling a transformative change that will soon affect each of us personally. With the prospect of emotional AI surveillance becoming mainstream in the not-so-distant future hanging over our heads, a discussion of how it could threaten our autonomy and our democracy is long overdue.

Emotional AI surveillance systems can do more than track and identify people; they go beyond facial recognition. They can sneak into your mind by reading your emotions from your facial expressions, voice, physiological signals, and words, and expose them to those in power, who may use them to control or manipulate you. What is at stake is more than your privacy. It is your mind, what is inside it, and your agency to form your own authentic decisions and act on them. Even more worrying, these systems are not always accurate or free from bias, which means you could also face adverse consequences simply because of technical flaws in the system.

That said, greater accuracy or less bias would not necessarily make these technologies less frightening. Put simply, emotions are a mirror of one's identity. They can give others clues about a person's psychological state, preferences, opinions, and weaknesses. This sensitive information, or even the mere possibility of its detection, could be used to pressure, manipulate, or compel people into thinking or acting in a certain way, against their will and even when they are aware of it. If people restrict their self-expression to avoid repercussions, be it losing a promotion, being denied entry to a country, or being detained, such self-censorship would subvert the very core of their personal autonomy.

Our autonomy is, or is supposed to be, an inseparable part of us; it distinguishes us from other living beings and underpins our rights and freedoms. It entails not only the right to express what we think or feel free from external intrusion or constraint, but also the freedom to act on our own will and determination, including the discretion to keep our thoughts and emotions private. Emotional AI surveillance could deprive us of this discretion.

The mainstream adoption of AI-powered emotional surveillance could create a chilling effect in society, in which people feel compelled to suppress their emotions and change their behavior against their own self-determination. At a societal scale, this would ultimately erode democracy and turn society into a cheap copy of Orwell's Oceania, where any disapproved facial expression is taken as a sign of a criminal mind and punished as "facecrime." We might not, after all, have progressed much since Lombroso's L'uomo delinquente, which tried to derive people's criminal tendencies from their physical appearance. This time, however, such practices are likely to reach beyond criminal justice.

When it comes to what could be done to avoid this grim fate, that is where things get even more complicated.

Newly emerging AI laws fail to provide adequate safeguards against emotional AI surveillance, imposing only narrow bans and restrictions that contain loopholes and broad exemptions. The European Union (EU) AI Act prohibits the use of AI systems to infer emotions only in the workplace and in education, and it exempts applications for medical or safety reasons from this prohibition. The use of emotion recognition systems in other areas, by contrast, is permitted, subject to compliance with the requirements laid down for AI systems classified as high-risk under Article 6 of the AI Act.

Personal data protection laws offer similarly limited protection. The centerpiece of the EU's personal data protection framework, the General Data Protection Regulation (GDPR), applies to "emotion data" only when it qualifies as "personal data" under Article 4(1) of the GDPR. Yet emotion data is not always regarded as personal data within the legal meaning of the Regulation, despite the strong link between emotions and identity. Even when a piece of emotion data is legally considered "personal" under EU law, it may still be processed unless it falls within the "special categories" of personal data described in Article 9(1) of the GDPR, which is not the case for every type of emotion data.

Similarly, human rights instruments such as the Universal Declaration of Human Rights (UDHR) and the European Convention on Human Rights are only partially applicable, since they protect the rights we have over our emotions only in broad terms. This legal gap creates an escape route for harmful uses of emotional AI surveillance, putting at risk not only our privacy and freedom of expression but also our autonomy, which might ultimately cost us our democracy. If we do not properly regulate emotional AI surveillance now, we might soon find ourselves in a world where we have to hide or fake how we feel to protect our privacy and mental integrity.

While this may seem a remote and highly pessimistic scenario, the ongoing trials of emotional AI surveillance around the world and across several fields suggest that such systems will become part of our lives much sooner than we may want to think. Any hesitation today could bring us one step closer to what Winston experienced in Oceania: the land of imprisoned minds.
