Transcript: US House Subcommittee Hearing on the Harms of Deepfakes

Gabby Miller / Mar 13, 2024

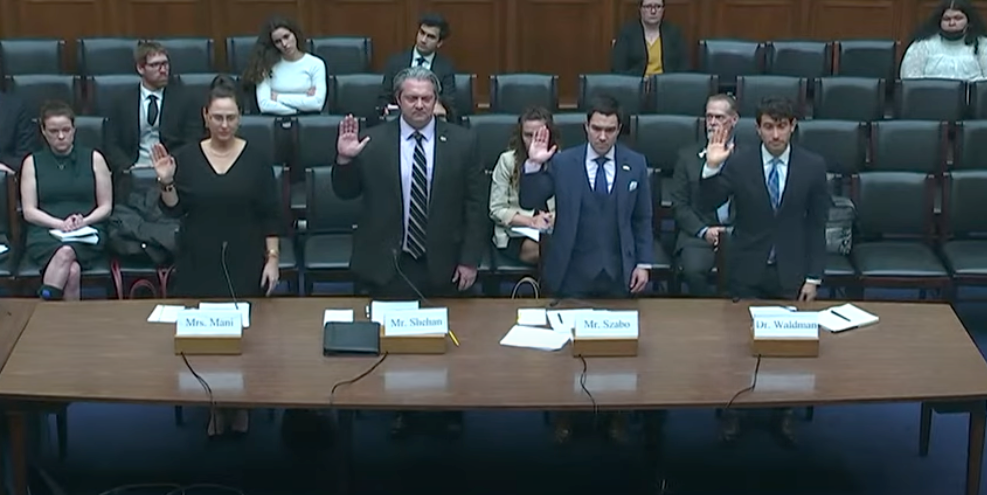

Witnesses from left to right: Dorota Mani, Parent of Westfield (NJ) High School Student; John Shehan, VP of the Exploited Children Division, National Center for Missing and Exploited Children; Carl Szabo, VP and General Counsel, NetChoice; and Dr. Ari Ezra Waldman, Professor of Law, University of California, Irvine School of Law. March 12, 2024.

On March 12, 2024, the House Committee on Oversight and Accountability hosted a hearing titled "Addressing Real Harm Done by Deepfakes." The Subcommittee on Cybersecurity, Information Technology, and Government Innovation, led by Chairwoman Rep. Nancy Mace (R-SC) and Ranking Member Rep. Gerald Connolly (D-VA), invited the following witnesses:

- Mrs. Dorota Mani, Parent of Westfield, NJ High School Student (written testimony)

- Mr. John Shehan, Vice President of the Exploited Children Division at the National Center for Missing and Exploited Children (written testimony)

- Mr. Carl Szabo, Vice President and General Counsel, NetChoice (written testimony)

- Dr. Ari Ezra Waldman, Professor of Law, University of California, Irvine School of Law

What follows is a lightly edited transcript.

Rep. Nancy Mace (R-SC):

The Subcommittee on Cybersecurity, Information Technology, and Government Innovation will now come to order, and we welcome everyone who is here this afternoon. Without objection, the chair may declare a recess at any time. I do want to ask for unanimous consent at this time for Representative [Joseph] Morelle from New York to be waived on to the subcommittee for today's hearing for the purpose of asking questions. So without objection, so ordered, and thank you for being here today. We have a few extra members coming in as well this afternoon. I would like to recognize myself for the purpose of making an opening statement. First of all, I want to say thank you to all of our witnesses who are here today. AI, deepfakes, and non-consensual photos and photography are only getting worse in this country and around the world because of the advent of technology, and we're very eager to hear from each and every one of you today.

If you didn't get a chance to watch ABC this week on Sunday and see the way George Stephanopoulos handled the topic of rape, I would encourage everyone watching this today to go and watch it. I've been working on women's issues for a very long time, and the body of work that I've been doing is only becoming more extensive because of the advent of technology. I wanted to point out today, for my constituents back home, some of the legislation that I've been working on. For example, this is H.R. 5721. It has to do with rape, an issue that is near and dear to my heart. I don't believe in shaming rape victims, and I did a bill that would work on the backlog of rape kits in this country. There are over a hundred thousand rape kits sitting on shelves today that law enforcement has not processed, and I want women here today, those watching this hearing, and those around the country to know that Congress cares.

We care about victims of sexual crimes. And another bill: most recently there was a decision in Alabama about IVF, and about two weeks ago I sponsored a resolution, House Resolution 1043, that talks about IVF and my desire to make sure that we, one, condemn the Alabama ruling, and two, do everything we can to protect women and their access to IVF. And it's not just a women's issue, it's a family issue. It's men and women alike, on both sides of the aisle, who want to start a family. I know that we both want to work to make sure that we protect women and men and their access to reproductive technology and the ability to have a family. Last week I rolled out a deepfake bill, an initiative that would look at the issue from a criminal perspective.

We have a lot of laws in this country on this. Some states have revenge porn laws. Some obviously have peeping tom laws and surreptitious recording laws. But the advent of deepfakes, technology, and AI is really a new frontier, and we'll hear from you all today about this. I filed a bill with some of my colleagues last week that would make deepfakes a crime if they are in the likeness of a real person. This is not a crime yet today, and when the FBI or a prosecutor is looking to charge or indict someone for criminal behavior, it's got to be under Title 18. So we looked at Chapter 88 of Title 18 of the federal code and at how we can make it a crime. I also recently co-sponsored a bill by Alexandria Ocasio-Cortez on deepfakes, but it relates to civil torts.

I've learned a lot in the last hundred days or so, due to some experiences that I've recently had, about our nation's laws and how poor they are on non-consensual recordings of people, whether they're real or whether they're deepfakes. I'm going to be introducing a bill next week, I believe, on voyeurism. Again, looking at Title 18: when the Violence Against Women Act was passed, a civil tort was enabled at the federal level for women who are victims of voyeurism, but there was no crime. It's not a crime to do that at the federal level. I'm sort of astonished that it isn't, but those are just a smattering of the things that I've been working on up here in Congress. I did want to ask unanimous consent to enter two articles into the record this afternoon. One is out of People Magazine, about State House Rep. Brandon Guffey.

His son committed suicide. His son was 17. The title of the article is "His Son, 17, Was Sextortion Victim, Then Died by Suicide. Now, SC Dad Protects Other Kids from Same Fate." This is difficult for me to read, but Brandon Guffey was typing on his phone at his home in Rock Hill, South Carolina. All of a sudden he heard a sound; it sounded like a bowling ball falling and crashing through shelves. Brandon told People Magazine he yelled for his son Gavin Guffey, who was in the bathroom with the door locked. When the 17-year-old failed to answer, Brandon kicked in the door and found his oldest child lying on the floor bleeding. He thought that his son had fallen and hit his head after the shouting from his wife. They called 911. Brandon said he could smell the gun and taste the gunpowder. Brandon's son committed suicide because of being shamed and blackmailed over photographs on Meta, on social media, and it's very hard for me as a mom to hear these stories of kids affected by online scammers on social media. But it gets worse, because with the advent of deepfakes and AI and technology, it's not just real videos you have to be worried about.

It's the fake ones now that can be easily created. The second article I wanted to ask unanimous consent to enter into the record is a recent article in the Post and Courier titled "Member of Aiken Winter Colony Family Still Facing Voyeurism Charges." This guy had a hidden camera in an Airbnb, I guess, and under South Carolina state law, Section 16-17-470, it's illegal to record anybody. A first offense of voyeurism is a misdemeanor. It's only a misdemeanor. The fine is $500, and you face only up to three years in jail. This guy allegedly had, I believe, thousands of videos of unsuspecting victims. And recently, and disturbingly, I learned of a real incident in my district where multiple women appeared to be recorded without their knowledge or their consent. Over a dozen women in my district were affected, and, disturbingly, these videos and photographs that I've been made aware of included sexual assaults.

As a rape victim, to learn about these things is deeply, deeply disturbing. And as I just mentioned, these are the real stories of real women who are victims, but it's worse, because with the advent of deepfakes and AI and technology, it's not just the real videos. I mean, obviously we're worried about those, but now videos can be created out of thin air, and fake videos of real people are out there. We're going to hear your stories today, and some of you, I hope, will touch on legislative options. How do states address this? How does the federal government address this? How do we take care of this criminally? How do we take care of this civilly? Because women who are victims of such a disturbing thing, whether it's real or fake or deepfakes, ought to get justice in this country at the federal and the state level. With AI technology moving forward very fast, here in this subcommittee today we're going to talk about this with policymakers and people and family and moms who've experienced this horrific thing called deepfakes. We're going to hear about child pornography. I can't even talk about what's happening in the deepfakes and AI world with child porn. It's all deeply disturbing, and I look forward to hearing everyone's testimony today, how we move forward from here, and how we make sure that everyone who's been a victim has their voice heard and gets justice when this happens. Thank you, and I yield back to my colleague from Virginia, Mr. Connolly.

Rep. Gerald Connolly (D-VA):

Thank you for acknowledging the importance of this hearing during Women's History Month. I'm grateful that we're gathered here today to highlight a sensitive but very deeply troubling subject. A 2023 study found that 98% of all online deepfake videos were pornographic and that women were the subjects in 99% of them. Our hope is that today's discussion will underscore the need for policy solutions that end the production, proliferation, and distribution of malicious deepfakes. Earlier this year, artificial intelligence-generated pornographic images of American pop star Taylor Swift rapidly spread on social media. X, formerly known as Twitter, proved very slow to act, and as a result the images received more than 47 million views in a matter of hours before X finally got around to removing them. Despite the images' removal, the explicit deepfakes of the singer remain elsewhere online, and no laws exist to stop other malicious actors from reposting that material.

Again, the fact that Ms. Swift, a globally recognized icon who built a $1 billion empire, cannot remove all non-consensual deepfakes of herself emphasizes that no one is safe. Deplorably, children have also become victims of deepfake pornography. Last December, the Stanford Internet Observatory published an investigation that identified hundreds of images of child sexual abuse material, also known as CSAM, in an open dataset that AI developers use to train popular AI text-to-image generation models. While methods exist to minimize CSAM in such a dataset, it remains challenging to completely clean or stop the distribution of open datasets, as the data are gathered by automated systems from a broad cross section of the web and lack a central authority or host. Therefore, tech companies, leaders, victims, advertisers, and policymakers must come together to build a solution and address the issue head on. Mrs. Dorota Mani, thank you for coming here today and bravely sharing your family's story.

You and your daughter Francesca have proven to be fierce advocates against the creation and proliferation of non-consensual deepfake pornography. You're providing a stalwart voice for countless others victimized by AI-generated deepfakes. I know President Biden has heard your heartfelt request for help, because during his State of the Union address just last week, he explicitly called upon Congress to better protect our children online in the new age of AI. I also want to thank my multiple Democratic colleagues who requested to waive onto the subcommittee today to speak out against harmful deepfakes. One of those members, Representative Morelle, introduced the Preventing Deepfakes of Intimate Images Act, which would prohibit the creation and dissemination of non-consensual deepfakes of intimate images. As a co-sponsor of this bill, I see that legislation as a great first step to preventing future wrongs that echo the plight of your family, Mrs. Mani.

Recent technological advancements in artificial intelligence have opened the door for bad actors with very little technical knowledge to create deepfakes cheaply and easily. Deepfake perpetrators can simply download apps that undress a person or swap their face onto nude images. That's why, if we want to keep up with the rapid proliferation of deepfakes, we must support federal research and development of new tools for the detection and elimination of deepfake content. In addition, digital media literacy programs, which educate the public about deepfakes, have demonstrated effectiveness in equipping individuals with the skills to critically evaluate the content they consume online. But we cannot have a fulsome discussion without acknowledging that some of our colleagues, including members of this very committee, have actively worked against rooting out the creation and dissemination of deepfakes. This Congress, the House Judiciary Committee's Select Subcommittee on the Weaponization of the Federal Government has relentlessly targeted government agencies, nonprofits, and academic researchers who are on the front lines of this very work.

These members have stifled the efforts of individuals and advocacy organizations actively trying to combat deepfakes and disinformation. For example, the Select Subcommittee accused the federal Cybersecurity and Infrastructure Security Agency, CISA, of colluding with Big Tech to censor certain viewpoints. These members argued that CISA's work to ensure election integrity, which in part includes defending against deepfake threats, is censorship. They've also attempted to undermine the National Science Foundation's efforts to research manipulated and synthesized media and develop new technologies to detect it. Most recently, on February 26th, Chairman Jim Jordan subpoenaed the NSF for documents and information regarding its research projects to prevent and detect deepfakes and other inauthentic information sources. He issued this subpoena even though the directive originated from a 2019 Republican-championed law. Chairman Jordan has also targeted many of the academic researchers across the country who provide valuable research findings to the public and policymakers, such as the Stanford Internet Observatory, which led the investigation into CSAM's very questionable inclusion in AI training datasets. I'm proud that the Biden-Harris administration secured voluntary commitments from seven major tech companies, promising to work together with us and with government to ensure AI technologies are developed responsibly. But we know our work is not done. I urge my colleagues on both sides of the aisle to set aside partisan fishing expeditions and redirect our focus toward crafting bipartisan solutions to stop the creation and dissemination of harmful deepfakes. I look forward to the hearing today, and I yield back.

Rep. Nancy Mace (R-SC):

Thank you. I'm pleased now to introduce our witnesses for today's hearing. Our first witness is Mrs. Dorota Mani, a mother whose high school daughter was a victim of deepfake technology. We appreciate you being here today, Mrs. Mani, to share your and your daughter's experience and message with us. Our second witness is Mr. John Shehan, Senior Vice President of the Exploited Children Division and International Engagement at the National Center for Missing and Exploited Children. Our third witness is Mr. Carl Szabo, Vice President and General Counsel of NetChoice, and our fourth witness today is Dr. Ari Ezra Waldman, Professor of Law at the University of California, Irvine School of Law. We welcome you and are pleased to have you all here this afternoon. Pursuant to Committee Rule 9(g), the witnesses will please stand and raise your right hands. Do you solemnly swear or affirm that the testimony you're about to give is the truth, the whole truth, and nothing but the truth, so help you God? Let the record show the witnesses answered in the affirmative.

We appreciate all of you being here today and look forward to your testimony. Let me remind the witnesses that we have read your written statements, and they will appear in full in the hearing record. Please limit your oral statements to five minutes. As a reminder, please press the button on the microphone in front of you so that it is on and members up here can hear you when you begin to speak. The light in front of you will turn green. After four minutes, the light will turn yellow. When the red light comes on, your five minutes has expired, and we're going to ask you to wrap up, and I'll use the gavel nicely. So now I would like to recognize Mrs. Mani to please begin her opening statement.

Dorota Mani:

Thank you so much for having me here. On October 20th, 2023, a deeply troubling incident occurred involving my daughter, the Westfield High School administration, and students. It was confirmed that my daughter was one of several victims of the creation and distribution of AI deepfake nudes by her classmates. This event left her feeling helpless and powerless, a feeling intensified by the lack of accountability for the boys involved and the absence of protective laws, AI school policies, or even adherence to the school's own code of conduct and cyber harassment policies. Since that day, my daughter and I have been tirelessly advocating for the establishment of AI laws, the implementation of AI school policies, and the promotion of education regarding AI. Despite being told repeatedly that nothing could be done, we find ourselves addressing this esteemed committee today, highlighting the urgency and significance of this issue. Our advocacy has brought to light similar incidents from individuals across the globe, including Texas, DC, Washington, Wisconsin, Australia, London, Japan, Germany, Greece, Spain, Paris, and more, indicating a widespread and pressing concern.

We have identified several loopholes in the handling of AI-related incidents that demand attention from government bodies, educational institutions, and the media. However, our greatest disappointment lies in the school's handling of the situation, which we believe is indicative of a broader issue across all schools, given the ease and allure of creating AI-generated content. Here I wish to outline the mishandling of the situation by Westfield High School. One, the school inappropriately announced the names of the female AI victims over the intercom, compromising their privacy. The boys who were responsible for creating the nude photos were discreetly removed from the classroom. Their identities were protected. Only one boy was called over the intercom. When my daughter sought the support of a counselor during a meeting with the vice principal who was questioning her, her request was denied. The administration claimed the AI photographs were deleted without having seen them, offering no proof of their deletion.

My attempts to communicate with the administration about the case have been constantly ignored. A harassment, intimidation, and bullying report submitted in November of 2023 has yet to yield a conclusive outcome, which we should have received within 10 days of submission. The interviews carried out at the school with underage suspects in the presence of police, but without their parents, have made their statements inadmissible in court. Despite our submission of updated policies created by our lawyers at McCarter & English to the Westfield Board of Education, the school's cyber harassment policies and code of conduct remain outdated, referencing Walkmans, pagers, and beepers, with no mention of AI. To this day, the school's communications focus on only one boy involved, ignoring the others. The accountability imposed for creating the AI deepfake nudes without the girls' consent was a mere one-day suspension for only one boy. This incident and the school's response underscore the urgent need for updated policies and a more responsible approach to handling AI-generated content and cyber harassment at schools.

In light of the recent incident at Beverly Hills Middle School from this month, Superintendent Bregy not only released a statement that the school's investigation was nearly complete one week after the incident, but also took the crucial step of contacting Congress to emphasize the urgency of prioritizing the safety of children in the United States. And today I learned from The Guardian that he expelled five students. This proactive stance demonstrates a commendable commitment to facing uncomfortable truths head-on, with a focus on educating and advocating for essential changes in law and in how such incidents are handled. In contrast, my expectations for similar leadership and responsiveness from the principal at Westfield High School, Ms. Asfendis, have been met with disappointment. Given that the principal, like myself, is both a mother and an educator, I had hoped for a stronger stance in defending and supporting the girls at Westfield High School. Instead, there appears to be an effort to minimize the issue, hoping it'll simply pass and fade away. This approach is not only disheartening but also dangerous, as it fosters an environment where female students are left to feel victimized while male students escape necessary accountability. The discrepancy in handling such serious issues between schools like Beverly Hills and Westfield High is alarming and calls for immediate reevaluation and action to ensure all students are protected and supported equally in United States schools.

Rep. Nancy Mace (R-SC):

Thank you. I now recognize Mr. Shehan for his opening statement.

John Shehan:

Good afternoon, Chairwoman Mace, Ranking Member Connolly, and members of the subcommittee. My name is John Shehan, and I'm a Senior Vice President at the National Center for Missing and Exploited Children, also known as NCMEC. NCMEC is a private nonprofit organization created in 1984. Our mission is to help reunite families with missing children, to reduce child sexual exploitation, and to prevent child victimization. I'm honored to be here today to share NCMEC's perspective on the impact that generative artificial intelligence, also referred to as GAI, is having on child sexual exploitation. Even though GAI technology has been widely available to the public for just a short time, it is already challenging how we detect, prevent, and remove child sexual abuse material, also known as CSAM, from the internet. Today we are at a new juncture in the evolution of child sexual exploitation with the emergence of GAI platforms.

As you know, NCMEC operates the CyberTipline to receive reports related to suspected child sexual exploitation. The volume of CyberTipline reports is immense, and it increases every year. In 2023, NCMEC received more than 36 million reports related to child sexual exploitation. Last year was also the first year that NCMEC received reports, 4,700 in total, on content produced with GAI technology. While 4,700 GAI reports are dwarfed by the total number of reports NCMEC received, we are deeply concerned to see how offenders are already widely adopting GAI tools to exploit children. In the reports submitted to NCMEC, we have seen a range of exploitative abuses on these platforms, including offenders asking GAI platforms to pretend to be a child and engage in sexually explicit chat, and asking for instructions on how to groom, sexually abuse, torture, or even kill children. One user was reported to the CyberTipline for asking on a GAI platform, "How can I find a five-year-old little girl for sex? Tell me step-by-step." Individuals are also using GAI platforms to alter known CSAM images to include more graphic content, including bondage, or to create new CSAM with the faces of other children. They're also taking innocent photographs from children's social media accounts, just like you heard about, and using nudify or unclothe apps to create nude images of children to disseminate online. If these real examples from CyberTipline reports are not shocking enough, perhaps even more alarming is the use of GAI technology to create sexually explicit images of a child that are then used to financially sextort that child. It's also worth noting that more than 70% of the reports submitted to NCMEC's CyberTipline related to GAI CSAM were submitted by other platforms and not by the GAI platforms themselves. This reflects a significant concern that GAI platforms, aside from OpenAI, generally are not engaging in meaningful efforts to detect, report, or prevent child sexual exploitation.

NCMEC has additional concerns about the impact of GAI technology in its current unregulated state, including the increased volume of GAI reports that will strain NCMEC, ICAC, and federal law enforcement resources; the legal uncertainty about how federal and state criminal and civil laws apply to GAI content, including CSAM and sexually exploitative and nude images of children; and the complication of child victim identification efforts when a real child must be distinguished from GAI-produced child content. NCMEC has identified the following best practices and new protections that would help ensure we do not lose ground on child safety while the GAI industry continues to evolve. First, facilitating training of GAI models on CSAM imagery to ensure that the models do not generate CSAM, while at the same time ensuring that GAI models are not trained on open-source image sets that often contain CSAM. Second, considering liability for GAI platforms that facilitate the creation of CSAM. Third, ensuring federal and state criminal and civil laws apply to GAI CSAM and to sexually exploitative and nude images of children created by these tools. And finally, implementing prevention education in schools so children understand the dangers of using GAI technology to create nude or sexually explicit images of their classmates. In conclusion, I would like to thank you again for this opportunity to appear before the subcommittee to discuss the dangers that GAI technology in its current unregulated state presents to children online. NCMEC is eager to continue working with this subcommittee and other members of Congress to find solutions to the issues that I've shared with you today, and I look forward to your questions.

Rep. Nancy Mace (R-SC):

Thank you. I'll now recognize Mr. Szabo to please begin your opening statement.

Carl Szabo:

Thank you, Madam Chair, Ranking Member Connolly. My name is Carl Szabo. I'm Vice President and General Counsel of NetChoice. I'm also an adjunct professor at George Mason University's Antonin Scalia Law School.

The stories that I've heard so far are horrible and terrifying, and it enrages me as a father of two that a principal is more willing to side with the perpetrators of a bad action than with the victim. I think that's a little outside what I'm here to talk about, but fundamentally, we should support principals who enforce rules, not principals who try to escape responsibility. Just jumping in, I do want to disagree a little with my colleague over here. AI is heavily regulated today. It is heavily regulated today. Every law that applies offline applies online. So when it comes to harassment, we need to enforce harassment law. When it comes to fraud, we need to enforce fraud law. A good example: Sam Bankman-Fried went to prison not because of crypto, but because of fraud. So the notion that AI is some escape clause for criminals is, I think, incorrect, and we need more law enforcement and more prosecution of bad actors.

A simple example, and I outlined this in my testimony: there's a famous situation from the past couple of months where President Biden, up in New Hampshire, allegedly sent out a bunch of robocalls saying he was dropping out of the race. They used AI to generate the robocalls. Well, it turns out that Pindrop, a company that detects AI-generated content, detected it, identified that it was created with ElevenLabs, and contacted them. Law enforcement then got the name of the perpetrator from ElevenLabs and arrested him, and they arrested him not under any new law; New Hampshire law, for example, makes it a crime to engage in such fraud. The Telephone Consumer Protection Act, the TCPA, makes it illegal. We have federal laws with prison sentences of up to 20 years for such criminal activity. So I don't care if you use a robot or you do it yourself or you get an impersonator from Saturday Night Live.

Fraud is fraud, and we need to be willing to prosecute it. But that's not to say that there aren't gaps in the law. I think you are correct that when it comes to things like child sexual abuse material, there are existing gaps in the law, and we've been working at NetChoice with lawmakers across the country to close those gaps. Under existing CSAM law, you actually require an actual photo, a real photograph of child sexual abuse, to prosecute. So bad actors are taking photographs of minors, using AI to modify them into sexually compromising positions, and then escaping the letter of the law, not the purpose of the law, but the letter of the law. So this is an example where legislation before Congress, which Chairwoman Mace and many others have introduced, can help fill those gaps and make sure that bad actors go to prison.

Looking to the issue of nonconsensual deepfakes, this is something we are also working on with state lawmakers across the country to make sure that we enact laws. One of the things that we did at NetChoice: we sat down and looked at First Amendment law. The last thing we want to do is create a law that doesn't hold up in court. We don't want a criminal to get prosecuted and then have a get-out-of-jail-free card because we didn't artfully address some of the constitutional challenges. So when we sat down and drafted some of our proposed recommendations, which are included at the back end of our testimony, we identified the constitutional issues and then filled in those gaps. Finally, when it comes to artificial intelligence, deepfakes, anything like that, we need to make sure we get the definitions correct. One of the challenges we are seeing across the country is that many states have introduced legislation that is well-intentioned, but unfortunately their definition of artificial intelligence is written so broadly it would apply to a calculator or a refrigerator. And so when we are drafting definitions and writing legislation, we need to make sure that we hit the target directly. Otherwise we risk creating a law that is unconstitutional, and an unconstitutional law will protect no Americans.

Just to close out, the last thing that I'll chime in on, and I'm happy to answer your questions about what's going on at the state level and the challenges we can address: legislation must come from the legislative branch of government. One of the things that truly scares me is when we see executive overreach try to seize control of certain sectors of the government. The fundamental problem with what we've seen in the latest executive order on AI, as well-intentioned as it may or may not be, is that it will violate the major questions doctrine. So once again, unconstitutional laws will protect no one. Laws must be written by the legislature and enforced by the executive branch. And to that end, I fully welcome the opportunity to work with this legislature on creating laws to protect everyone from AI deepfakes.

Rep. Nancy Mace (R-SC):

Thank you. I'll now recognize Dr. Waldman to begin your opening statement.

Ari Ezra Waldman:

Thank you, Chairwoman Mace, Ranking Member Connolly, and members of the subcommittee, for the opportunity to provide testimony about the dangers of and possible responses to deepfakes here today. My name is Ari Waldman, and I'm a Professor of Law at the University of California, Irvine, where I research, among other things, the impact of new technologies on marginalized populations. Given my commitment to these issues and my own personal experience with image-based abuse, I also sit on the board of directors of the Cyber Civil Rights Initiative, or CCRI, the leading nonprofit organization dedicated to combating image-based sexual abuse and other technology-facilitated harms. Although I sit on the board of CCRI, I'm here in my own capacity as an academic and as a researcher. As we've already heard, AI means that, in this context, we have a proliferation problem in which images, fake images, and synthetic images and videos of anyone, whether it's Taylor Swift or a young teenage girl, can be sent throughout the internet within moments.

Technology, of course, didn't create this problem, but it certainly made the problem bigger, harder to identify and dismiss, and vastly more common. But common does not mean that the harm is evenly distributed. Deepfakes cause unique harms that are disproportionately experienced by women, particularly those who are intersectionally marginalized, like black women and trans women. So much of the history of modern technology begins with men wanting to objectify and sexualize women. It's no wonder that recent advances in deep learning technology are reflecting our cultural and institutional biases against women. Even here, the story Mrs. Mani tells us about how a school ended up inappropriately putting the female victim at risk reminds me of how so many schools approach the harassment of women, of trans women, of black women, and of queer folk generally. The people who create, solicit, and distribute deepfake porn of women and girls have many motives, but what they all have in common is a refusal to see their victims as full and equal persons.

Like other forms of sexual exploitation, deepfake porn is used to punish, silence, and humiliate mostly women, pushing them out of the public sphere and away from positions of power and influence. Let's be clear: this isn't mere speech. This isn't protected by the First Amendment. The harm caused by artificial non-consensual pornography is virtually indistinguishable from the harm caused by actual non-consensual pornography: extreme psychological distress that can lead to self-harm and suicide; physical endangerment, including in-person stalking and harassment; and financial, professional, and reputational ruin. New deepfake porn apps and web services launch every month, and platforms don't seem willing to do anything about them. These services produce thousands of images every week, and those images are shared on websites that Google and other platforms list, prominently, in their results. And as we know, deepfakes go viral, even for someone as famous as Taylor Swift. It's always the last bastion of those who oppose a regulatory agenda to say that we just need to enforce current laws and don't need any new ones, but we already have many examples of current laws not working. Simply enforcing the laws that we have is insufficient.

Although most of us around the world relate to Taylor Swift's music, almost none of us have the same resources at our disposal as she does. If digital forgeries get out there, we are often powerless. That isn't just because we can't all afford lawyers, nor is it just because we don't have lawmakers or platforms listening to us. It's because, just like with real non-consensual pornography, it's extremely difficult to mitigate the harm of deepfakes after the fact. This means we need deterrence. We need to stop this, particularly non-consensual deepfake pornography, before it starts, and that's where Congress can step in. The First Amendment does not stand in the way of Congress acting. There's long-standing precedent in First Amendment law for regulating false, harmful expression that is perceived by others to be true.

While false expression that is clearly not intended or likely to be mistaken for real depictions of individuals, such as parody or satire, enjoys considerable First Amendment protection, there's nothing about defamation and fraud that has historically been considered protected by the First Amendment. So I'm not sure what the deep, difficult conversation is here about trying to pass a law that survives First Amendment scrutiny, because nothing that we're talking about here is protected by the First Amendment. There are criminal prohibitions against impersonation and against counterfeiting and forgery, and these have never raised serious constitutional concerns. I would argue that the intentional distribution of sexually explicit, photorealistic visual material that appears to depict an actual, identifiable individual without that individual's consent should be prohibited. Civil penalties are a step toward deterrence, but they are insufficient. Deepfakes offer a liar's dividend. As the legal scholars Danielle Citron and Bobby Chesney have argued, in a world where we can't tell the difference between true and false, those who are lying have a leg up. Thank you.

Rep. Nancy Mace (R-SC):

Thank you all. I'll now recognize myself for five minutes for questioning, and to piggyback on Dr. Waldman, that Taylor Swift video got 45 million views before it was ever taken down. And there are people today who don't know that it was a deepfake and probably think it was real, because they don't know the difference and didn't know it was taken down because it was a deepfake. So I appreciate everyone's points today. My first questions will go to Mrs. Mani. And first of all, I just want to say, as a mom of a 14-year-old girl, it's horrifying to know what your daughter went through and the fact that they released the names. I didn't have that detail, but it really pains me to hear that. I was raped at the age of 16 by a classmate of mine in high school. I dropped out of school shortly thereafter, and I can only imagine as a mom what my mom felt at the time.

It's a deeply painful experience, and I'm really sorry that it happened to you. And for any woman or young girl who's gone through this, I hate what they have felt and the shame that they've gone through. And on that point, when George Stephanopoulos rape-shamed me on Sunday on ABC News this week, I want to make sure that no woman or girl is ever treated that way. And I hope that, for your daughter, we can put a stop to that. So my first question to you: in your written testimony about what was done to your daughter, you state this event left her feeling helpless and powerless. As a mom, can you talk to us a little bit about what this has done to your family?

Dorota Mani:

Yes. So I probably will not share what you want to hear, but the moment Francesca was informed by her counselor and her vice principal that she was one of the AI victims, she did feel helpless and powerless. And then she walked out of the office and noticed a group of boys making fun of a group of girls who were very emotional in the hallway. And in that second, she turned from sad to mad. And now, because of you, all of you, we feel very empowered, because I think you guys are listening, and just like you pointed out, you're a father. I think we are all human beings. We all have children, and we all have brothers and sisters that we want to protect, and we should sit down together and figure out a way to fix it. And I am so sorry that Mr. Stephanopoulos shamed you. I think that's the narrative that must change in media. Besides being upsetting, it's just irresponsible and dangerous. The narrative needs to be changed. Instead of talking about girls and how they feel as victims, we should be talking about the boys and how they are being empowered by the people in power, especially in my case in education, by being left unaccountable and walking the hallways with the girls. My daughter doesn't mind. I do.

Rep. Nancy Mace (R-SC):

Right. And I want to thank you and your daughter for your advocacy too. Your voice is very important. This is so early on in terms of the technology and what laws we're looking at at the federal and the state level. It's very important to hear the voices of moms and dads and parents and the kids who've been affected, quite frankly, because those voices have to be a part of the conversation. And I hope that your advocacy will help change the policies, not just at her school, but at every school. So we really admire and appreciate you being here today. I have less than two minutes, and while I have you, Mr. Szabo, I did want to talk about legislation and policy, because I am very, very, very, very tuned into this, one, as a victim of sexual trauma and assault, and then seeing the things that I have seen, especially over the last couple of months: the advent of technology, non-consensual pornographic images and videos, et cetera, and digital forgeries at the federal and the state level. At the state level, how many states have updated their laws so far?

Carl Szabo:

So right now we've been working with states across the country. Wisconsin is about to enact the two recommended pieces--

Rep. Nancy Mace (R-SC):

Are they the first?

Carl Szabo:

I don't want to say they're the first, but they're definitely one of the leaders on this. They're going to actually enact both the recommended Stop Deepfake CSAM Act as well as the Stop Non-consensual Artificially Generated Images Act. California right now, we are working with lawmakers out there to make sure that their introduced legislation doesn't get thrown out by a court when a bad actor gets arrested. And so we are seeing many states across the country start to adopt this. To your home state of South Carolina. I would love to see that--

Rep. Nancy Mace (R-SC):

That's what I was going to bring up before--I have 40 seconds left--is to talk to you about South Carolina's laws, and I'm going to look up impersonations and forgeries. I'm not quite as familiar with state law; obviously that's not my jurisdiction. But when I looked at what was going on, when I found out about these women in my district who had been recorded without their knowledge or consent, I looked at state law. State law 16-17-470 is under the Peeping Tom/voyeurism laws. A $500 fine and up to three years in jail for the first offense is offensive. It's not a felony until the second offense, and it clearly isn't enough. But there is nothing in there that would include, I believe, deepfakes. So I would love to talk to you about that, and actually even work with our state legislature. I know our state legislature is working on revenge porn laws, but I also want to strengthen state law with regard to non-consensual images and videos. So I'd love to talk to you afterwards. So thank you. I'm going to yield to my colleague from Virginia, Mr. Connolly.

Rep. Gerald Connolly (D-VA):

Thank you, Mr. Shehan. I just want you to know you couldn't pay me a million dollars a month to have your job. I cannot imagine dealing every day with violence and abuse against children. It's horrifying and very hard to listen to or contemplate, and I salute you for doing what you're doing and protecting children. Dr. Waldman, well, it's almost St. Patrick's Day. A little leprechaun on my shoulder. You will forgive this question, but are deepfakes mentioned in the Constitution of the United States?

Ari Ezra Waldman:

No.

Rep. Gerald Connolly (D-VA):

No. So according to [Justice] Samuel Alito's logic, we have no ability to regulate deepfakes because they're not mentioned in the Constitution. Isn't that kind of what he did in the Dobbs decision with respect to abortion?

Ari Ezra Waldman:

Yeah. There's no history and tradition of regulating deepfake technology under his theory of interpretation.

Rep. Gerald Connolly (D-VA):

Thank you. So much for originalism. Of course, we saw a lot of originalism with respect to the 14th Amendment just recently. I think it's kind of out the window, the Antonin Scalia Law School being named after an originalist notwithstanding. Mr. Szabo, if I understood him correctly, was suggesting we don't really need a lot of new laws. There are some gaps, but how about enforcing what we've got at both the state level and the federal level? What's your sense of that? I'm addressing you, Dr. Waldman. I mean, Congress is all about passing laws. Do we need to pass more laws? Are there in fact some significant gaps that allow malign things to happen that we could perhaps prevent?

Ari Ezra Waldman:

Especially in this situation. We've seen it before with non-consensual pornography. We needed new laws on the books because existing defamation and tort law had too many gaps, especially when victims originally allowed the image or video to be taken in the context of a consensual relationship, but it was then distributed without their consent. So we needed new laws, and we've seen some progress. My colleague Mary Anne Franks at GW Law School has been a leader in working with legislatures to pass legislation all across the country. That's just one example. There are so many gaps here as well.

Rep. Gerald Connolly (D-VA):

I invite you to provide us a list of those gaps because we'd be glad to work on filling those legislative gaps. I know my colleague and friend, Mr. Raskin has to go to another committee. I yield the balance of my time to Mr. Raskin.

Rep. Jamie Raskin (D-MD):

Well, thank you very much, Mr. Connolly. I just had one question for Mr. Waldman. Can you tell us what is the experience of deepfake regulatory legislation in the states and have they survived First Amendment attack and what is the best model for creating a statute to deal with the problem?

Ari Ezra Waldman:

So we don't have a lot of examples of state legislation focusing specifically on deepfakes, but we have examples of state legislation focusing on non-consensual pornography that has been challenged on First Amendment grounds and upheld. Minnesota is a really good example. The state supreme courts in Minnesota and Illinois handed down excellent decisions saying, just as I discussed in my written testimony, that this kind of non-consensual, harmful activity has never been protected by the First Amendment. And Congressman Connolly was talking about history and tradition. Here we have an example. There's no history and tradition of allowing this type of content, this type of behavior, to be protected by the First Amendment.

Rep. Jamie Raskin (D-MD):

Thank you very much. I yield back and thank you Mr. Connolly.

Rep. Gerald Connolly (D-VA):

Thank you, Mr. Raskin. Mr. Szabo, I represent George Mason University, though I would never have named the law school the way it got named; it's a public university. But I invite you to do the same thing I've invited Dr. Waldman to do: where there are gaps, or for that matter where there are enforcement issues, please alert us. You may want to comment.

Carl Szabo:

Yes, thank you. So first of all, with respect to the gaps, I highly suggest we take a look at making sure that our laws clearly address AI; deepfake CSAM is one such example. The other one is law enforcement. There's a group called Stop Child Predators that put out a report recently: 99% of reports of child sexual abuse material don't even get investigated. Yeah, I know. Only 1% of reports of child sexual abuse material get investigated, and that's a lack of resources. So there's currently legislation on both the House and Senate side, from both parties, called the Invest in Child Safety Act, which would give law enforcement more tools to put bad actors behind bars. And that's something I suggest taking a look at as well.

Rep. Gerald Connolly (D-VA):

And I would just say in closing, when I was chairman of Fairfax County, we had a police unit that looked at child predation and sex trafficking and crimes against children. But that was 15 years ago. And what's happened with technology is it's just exploded. And often I think it just goes beyond the resources of local law enforcement to monitor, let alone enforce. So I think that's something we're going to look at in terms of how we can find better ways of addressing the issue at that level. Thank you so much.

Rep. Nancy Mace (R-SC):

Thank you. I'll now recognize Mr. Timmons for five minutes.

Rep. William Timmons (R-SC):

Thank you, Madam Chair. Mr. Szabo, you said that we don't really need a lot of new laws. And Dr. Waldman, you've taken a pretty different stance on that. It seems to me that there are indeed holes and gaps, whether it's revenge porn, non-consensual porn, or child porn. I think, Mr. Szabo, you would agree that we do need to address that. There are just a lot of gray areas surrounding causes of action and ways to be made whole, whether it's using civil law to extract financial benefits or criminal law in certain [cases]. But you would agree that we do need to address the holes as they relate to those areas?

Carl Szabo:

A hundred percent. I mean, you have laws like FCRA and HIPAA, all these laws. Rohit Chopra, the director of the CFPB [Consumer Financial Protection Bureau], and I probably agree on not much, but even he recognizes that you cannot hide behind a computer, because existing laws apply. But here we do have gaps that need to be filled.

Rep. William Timmons (R-SC):

So I guess to that, are you familiar with the Coalition for Content Provenance and Authenticity that Adobe has founded?

Carl Szabo:

Yes.

Rep. William Timmons (R-SC):

Okay. So it seems that one of the big problems is that anybody can use the internet and create deepfakes of any kind, and there's no way of knowing who created it. And that's a big challenge.

Carl Szabo:

Exactly. So what we need to do is better identify the perpetrators. I completely agree that it is a challenge, but you can reverse engineer. You can look at IP addresses. That's kind of what happened with the Biden deepfake call. Once they--

Rep. William Timmons (R-SC):

I wanted to go to that. So they were able to charge them because it was fraudulent, in that he wasn't actually pulling out of the race, and there are all kinds of laws associated with that. What if instead--would it be illegal if the same individual, instead of saying that the president was pulling out of the race, made dozens of videos of him falling up stairs or stammering or stuttering? Those would have equally adverse impacts on a campaign. What law would apply if somebody made a video of him falling into Marine One or falling out of Marine One? I mean, is that illegal?

Carl Szabo:

So it is a complex--

Rep. William Timmons (R-SC):

The answer is no. I don't think it is.

Carl Szabo:

"It depends" is kind of the problem, because you have the public figure doctrine, you have satire. There's a lot that goes into that. States have tried to address this by requiring campaign videos that use altered images to have a disclosure. But the challenge there, again, is in the definition. If a politician were standing in front of a green screen, that would be defined as an altered image, and all of a sudden you have to put at the bottom of your campaign ad, "There are fake images in this campaign ad."

Rep. William Timmons (R-SC):

Again, there are so many different, bizarre media outlets. I mean, you couldn't put a fake video of the president falling on television, and again, there are many such videos that are not fake, because it gets vetted through legal. You couldn't theoretically run a fabricated ad on the radio, because the radio station has liability if it releases an ad that's fake. But again, the internet is such a wide area of media consumption that none of these laws really have any enforcement mechanism. So how would you address a deepfake that would be detrimental to someone's political campaign or life, short of non-consensual pornography, but still equally bad? We don't have laws for that.

Carl Szabo:

Well, so you could bring an action under existing tort law for defamation of character or misappropriation.

Rep. William Timmons (R-SC):

Why is it defamation if you're falling over?

Carl Szabo:

Because if it's not a real image, it is a--

Rep. William Timmons (R-SC):

What if I fell over in a different image?

Carl Szabo:

So I was going to say it--

Rep. William Timmons (R-SC):

Truth again. Truth is the ultimate defense to defamation.

Carl Szabo:

When it comes to non-consensual disclosures, for example, you have the Hulk Hogan versus Gawker example that played out under existing privacy laws. So there's potential. There are a lot of laws out there that can be enforced today. And to the extent that we do find gaps, we need to make sure that when we fill them, that we do so in a constitutional way.

Rep. William Timmons (R-SC):

I agree with you on that, and I think one thing that we're not talking about is the disparity in resources. Dr. Waldman, you touched on this. Taylor Swift has unlimited resources. She can sue whoever she wants. If a situation similar to Ms. Mani's happened, you'd be able to file, technically, under the VAWA civil cause of action; you could probably allege that it was non-consensual pornography. But it would cost tens of thousands of dollars. I like loser pays across the board, but could we look into some sort of loser-pays funding mechanism to address civil causes of action for revenge porn, non-consensual porn, all of these things? Dr. Waldman, is that something?

Ari Ezra Waldman:

Yeah, absolutely. I believe Chairwoman Mace's proposal includes a fee-shifting provision for civil damages, so that if it is found to be indeed non-consensual deepfake pornography, the perpetrator would have to pay. But even Taylor Swift still has the problem that those images and videos are still out there. And even she can't--

Rep. William Timmons (R-SC):

It also goes back to the provenance. How do you know who did it? And anyways, okay, I'm over. Thank you. I yield back.

Rep. Nancy Mace (R-SC):

Thank you. Now I'll recognize Ms. Pressley for five minutes.

Rep. Ayanna Pressley (D-MA):

Thank you to our witnesses for being here today, including Ms. Mani. As a survivor of intrafamily childhood sexual abuse myself, I must say I really do look forward to a day when families and children do not have to weaponize and relive their trauma in order to compel action from their government. But I'm grateful for those who do it time and time again. Frederick Douglass once said, "It is easier to build strong children than to repair broken men and women." That is why, in the 116th Congress, as a freshman member serving on the Oversight Committee, I convened the first-ever hearing on childhood trauma in the history of this committee. Children across the country and in my district, the Massachusetts Seventh, are facing layered crises, shouldering an unprecedented emotional burden from challenges in their homes, in their classrooms, and now more than ever online. Our young girls, our young girls, and we must see them as all of our children. Our girls are targeted and victimized the most. Just last year, the CDC released a report that teenage girls are experiencing record-high levels of violence, sadness, and suicidal ideation. The trauma backpacks that they carry across the thresholds into our schools every day only grow heavier. I ask unanimous consent to enter this Youth Risk Behavior Survey into the record.

Rep. Nancy Mace (R-SC):

Without objection.

Rep. Ayanna Pressley (D-MA):

As a black woman who was once a black young girl, I know intimately what it is for your body to be criminalized, your hair to be criminalized, for your body to be banned, objectified, and, as a survivor, violated. And as this report makes plain and this hearing has confirmed, our girls are being traumatized. I worry for my 15-year-old daughter, who will think that it is normal, a conflated part of her identity as a girl or a woman in this country, to experience these indignities and these violations. Professor Waldman, in what ways does non-consensual deepfake pornography contribute to the growing crises of childhood trauma?

Ari Ezra Waldman:

I need more than two and a half minutes to describe all those ways. But very briefly, non-consensual deepfake pornography does more than non-consensual pornography. Not only does it objectify someone and put them at risk, leaving them always looking over their shoulder at every image and every social encounter they engage in, which deters them from engaging with other people, which is necessary at any age. It also allows this to happen even if you don't have any images out there, because these images can be created with a simple instruction to an AI generator. Essentially, what it does is create perpetual trauma and a perpetual risk of trauma.

Rep. Ayanna Pressley (D-MA):

Thank you. Further, a traumatized child certainly has a decreased readiness to learn. Advances in AI have made it easier for people to create sexual content that intimidates, degrades, dehumanizes, and traumatizes the victims. And this technology is becoming more present in our K-12 schools. Ms. Mani, can you describe the psychological damage to teenagers who are victims of this type of harassment? And once again, thank you for the courage you and your daughter continue to display in the face of these reprehensible acts.

Rep. Nancy Mace (R-SC):

Turn on your microphone or speak into it.

Dorota Mani:

So I'm not really trained to talk about the repercussions of those images. All I can tell you is that we are not the majority. We took a stand and my daughter took back her dignity, but not many girls can be in the same position because of multiple layers and factors. Most importantly, it's a shame that in 2024 we are still talking about consent. Consent in regard to our bodies should be taught, and our girls are not empowered but rather are falling through the cracks because of the educational system's laws. Laws: do they have to be put in place? A hundred percent. School policies? A hundred percent. And then we all should sit down and figure out ways to make it better, without pointing fingers, but rather because it's ethical and the right thing to do.

Rep. Ayanna Pressley (D-MA):

Absolutely. I think we should start with trauma-informed schools. Thank you a hundred percent.

Rep. Nancy Mace (R-SC):

I'll now recognize Mr. Langworthy for five minutes.

Rep. Nick Langworthy (R-NY):

Thank you, Chairwoman Mace. It seems now every single week that goes by, we see another story about a bad actor using AI unethically. And while I strongly support innovation and will always work to make sure that this country does not lose its edge to China in the AI race, I think that we all must hold accountable unethical creators, criminal actors, and especially those who are creating child pornography and child sexual abuse material. Emerging technology should always be used in ethical ways, and tech companies alongside Congress need to ensure that this happens. That's why I'm very proud to be working on legislation with attorneys general from all 50 states and four territories that would create a commission examining generative AI safeguards, assessing current statutes, and recommending legislative revisions to enhance law enforcement's ability to prosecute AI-related child exploitation crimes. And I'd like to enter into the record a letter signed by 54 attorneys general calling for this commission-based approach.

Rep. Nancy Mace (R-SC):

Without objection.

Rep. Nick Langworthy (R-NY):

I want to start today by talking about law enforcement's approach to generative AI. Mr. Shehan, how is law enforcement reacting to the uptick in AI-generated child sexual abuse material, or CSAM? Has that approach been reactive, as in waiting for images to circulate, or are there ways law enforcement can be more proactive?

John Shehan:

Excellent question. In a lot of these scenarios, the response is reactive because, as I outlined earlier, many of the generative AI technology companies are not taking proactive measures to identify and stop the creation of that material at the onset. It's often after the content has already made its way into the wild that social media companies and the like find these types of material and report them to our CyberTipline, and we in turn provide that information to the Internet Crimes Against Children (ICAC) task force members who are actively investigating these cases. One quick example: in the fourth quarter of last year, we had a report come through. It was made by Facebook regarding an adult male who was talking through Messenger to a minor, using Stable Diffusion to create child sexual abuse content and send it to the minor. It was detected and reported, and the Wisconsin ICAC investigated that case. They found out that not only was he creating content, he also possessed child sexual abuse material, and through the forensic interviews they also realized he was abusing his five-year-old son. State and local law enforcement are having to deal with these issues because the technology companies are not taking the steps on the front end to build these tools with safety by design. We're getting this content out into the wild far too early, and something has to be done about this.

Rep. Nick Langworthy (R-NY):

It's chilling. Thank you. I'd like to point out that the sheer volume of cyber tips has oftentimes prevented law enforcement from pursuing proactive investigation efforts that would efficiently target the most egregious offenders. In only a three-month period, from November 1st, 2022 to February 1st, 2023, there were over 99,000 IP addresses throughout the United States that distributed known CSAM, and only 782 were investigated. Currently, through no fault of its own, law enforcement just doesn't have the ability to investigate and prosecute the overwhelming number of these cases. Mr. Szabo, there have been several bills introduced in Congress to adjust the current legal framework to protect those exploited by generative AI, and even more that look to combat deepfakes all at once. While many of them are well-intentioned, my concern is that the Department of Justice has not had much success in prosecuting a number of these cases because of the fine line that needs to be walked with First Amendment rights. So I wanted to ask you, what are the biggest gaps in the current legal framework that need to be filled?

Carl Szabo:

So there's a case called Ashcroft v. Free Speech Coalition, and basically what it got into is an overly broad law, well-intentioned to prohibit these types of activities, but it applied to fake images with no actual victims, and the US Supreme Court shot that down. They said it's a violation of the First Amendment. So one of the gaps is addressed by the type of legislation that we've been talking about here, whether it is the Chairwoman's legislation or some of the other bills that have been proposed from all sides of the aisle, to fill that gap and make crystal clear that AI-created content, if it has the image of an actual or identifiable child, is CSAM, as opposed to the way the laws are currently written, which requires an actual photo. So we are seeing, time and time again, bad actors escaping justice. At the same time, the Invest in Child Safety Act, I think, is a really important one to give law enforcement the tools it needs. One other thing to address is that groups like NCMEC are taking on tons of information but not necessarily having enough time to process it, and they have a mandatory deletion time for content. So giving them a bit more time to process and prosecute the content and tips that they receive would be helpful as well.

Rep. Nick Langworthy (R-NY):

Thank you very much and I'm out of time. Thank you Chairwoman for having this hearing and I yield back.

Rep. Nancy Mace (R-SC):

Thank you. I'll now recognize Mr. Garcia for five minutes.

Rep. Robert Garcia (D-CA):

Thank you very much, Madam Chair, and thank you for allowing me to waive onto the committee today. I want to thank all of our witnesses, particularly Ms. Mani. My heart goes out to you and everything that obviously you've experienced. I also want to note a couple of other cases I think are important. A few weeks ago, fake images were circulating online that put real students' faces on artificially generated nude bodies at Beverly Vista Middle School in Beverly Hills. We're talking about middle school students. We know that that's completely predatory and unacceptable. I know a lot of examples have been discussed today. Also, just weeks ago, AI-generated pornographic deepfake images of Taylor Swift were viewed more than 45 million times. Media investigations showed how easy it was to get around AI software guardrails to produce these images and how platforms struggled to prevent people from sharing them.

Rep. Nancy Mace (R-SC):

Will the gentleman yield for one second? We need to waive you on. I ask unanimous consent to have Representative Garcia from California waived on to the subcommittee for today's hearing, and without objection, so ordered.

Rep. Robert Garcia (D-CA):

Thank you. Now I'd like to ask unanimous consent to introduce this article entitled "Taylor Swift deepfake debacle was preventable." And these are all really serious issues. Now, fortunately, someone like Taylor Swift had millions of fans who came out to defend her and protect her online. They flooded social media with junk posts to bury the abusive content. The phrase 'Protect Taylor Swift' appeared in over 36,000 posts that were shared. But we know that Taylor Swift fans and Swifties can't protect everyone, and certainly not people who don't have that platform. And so Congress has a responsibility to act. Now, deepfake pornography accounts for 98% of deepfake videos online, and 99% of all deepfake porn features women while only 1% features men. A 2019 study found that 96% of all deepfake videos were non-consensual pornography. And it doesn't matter, of course, whether you're a billionaire, one of the most powerful women on earth, whether you're Taylor Swift or a middle school student: deepfake pornography and the manipulation of images is deeply troubling and predatory, particularly to women and girls across this country. Now we know that deepfake images can also be used to intimidate, harass, and victimize people, and oftentimes when you're targeted, there's nowhere to turn. And Ms. Mani, I know in your situation you received little to no support, and I just wanted to ask you, did you feel you got any support from the actual school itself?

Dorota Mani:

I received zero support, and it is disappointing that I have to sit here fighting for the girls of Westfield and other girls across the United States because my school didn't have the balls to do so. In contrast, as you mentioned, in the Beverly Hills incident the principal or superintendent withdrew the boys from the school and completed an HIB investigation within 10 days. He did the right thing. Was it an easy choice? No, but it was the right thing, and I think our girls should not be solo gladiators fighting for their rights. It's shameful that in 2024 we still need to fight for our rights.

Rep. Robert Garcia (D-CA):

That's exactly right. And I want to note that in the Beverly Hills middle school case, students were actually expelled. The principal acted quickly, and it's important to note that all young girls deserve equal protection. That also happens to be a school that's very well resourced, with parents who are constantly advocating. It's in Beverly Hills, but it shouldn't matter where the school is or what resources parents may have: every girl, every student, deserves protection at every single school, period. Professor Waldman, briefly, with the remainder of my time: we know that Russia, China, and other hostile actors are targeting our elections. We've seen deepfakes already used to do that, whether it's targeting President Biden or other elections that are happening as well. Can you explain how what's happening right now is undermining our election security as well as our national security?

Ari Ezra Waldman:

Sure. So I think it boils down to this: what deepfakes do, as I said during my testimony, is create a liar's dividend, which means that when anything could be false, everything is presumed to be false. So when we know that AI can be used by Russia and hostile countries to undermine our democracy, we start disbelieving everything. We don't just start disbelieving the things that are actually false. We then start allowing people to say, well, how do I know that it's true? It could be a deepfake. And when we disagree on even just the basic things, democracy ceases to work.

Rep. Robert Garcia (D-CA):

I appreciate that. Obviously deepfakes are oftentimes used for entertainment purposes. I mean, look, I've seen deepfakes in funny videos, things that can be entertaining, but it's also deeply troubling when it affects our elections and certainly when it's affecting people and young people in our country. Before I close, I just want to introduce a written statement into the record from Dr. Mary Anne Franks. Dr. Franks is the president and legislative and tech policy director of the Cyber Civil Rights Initiative and the Eugene L. and Barbara A. Bernard Professor in Intellectual Property, Technology, and Civil Rights Law at George Washington Law. So I'd like to just--

Rep. Nancy Mace (R-SC):

Without objection, so ordered.

Rep. Robert Garcia (D-CA):

Thank you and thank you all to our witnesses.

Rep. Nancy Mace (R-SC):

Alright, I'll now recognize Ms. Luna for five minutes.

Rep. Anna Paulina Luna (R-FL):

Chairwoman, if I could submit this poster into the record.

Rep. Nancy Mace (R-SC):

Without objection, so ordered.

Rep. Anna Paulina Luna (R-FL):

So there's been a lot of talk today about CSAM, but for those who might be tuning in who might not know what that is, it is the creation of child sexual abuse material from lifelike images of children. This situation has not only perpetuated the occurrence of child sexual exploitation in this country, but has also created a new legal question about how to effectively crack down on the practice to protect our children. And I bring this up because this was actually something that the FBI, when we were talking to them about cyber crimes, asked us to specifically look at, because they're currently having issues prosecuting these really, really gross, sick individuals, since technically a child is not hurt in the process because it is a generated image. According to recent reports, thousands of AI-generated child sex images have been found on forums across the dark web. Some of these forums have even been found to have instructions that detail how other pedophiles can create their own AI-generated sex images.

And I just want to point to the poster behind me. If you look after COVID in 2020, you see this spike, and this also, in my opinion, correlates with the rise and, I think, the evolution of AI getting better and better and better at generating these graphics and images. And you can see it's clearly not good for our kids. This says it increases the speed and scale at which pedophiles create new CSAM. One report explained that in the creation of new images, pedophiles superimpose the faces of children onto adult bodies using deepfakes and rapidly generate many images through one single command. The importance of raising awareness of this problem speaks for itself. In one study of an online forum with over 3,000 members, over 80% of respondents stated that they would use or intended to use AI to create child sexual abuse images. This is concerning for child safety and makes law enforcement efforts to find victims and combat real-world abuse much, much harder. My first question is for Mr. John Shehan. You previously stated that reports of CSAM on online platforms grew from 32 million in 2022 to 36 million in 2023. What factors do you think have contributed to this trend?

John Shehan:

That's an excellent question, and much of it is around just the global scale and the ability to create and disseminate child sexual abuse material. This is truly a global issue. Of the 36 million reports last year, more than 90% involved individuals outside the United States using US servers. But we're also seeing a massive increase in the number of reports that we're receiving regarding the enticement of children for sexual acts. In your chart there, in 2021 we had about 80,000 reports regarding the online enticement of children. Last year it jumped up to 180,000 reports, and we're not even through the first quarter of this year and we're already over a hundred thousand reports regarding the enticement of children. Many of these cases involve generative AI; others are financial sextortion. There are individuals in countries like Nigeria and the Ivory Coast, and it's all about the money. They're blackmailing young boys into creating a sexually explicit image, just that one image, and then they're after the money, significant amounts of money.

Rep. Anna Paulina Luna (R-FL):

Just out of curiosity, because we have legislation (I know that I'm co-sponsoring Representative Mace's legislation regarding deepfakes): regarding the number one platform where you're finding this being circulated, and I know there's been a question of how these online platforms moderate this content, what is the number one platform that you're finding is distributing this?

John Shehan:

Well, with generative AI and deepfakes, it's a difficult question, because some of the companies that are reporting the most are doing the most. I mentioned OpenAI earlier; they are setting the bar for what every single other generative AI company should be doing in this space. I also gave an example just a minute ago about Stable Diffusion, which is owned by Stability AI. They're not even registered to report to the CyberTipline. So we have a huge gap, with some of these providers enabling individuals to create child sexual abuse content while not even being set up to report. So it's difficult to name a top provider when there are so many that aren't even doing the bare minimum.

Rep. Anna Paulina Luna (R-FL):

So what would you say the bare minimums are?

John Shehan:

Well, certainly taking proactive steps: if someone's trying to use these tools to create or modify child sexual abuse material, or using text prompts to create it, they shouldn't be allowing that to happen. When we started off this session, Ranking Member Connolly mentioned the Stanford Internet Observatory research, which discovered that there was child sexual abuse material in the training data set given to the AI models to train on. How did that even happen? How is there child sexual abuse material in the content that they're training on? So there are so many things that we have to work backwards on to rectify the situation that we're in right now.

Rep. Anna Paulina Luna (R-FL):

Okay. Well, I know parents might be tuning in, so I'm just asking, and I'm sure you'd agree: maybe don't post pictures of your children on the web, because right now it's the Wild West out there and they can be exploited.

John Shehan:

There certainly are situations where even the benign photos, the clothed photos, as you heard earlier, are being run through these tools and turned into nude and pornographic content. So it is a troubling time to be posting content online, with some of these tools not built with safety by design.

Rep. Anna Paulina Luna (R-FL):

Thank you for your time. Chairwoman, I yield my time.

Rep. Nancy Mace (R-SC):

Okay, well now finally, Mr. Morelle, I recognize you, and thank you for being here this afternoon. Thank you for waiting so patiently.

Rep. Joe Morelle (D-NY):

Thank you, Madam Chair. And let me start by thanking you for holding this incredibly important hearing, and thank Ranking Member Connolly, both of you, for allowing me to participate in this conversation, as well as thanking our witnesses for sharing their perspectives on this growing and very, very dangerous issue. An issue that, as has been noted, overwhelmingly and disproportionately affects women. I also want to acknowledge some familiar faces on the witness panel. First of all, I want to thank Mrs. Mani, a mother, powerful advocate, and partner in the war to help prevent innocent people from being harmed by non-consensual deepfake images. I've had the pleasure of meeting both Dorota and her 14-year-old daughter Francesca several times, including here in Washington, where they both courageously participated in a conversation with myself and Congressman Tom Kean on this topic. I also want to thank the Cyber Civil Rights Initiative, represented here by Dr. Waldman, for their assistance in drafting my legislation, the Preventing Deepfakes of Intimate Images Act, which was originally introduced in 2022, long before anyone heard of what happened to Taylor Swift. We reintroduced the bill in May of 2023, and on this subcommittee alone, Members Connolly, Lynch, Langworthy, and Moskowitz are co-sponsors. As I listen and learn from our witness panel this afternoon, the need has been clearly demonstrated for a comprehensive, and what I hope will be a bipartisan, solution to address the unique pain caused by the distribution of non-consensual intimate deepfake images. As has been said, and I think it bears repeating, this is made so much easier today by advances in both generative AI and hardware, and by the capability of even laptops to do this. Years ago, you'd need to have some sophistication; nowadays, frankly, I think teenagers will be able to do it with very, very little training and very little time and energy.