Governing Access to Synthetic Media Detection Technology

Jonathan Stray, Aviv Ovadya, Claire Leibowicz, Sam Gregory / Sep 7, 2021

Tools to detect deepfakes and other synthetic media are beginning to be developed. Civil society organizations and journalists have expressed a need for access to those tools. Perversely, wide access to this technology may make it less effective, because it would also provide adversaries with important information. Detection access is the question of who has access to these tools and under what terms. It can trade off against detection utility, meaning how well the tools work. Detection equity is the degree to which access to tools, and the capacity to use them, is provided fairly so as to avoid perpetuating systemic inequalities.

Partnership on AI (PAI), in collaboration with WITNESS, recently hosted a workshop with roughly 50 organizations from civil society, academia, and industry to align on principles for releasing synthetic media detector tools. This builds upon our work investigating: 1) how we mitigate the spread and impact of harmful synthetic and manipulated media, and 2) how we strengthen opportunities for AI to help create content which is socially beneficial.

PAI is working to address these challenges through various work streams within the AI and Media Integrity Program. Following is a set of key considerations for those concerned with the problem of manipulated media and AI.

Access Questions

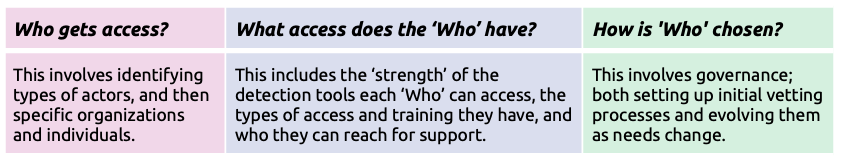

Three questions must be answered to define an access protocol:

1) Who gets access?

2) What access does the ‘Who’ have?

3) How is the ‘Who’ chosen?

The questions above must be addressed with an understanding of power structures, and in a way that avoids perpetuating systemic inequalities.

The Detection Dilemma

The more broadly accessible detection technology becomes, the more easily it can be circumvented. This is because one of the most effective ways to neutralize a detection system is to test altered images to see which ones it does not successfully detect. Recent adversarial AI research has demonstrated that sometimes altering only a few pixels can fool image classifiers. Although specific vulnerabilities can be fixed, detector evasion is also evolving. We will be in an arms race between synthetic media creation and detection for the foreseeable future.
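To make the dilemma concrete, here is a minimal sketch of why the number of queries an adversary can make matters. Everything in it is an assumption for illustration: `toy_detector` is a hypothetical stand-in (a simple threshold on mean pixel value), not a real deepfake detector, and `black_box_probe` is a deliberately naive search rather than any published attack.

```python
import random

def toy_detector(pixels):
    """Hypothetical stand-in detector (an assumption for illustration):
    flags an image as synthetic when its mean pixel value exceeds 0.5."""
    return sum(pixels) / len(pixels) > 0.5

def black_box_probe(pixels, detector, max_queries, step=0.05, seed=0):
    """Naive black-box probe: slightly darken one random pixel per query
    and stop as soon as the detector no longer flags the image.
    Returns (evading_pixels_or_None, queries_used)."""
    rng = random.Random(seed)
    candidate = list(pixels)
    for query in range(1, max_queries + 1):
        i = rng.randrange(len(candidate))
        candidate[i] = max(0.0, candidate[i] - step)
        # The only feedback used is the detector's yes/no answer.
        if not detector(candidate):
            return candidate, query
    return None, max_queries

fake_image = [0.6] * 16  # toy "synthetic" image that the detector flags

# With a tight query budget the probe fails; with unlimited use it succeeds.
limited, _ = black_box_probe(fake_image, toy_detector, max_queries=10)
evaded, used = black_box_probe(fake_image, toy_detector, max_queries=1000)
```

In this toy setting the probe cannot succeed within ten queries but does succeed once the budget is large, which is the intuition behind limiting or monitoring how often a detector can be queried.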

This means that detection access can counterintuitively decrease detection utility & equity, because the most widely available detectors may also be the most easily circumvented. This dilemma is also an operational security problem: even if detection technology is only provided to trusted organizations, it may make its way to malicious actors if it is not sufficiently secured. Software can be copied, and accounts can be hacked.

Most detection technology is either:

(1) Privately held, primarily by large companies, and used to protect their users, e.g. Facebook’s COVID misinformation detector,

(2) Available as open source software, typically developed by researchers, e.g. FaceForensics++, or

(3) A commercial product, which is available to almost any customer.

The technology sector is generally a detector creator while civil society is generally a detector user, and this creates a power imbalance both between sectors and between the Global North and South. Privately held tools are out of reach, while more broadly available tools can be more readily circumvented. This means that crucial ‘public benefit actors’ such as journalists, civil society organizations, and courts are less likely to have access to the most effective detection tools, and this access is unevenly distributed around the world. This is particularly true for more marginalized groups, who tend to lack good training in media forensics technology and often have no relationships with others who might have greater detection capacity.

Tools and Support

Providing access to detection requires more than access to tools. We believe it is possible to dramatically increase detection utility & equity by considering three dimensions of access:

Technical strength: Detection systems vary in ‘strength’ -- the type and percentage of manipulations they can detect -- due to algorithmic choices, the quality of training datasets, and public availability. Different levels of detection quality and access could be provided to different stakeholders, balancing equity and security concerns.

Support networks: Detection software must be supported by training, both specific technical training and integration with more general media forensics practices. There is also a need for organizations to run servers, maintain the software, and act as vetting organizations that indicate which other organizations are trusted to get access to tools and training (e.g. First Draft could help vet and train journalism stakeholders).

Escalation and aggregation: Explicit mechanisms can be provided which enable those with less access and training to request detection from those with more access and training. This provides detection outcomes for a much broader range of people, beyond those with relevant expertise and support.
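As a rough illustration of an escalation mechanism, the sketch below routes a detection request along a chain of partner organizations until it reaches one with detector access. The `Organization` type, its fields, and `route_request` are hypothetical names introduced for this example; real escalation pathways would also involve vetting, consent, and secure transfer of the media.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Organization:
    """Hypothetical record of an organization in a support network."""
    name: str
    has_detector_access: bool
    escalation_partner: Optional["Organization"] = None

def route_request(origin: Organization) -> Optional[List[str]]:
    """Follow escalation partners from `origin` until an organization
    with detector access is found. Returns the path of organization
    names, or None if no one on the chain has access."""
    path: List[str] = []
    seen = set()
    current = origin
    while current is not None and current.name not in seen:
        path.append(current.name)
        if current.has_detector_access:
            return path
        seen.add(current.name)  # guard against circular partnerships
        current = current.escalation_partner
    return None

# Example chain: a local newsroom escalates to a regional forensics hub.
hub = Organization("regional forensics hub", has_detector_access=True)
newsroom = Organization("local newsroom", False, escalation_partner=hub)
isolated = Organization("isolated group", False)
```

Here `route_request(newsroom)` yields the two-step path through the hub, while `route_request(isolated)` returns `None`, which corresponds to the gap that explicit escalation pathways are meant to close.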

Beyond the immediate challenges of detection, institutionalizing these approaches also supports resilience to many other problems. These approaches are also directly relevant to detecting CSAM (child sexual abuse material) and algorithmic text generation. Going forward, if there is a new tactic deployed by disinformation actors, or a new form of harassment that is growing in prevalence, the institutional capacity developed by these support networks, vetting organizations, and escalation pathways can help address it.

Levels of Access: Between Open and Closed

There are several different ways to provide access to detection technology, each with a particular set of governance and security implications. From most permissive to most restrictive, these include:

1) Complete access to source code, training data, and executable software. This provides unlimited use and the ability to construct better versions of the detector. In the case of unauthorized access, this would allow an adversary to easily determine the detector algorithm and potential blind spots which are not included in the training data.

2) Access to software that you can run on your own computer, e.g. a downloadable app. This provides vetted actors unlimited use. In the case of unauthorized access, such an app provides adversaries the opportunity for reverse engineering, and an unlimited number of “black box” attacks which attempt to create an undetectable fake image by testing many slight variations.

3) Detection as an open service. Several commercial deepfake detection services allow anyone to upload an image or video for analysis. This access can be monitored and revoked, but if it is not limited in some way it can be used repeatedly in “black box” fashion to determine how to circumvent that detector.

4) Detection as a secured service. In this case the server is managed by a security-minded organization, to which vetted actors are provided access. If an adversary were to gain access to an authorized user’s account, a suspicious volume or pattern of queries can be detected and the account suspended.

5) Detection on demand. In this case a person or organization which does not normally do digital forensics forwards (escalates) an item to an allied group which has one of the access types described above.

6) Developer access only.
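The monitoring described for a secured service (level 4) could look something like the following minimal sketch, which suspends an account once its query volume within a sliding time window exceeds a limit. The class name `QueryMonitor`, its parameters, and the thresholds are assumptions for illustration; a real service would likely also look at query patterns, not just volume.

```python
from collections import defaultdict, deque

class QueryMonitor:
    """Hypothetical per-account monitor for a secured detection service.
    Suspends an account that exceeds `max_queries` within any
    `window_seconds` sliding window."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # account -> recent query times
        self.suspended = set()

    def allow(self, account, now):
        """Record a query at time `now` (seconds) and return True if it
        may proceed, or False if the account is or becomes suspended."""
        if account in self.suspended:
            return False
        history = self._history[account]
        # Drop timestamps that have fallen out of the sliding window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        history.append(now)
        if len(history) > self.max_queries:
            self.suspended.add(account)
            return False
        return True

# Illustrative limits: at most 3 queries per 60 seconds.
monitor = QueryMonitor(max_queries=3, window_seconds=60)
burst = [monitor.allow("account-a", t) for t in (0, 1, 2, 3)]
spaced = [monitor.allow("account-b", t) for t in (0, 100, 200, 300)]
```

A burst of queries from one account trips the limit and suspends it, while the same number of queries spread over time from another account passes. Choosing the limit is a governance decision as much as a technical one, since a threshold that is too strict would also block legitimate high-volume users such as newsrooms during breaking events.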

Getting to Governance

One key challenge to realizing this more nuanced vision of controlled access is the question of who decides, and on what basis. This is a complex, global, multi-stakeholder governance problem. For this reason, we are conducting workshops to deliberate on the three central questions going forward: Who gets access? What access does the ‘Who’ have? How is the ‘Who’ chosen?

See here for a more detailed and concrete version of this document. The authors invite feedback or questions at aimedia@partnershiponai.org.

Authors