Follow the money: to rein in Big Tech, lawmakers are right to focus on business models

Ellery Roberts Biddle / Apr 6, 2021

Facebook and Google have made broad-based commitments to protect human rights and the public interest, but only to the extent that this won’t interfere with their ad-based profit models. Lawmakers are right to follow the money.

At the March 25th congressional hearing on disinformation, members of the House Committee on Energy and Commerce highlighted some of the more serious harms brought on by targeted advertising and content curation systems, including the January 6 attack on the U.S. Capitol and viral disinformation about COVID-19 vaccines. The CEOs of Facebook, Google, and Twitter deflected lawmakers’ questions about how their technologies actually work and drive profits. Instead, they touted their efforts to weed out disinformation and extremism with things like fact-checking labels and abuse-detecting algorithms.

These efforts are a sideshow, and members of Congress finally seem to understand this. The real problem at hand, as our research group has argued for some time, was succinctly described by Committee Chairman Frank Pallone: “it’s the business model.” Companies are choosing profit over the public interest and deliberately concealing how they build their algorithmically driven ad systems. This is not just about trade secrets or bad actors. It is about their fundamental goal: growth.

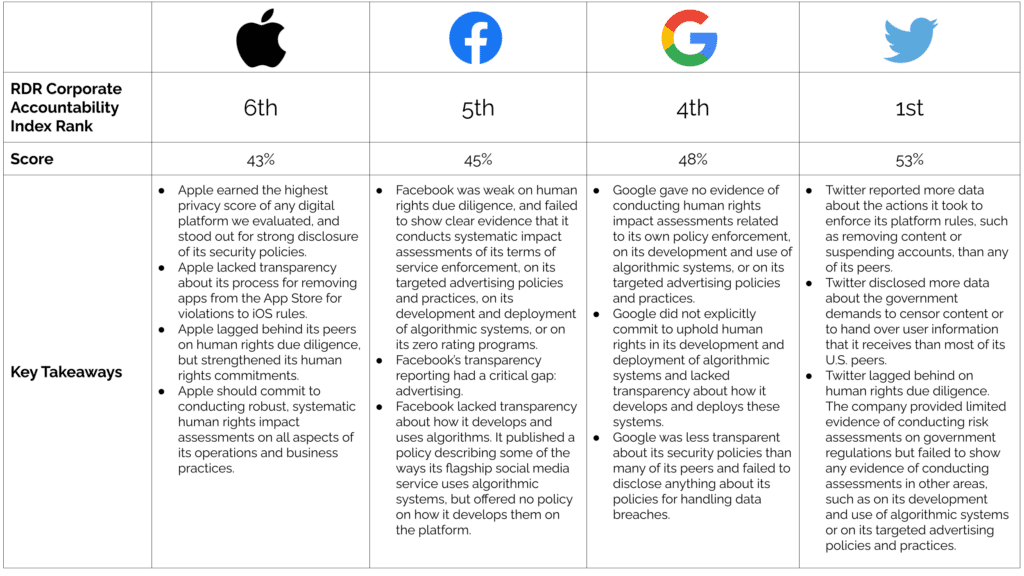

Our team at Ranking Digital Rights studies the public-facing policies of the world’s most powerful tech platforms and evaluates them against human rights-based standards, covering issues like freedom of expression, privacy, and discrimination. Google and Facebook stood out in our most recent evaluations because both companies have made broad-based commitments to respect users’ rights. And both seem determined to keep their algorithmically driven ad targeting and data inference systems under lock and key. Twitter has not made such sweeping commitments, nor does it offer as many services or earn as much revenue as the other two firms.

Facebook’s new commitment to protect human rights across its operations, published on March 16, provides a great example. The policy has a thorough look to it, but it makes not a single mention of advertising, even though advertising accounts for nearly 99% of Facebook’s revenue. If the company really cares about human rights and the public interest, it should uphold these commitments at the core of its business model.

There’s plenty of evidence to indicate that this is needed. Alongside anecdotes cited in last week’s hearing, independent research by groups like the NYU Ad Observatory and The Markup has shown just how precisely Facebook targets ads, which in turn have huge impacts on how people share, read, and understand information (and misinformation) on the platform.

We know that algorithms are the engines for ad-targeting systems, and we know that the company is very concerned about the public image of its algorithms. So this seems like a great time to ask: With the new policy in place, will Facebook try to respect human rights in its algorithms? It’s hard to say. The policy gives some high-level assurances, but offers little detail on implementation. It points to Facebook’s “Responsible AI” efforts, which focus on “understanding fairness and inclusion concerns.”

This is an important consideration, but it’s worth reading between the lines. We know the company is especially concerned about bias for two reasons. First, after ProPublica proved that Facebook was allowing advertisers to exclude users based on their “ethnic affinity,” civil society advocates and the U.S. Department of Housing and Urban Development took the company to court. The terms of the resulting settlements included a civil rights audit that urged the company to consider these issues when building algorithms for advertising. Second, thanks to reporting from BuzzFeed and MIT Tech Review, we know that bias became an especially high priority at Facebook during the Trump years, after Republican lawmakers began accusing the company of over-censoring conservative voices on the platform.

And bias is hardly the only issue that Facebook users are up against here. What about other kinds of harms? In her recent investigation of these issues, MIT Tech Review’s Karen Hao interviewed multiple Facebook engineers who had sought to change algorithms so that they would be less likely to promote hateful and violent content (changes that might therefore reduce user engagement) but were then sidelined by their superiors. Why not let engineers test more ways to reduce algorithmic harms that go beyond bias? Because this could reduce “engagement” and, in turn, profit.

A cornerstone technique that companies can use to identify and mitigate a broad range of possible harms is a human rights impact assessment. Before launching a new product or service, companies should do a thorough review and consider the kinds of harms it could cause.

Facebook is no stranger to this approach. Yet in 2020, Facebook offered no public evidence that it assessed the impacts of its ad targeting systems on users’ rights to privacy or free speech. The company made a limited effort to assess how ads might result in unlawful discrimination within the U.S., but this was in direct response to the aforementioned lawsuits.

When confronted with pressure from powerful political actors (or actual lawsuits), Facebook is willing to make some concessions. But the company seems to draw tight lines in its responses, fixing problems only to the extent that they will appease the relevant parties and help to reduce bad press. To us, this looks like an attempt to protect its core profit model.

Then there’s Google. Despite the company’s general commitment to respect human rights, its more recent “ethical AI” principles, and the fact that it dwarfs its competitors in both the search engine and video platform markets, Google has published little information about how its algorithms are actually built. The company’s website offers a detailed overview of how its search algorithm works, but much less detail about YouTube’s.

This is troubling, given what independent researchers have unearthed about YouTube’s propensity for recommending “extreme” content. A search for political information can lead surprisingly quickly to violent extremist content. And people searching for child pornography, which is illegal and banned on the site, have nevertheless found their way to videos of children playing in swimming pools.

When researchers sounded the alarm about the predatory tendencies of users searching for videos of children, the company made a series of small systemic tweaks to videos featuring children, but it would not turn off sidebar recommendations on these videos, in spite of pleas from child safety advocates.

On March 25th, Rep. Anna Eshoo (D-CA18) asked CEO Sundar Pichai if he was “willing to overhaul YouTube’s core recommendation engine” to correct for the spread of misinformation and hate on the platform. In keeping with a major theme of the hearing, Pichai refused to give a yes-or-no answer.

When we looked at Google’s commitments and transparency around targeted advertising and its impacts on users, the company fared even worse than Facebook. We found no public evidence that it assessed the impacts of its ad targeting systems on users’ rights to privacy, free speech, or non-discrimination.

Although Apple was not represented at the hearing, it’s important to recognize the mammoth role it plays, alongside Google, when it comes to collecting and monetizing data on our mobile devices. Both companies have recently introduced new approaches to data collection, inference, and monetization in a pivot towards using first-party data to target ads. Both have nevertheless framed the move as a consumer-oriented embrace of privacy protections (see the new policies from Apple and Google).

In particular, Apple’s iOS 14 requirement that third-party apps obtain people’s consent to collect their data upon download has triggered public criticism from Facebook, owner of Facebook Messenger, Instagram, and WhatsApp, which combined maintain upwards of three billion users worldwide. Both companies have leaned heavily into public interest arguments, with Apple claiming it wants only to protect user privacy, and Facebook masquerading as a sort of Robin Hood for small businesses, saying that those businesses will suffer the worst economic blow from the changes.

But the real battle here is for our data. Apple’s new anti-tracking measures will make it harder for third parties, including Facebook, to capture our information. In so doing, the measures will put Apple in an even more powerful position to capture, make inferences about, and monetize our data. Experts surmise that this may enable the company to prioritize its own ads network.

In spite of Apple’s lofty promises to protect our rights, we know far too little about how the company is doing these things. In our research this year, we could not find a single piece of public documentation on whether or how Apple conducts user data inference, a key ingredient in the monetization of user data. And just like Google, while Apple has published policies acknowledging that it engages in targeted advertising, we found no evidence that it conducts human rights impact assessments on any of these activities.

In the absence of real transparency from these companies about how they build their technology and what they do with our information, we have only a set of clues about what is happening behind our screens. Company policies and statements are useful to a point, but we have to look at them alongside things like leaked documents from inside the companies and first-hand accounts from former employees, for whom speaking out has become an increasingly popular exit move. Independent research efforts seeking to shed light on how the technology actually works are also paramount, and we know that they are hitting a nerve: this became clear when the NYU Ad Observatory received a cease-and-desist letter from Facebook last fall.

It is gratifying to see lawmakers finally striking at the root of these problems, rather than focusing only on content. There is much to say about harmful content online and how companies moderate or remove it, and we think this is really important. But when it comes to legislation, our money is on the business model. Until they are made to do otherwise, tech CEOs will continue to push through these hearings by deflecting serious questions with noncommittal answers and distracting the public with yes/no polls and crypto clocks. If we are to get out of this mess, lawmakers will need to draw a line in the sand, put the companies under oath, and put the business model, and the technology that drives it, front and center.
