Fake net neutrality comment campaign a harbinger of automated disinformation to come

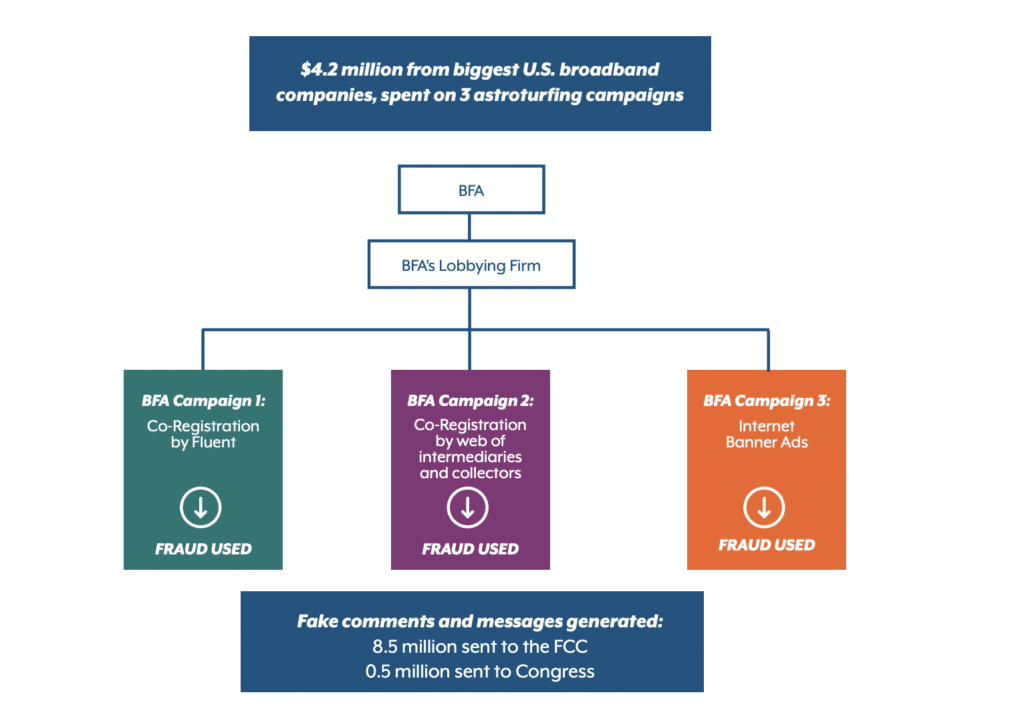

Justin Hendrix / May 6, 2021

In an announcement, New York Attorney General Letitia James said her office had completed an investigation that found multiple entities were responsible for a set of campaigns that drove millions of fake comments, messages, petitions, and letters designed to influence the Federal Communications Commission's (FCC) 2017 rulemaking process on net neutrality. The disclosure raises questions about how more sophisticated systems may be used to perpetrate such attacks in the future.

What happened

The Attorney General reached settlements with three lead generation companies paid by broadband providers that generated 8.5 million fake comments to the FCC (40% of the total number of public comments) and over half a million fake letters to Congress. The lead generation firms that settled with the state were also involved in advocacy campaigns that generated millions more false comments, messages, and petitions on net neutrality to the government. The Attorney General also found that a 19-year-old college student filed 7.7 million pro-net neutrality comments using automated software, and that another 1.6 million were submitted from fictitious entities that were unattributed.

“Americans' voices are being drowned out by masses of fake comments and messages being submitted to the government to sway decision-making,” said Attorney General James. “Instead of actually looking for real responses from the American people, marketing companies are luring vulnerable individuals to their websites with freebies, co-opting their identities, and fabricating responses that giant corporations are then using to influence the policies and laws that govern our lives.”

The Attorney General's report included quotes from individuals whose identities were impersonated in the comment submissions.

“I’m sick to my stomach knowing that somebody stole my identity and used it to push a viewpoint that I do not hold. This solidifies my stance that in no way can the FCC use the public comments as a means to justify the vote they will hold here shortly,” said one. “I find it extremely sick and disrespectful to be using my deceased dad to try to make an unpopular decision look the opposite,” said another.

The lead generation campaign paid for by the broadband companies used co-registration sites to collect personal information. "Individuals were promised, among other things, 'free cash,' gift cards, product 'samples,' and even an e-book of chicken recipes," according to the Attorney General.

What's next

The disclosure of the schemes suggests governments, and many other entities, may not be prepared to contend with new mechanisms to automatically generate content and, indeed, online identities, which are improving rapidly.

New text generation technologies, such as GPT-3, a system developed by researchers at OpenAI, can be used to produce plausible text at scale. In 2019, a Harvard student used an earlier version of OpenAI's language model, GPT-2, to submit close to 1,000 synthetically generated comments to Medicaid.gov during a public comment period. A study found volunteers could not distinguish the synthetic posts from real ones.

Another concern is that such systems may be combined with new mechanisms to impersonate individuals. Researchers at Eindhoven University of Technology in the Netherlands recently published a paper titled "Impersonation-as-a-Service: Characterizing the Emerging Criminal Infrastructure for User Impersonation at Scale" that detailed a Russian service that collects user credentials using custom malware and matches this capability with a marketplace platform to "systematize the delivery of complete user profiles to attackers."

"While AI-powered image and video generation are yielding highly realistic synthetic media, in many ways, synthetic-text generation has arguably surpassed synthetic-media generation," said Hany Farid, associate dean and a professor in the Information School at the University of California, Berkeley. "From the creation of an army of twitter-bots to carpet-bombing of public comments for proposed legislation, synthetic-text generation poses a significant threat."

"It’s going to get harder to detect manipulative commentary," said Renée DiResta, the technical research manager at Stanford Internet Observatory (and a board advisor to Tech Policy Press) who has written about the disinformation potential of text generated by artificial intelligence.

In her report on the exploits targeting the FCC, the New York Attorney General proposed a number of legislative fixes, including that Congress and state governments should prohibit the submission of false comments or comments on behalf of an individual that are not expressly authorized. Congress and state governments should also "amend impersonation laws to provide for substantial penalties when many individuals are impersonated before a government agency or official." Another straightforward proposal is that governments and other entities accepting public comments should have technical safeguards, such as CAPTCHA systems, to combat automated comments, but AI systems have been shown to defeat some of these systems.

So lawmakers will need new tools, and so will technologists.

"Contending with this threat will have to go beyond basic forensic algorithms and will have to contend with a fundamental aspect of today's internet where anonymity has both advantages and disadvantages," said Farid.

DiResta concurs. "We understand public comment as a way of gauging the interest of the people and this speaks to a need to design public comment systems in the future to take these manipulative activities into account," she said. "There will be a tension between respect for privacy and anonymity, and a need to gauge whether the people speaking actually exist."
