Facebook Whistleblower Frances Haugen and WSJ Reporter Jeff Horwitz Reflect One Year On

Justin Hendrix / Dec 2, 2022

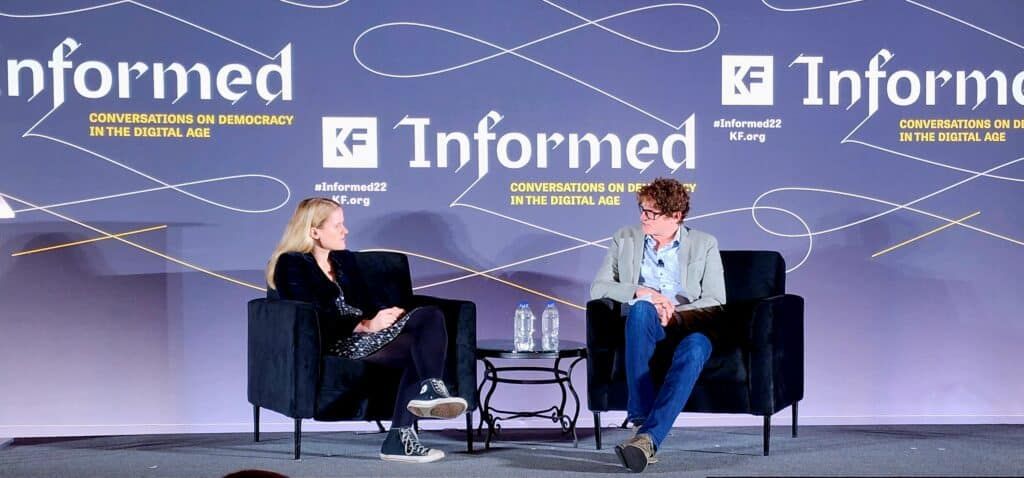

At the Informed conference hosted by the Knight Foundation this week in Miami, Facebook whistleblower Frances Haugen joined Wall Street Journal technology reporter Jeff Horwitz for a conversation reflecting on their relationship before and after the publication of The Facebook Files, the exclusive reports on the tranche of documents Haugen brought out of the company.

The session, titled In Conversation: Frances Haugen and Jeff Horwitz on Tech Whistleblowing, Journalism and the Public Interest, was the first public appearance by the two since the stories first broke in the Journal in September 2021. By October that year, the trove of documents was shared with a range of other news outlets, prompting weeks more of headlines and government hearings over revelations on the company's handling of election and COVID-19 misinformation, hate speech, polarization, users' mental health, privileges for elite accounts, and a range of other phenomena.

The conversation touched on Haugen's motivations, including her fears that tens of millions of people's lives are on the line depending on whether and how Facebook (now Meta) chooses to address the flaws in the platforms it operates, particularly in non-English-speaking countries. It also explored how her relationship with Horwitz evolved and the role he played in putting the disclosure of the documents into a broader historical context.

What follows is a lightly edited transcript of the discussion. Video of the discussion is available here.

Jeff Horwitz:

So yeah, we've known each other for a while now.

Frances Haugen:

It's like two years.

Jeff Horwitz:

Two years. Two years at this point. And I guess let's start with before we knew each other. I think you and I both had run into a whole bunch of disillusioned former Facebook employees, or in your case current Facebook employees, and nobody quite took the approach that you had to having qualms about the company. And I'd be really curious to ask just how you got to, who you spoke to before me? How you thought about what your role and responsibilities were, and how your thinking developed before we ever met?

Frances Haugen:

In defense of my coworkers, I reached out to you at a very, very specific moment in time in Facebook's history, which is the day that they dissolved Civic Integrity. So in the chronology of what happened at Facebook, in the wake of the 2016 election, within days-- I actually went back and looked at the news coverage-- within days of the election people started calling out things like the Macedonian misinfo farms and the presence of these... not even state actors, these people who had commercial interests for promoting misinformation, and how Facebook had been kind of asleep at the wheel. There were a number of features that, as someone who works on algorithms, I had been watching even from the outside and being like, "Why does this feature exist?" There was a carousel that would pop up under any post you engaged with. It's like you hit ‘like’ on something, a little carousel post would pop up and you could tell that that carousel had been prioritizing stories based on engagement, because all the stories would be off the rails.

It'd be like you'd click on something about the election, you'd get a post being like, "Pope endorses Donald Trump." You could tell how these posts have been selected. And in the wake of that election, they form the Civic Integrity team, or they really start building it out. And I saw a lot of people who felt very, very conflicted. There's an internal tension where you know that you have learned a secret that might affect people's lives. People might die if you don't fix this problem. Potentially a lot of people. If you fan Muslim bias in India, there could be a serious mass killing, for example. But at the same time you know, "If I leave the company there'll be one less good person working on this." So there's not quite enough resources to do the job that those people deserve. But you also know if I opt out, there'll be less people.

And so I reached out to you because I saw that Facebook had kind of lost the faith that they had. When you look at the practice of change management, the field of study, how do organizations change? Individuals struggle enough with the ability to change. When you add us together in concert, our institutional momentum, our inertia becomes almost impossible to alter. Because you have to choose a vanguard and point at them and be like, "Well, leadership's going to protect them. This is where we're going, we're going to follow them." And the moment that I reached out to you was the moment they dissolved that team.

And so I don't think it's so much that my coworkers approach the problem in a different way. I think it's that I had the privilege of having an MBA. I had the privilege of being like, "Oh interesting. Things have meaningfully just changed, and we need help from outside."

Jeff Horwitz:

And I think there's a sense that, I mean, generally whistleblowers, they talk to reporters when everything is all wrapped up. Obviously that didn't happen. What was your initial thought process about what you wanted to involve a reporter with, what you wanted to talk about, and how did that change?

Frances Haugen:

So I think I did not understand what an anomaly I was psychologically until I left Facebook. So when I left Facebook, I started interacting very actively with, or even when I start interacting with people beyond you, they would comment to me, they'd be like, "Oh my god, you're so stable." And this is a compliment I hope none of you ever get. It's very off-putting when someone's surprised at how stable you are. Like, "Oh God, was I dressing wrong? Was I acting weird? I don't understand." But there's a real thing that most whistleblowers, they suffer in silence and they suffer alone for a very, very long period of time.

They undergo a huge amount of stress alone, and by the time I reached out to you, I lived with my parents for six months during COVID and I would witness things happening and I would have an outlet to go talk to, where my parents are both professors. My mom is now a priest. I felt like I was not crazy. And I think a lot of whistleblowers, because they go through the whole journey alone, they're completely frayed by the time they begin interacting with the public. And so I had the advantage of, I could approach it calmly. I could approach it as, "I don't have to rush this." And the way I viewed it was Facebook, like Latanya [Sweeney] said, they were acting like the State Department. They were making decisions for history in a way that was isolated, and they would have this blindness about trade-offs.

They would put in constraints that were not real constraints. They would say, "We have no staffing, there's no way we can have staffing." You make $140 billion, I think it's $35 billion of profit a year, $40 billion of profit a year. You don't actually have constraints. That's a chosen constraint when you say I can't have staffing. And I wanted to make sure that history could actually see what had happened at a level where we could have rigor. And so that's why I took so much time and why I didn't touch it.

Jeff Horwitz:

And what was the, we'll call it appeal, of talking with me early on during that period, right? Because you were inside, and from the get go, you were very informed about just ranking and recommendation systems. Obviously that was your background, and you've been working on Civic. So where was the value, I guess, in talking to somebody who was a reporter, and had you ever spoken to reporters before on other things?

Frances Haugen:

So Jeff is... So as much as I'm Latanya's biggest fan girl, I glow over Latanya all the time, because I think she's amazing. One of the things that I think you cannot get enough credit on, Jeff, is the story of the Facebook disclosures is not a story about teenage mental health. That's the story of the land in the United States. The story of Facebook's negligence is about people dying in countries where Facebook is the internet. And you reached out to me, it's true. You did the affirmative action, but I went and I background checked you. I was familiar with your stories before we ever talked. I didn't associate with your name, but I read your coverage of India particularly, and the underinvestment Facebook had done and how that was impacting stability there. And I think if you hadn't shown that you already cared about people's lives and places that are fragile, like how Facebook treated some of the most vulnerable people in the world, I wouldn't have been willing to talk to you.

And so part of what I thought– you were so essential in this process– was people who are experts at things don't understand what is obvious or not obvious to an average person. And I grew so much in my ability to understand the larger picture beyond that specific issue. That's the issue that made me come forward. The idea that Facebook was the internet for a billion, 2 billion people and that those people got the least safety protections. But you came in and asked me all these questions that helped me understand where the hunger was for information and that allowed me to focus my attention.

Jeff Horwitz:

Did it make it harder for you, in some respects, having somebody who was asking you questions about what mattered and why?

Frances Haugen:

No, no, no, no. I love the Socratic method. I think the way we learn is in tension and I think the fact that you were present and you reminded me that it mattered, right?

We have work crises. Right after they dissolved Civic Integrity, I got a new boss. I had to go through months of 'how do you reestablish credibility,' or 'how do you learn how to speak a different language effectively because you're in a new part of the company.' And I'm so grateful you provided a drumbeat, you knew this was a historic moment and you reminded me of what I was thinking about and feeling in the moment when that transition happened. Because I could have lost the thread. I could have let my life... Our lives are... I'm not a martyr. I wasn't going to go... this wasn't my whole purpose, and I'm really glad that you provided an accountability partner and a thought partner in the whole process.

Jeff Horwitz:

Yeah, I think that's the thing just about this whole process for me was... I had to reflect a bit on how, for me, this was all upside, right? There was no way this really goes south for me personally. The worst case was it was going to be some wasted effort. And I think I got... Actually I was– early on, the first few months– I was a little frustrated with you sometimes because you were hard to reach, or there'd be periods of time you'd just go dark, and I think I probably didn't appreciate the level of stress that this required of you. And I guess I'd be... Tell me about beginning to think about what you were going to take out of the company, particularly given that a lot of this stuff wasn't your work product initially.

Frances Haugen:

Yeah, well I think in the early days, part of what was so hard about it was... To give people context, before I joined Facebook. So the job I joined Facebook for was to work on civic misinformation.

And I have lots of friends who are very, very senior security consultants. These are people who go in and are like, "Oh this is how your phone's compromised. I know you thought your phone was fine, actually it's slightly slower because you have all the stuff running on it." And I asked all of them, what should I be asking about before I take this job? Because if you're going to work on civic misinformation, you are now one of the most... Even if your team is small, you are doing a national security role. China has an interest in your phone now. Russia, Iran, Turkey have an interest in your phone. And everyone told me you should assume all your devices are compromised. Your personal devices, your work devices, you should assume you're being watched. And so imagine I'm interacting with Jeff and at this point I was working on threat intelligence. I was working on counter espionage, right? Think about the irony there.

Jeff Horwitz:

With the FBI guys. Former FBI guys.

Frances Haugen:

Former FBI guys who are wonderful, some of my favorite human beings, very funny people. Every time I message Jeff I'm like, "Is someone listening to our conversation?" And so it took a period of time for me to get over that anxiety. And one of the things I'm very, very grateful for Jeff, was there were individual moments where I almost just abandoned the project because I was like, will this be the thing where China will drop an anonymous note to Facebook saying, "Your employee's doing this," just so it causes randomness inside of the company? You just don't even know how to think about those things. And I thought you did a really good job of helping me just think through... I'm a threat modeler.... sit there and model the threat with me. So I didn't go through that process alone. I'm very grateful for that.

Jeff Horwitz:

Now, of course there were limits to what I could do. So I could never tell you that I wanted you to do a particular thing. That would've been inappropriate from the Wall Street Journal’s point of view and a little presumptuous.

And then I also couldn't tell you that it was going to be alright.

Frances Haugen:

Yeah.

Jeff Horwitz:

And I guess I'd be curious about your sense of the risk that you were personally at during this period of time. I think you made it a lot easier for me because your position was that if you ended up being caught doing this after the fact, c'est la vie, you would have no regrets. But I guess I'd be curious about what your expectation was and also what your thoughts were about your privacy and anonymity, right? Because I think that changed over time.

Frances Haugen:

Well there's two questions. So we'll walk through each one, one-by-one. One of the things that I am most proud of that came out of everything I did is that Europe passed whistleblower laws. So after Enron, after WorldCom, those two whistleblowers, a big part of what they did and the advocacy they did afterwards was they got whistleblower laws that applied to me.

The reason why Europe passed whistleblower laws was– the big thing I emphasized in my testimony– was this is not an anomaly. More and more of our economy will be run by opaque systems where we will not even get a chance to know that we should be asking questions because it'll be hidden behind a black box. It'll be in a data center, it'll be on a chip. The only people who will know the relevant questions will be the people inside the company. But the problem with a lot of those systems, they were very, very intimately important for our lives. But each individual case will probably not have the stakes that I viewed about Facebook. So the way I viewed Facebook was, I still earnestly believe that there are 10 or 20 million lives on the line in the next 20 years. That the Facebook disclosures were not about teenage mental health, they are about people dying in Africa because Facebook is the internet, and will be the internet for the next five to 10 to 20 years in Southeast Asia, in South America.

Places that Facebook went in and bought the right to the internet through things like Free Basics. And so for me, I did what I did because I knew that if I didn't do something, I didn't talk to you or someone else, if I didn't get these documents out and those people died, that I would never be able to forgive myself. And so the stakes for me were, whatever, they put me in jail for 10 years? I'll sleep at night for 10 years. And for most people, I don't think, it's not going to be that level of stakes.

Jeff Horwitz:

And I think one of the things that happened… so just Puerto Rico. Frances, after going on two years of Facebook, found that due to some quirks in human resources rules at Facebook, she was going to have to leave the company in a month or return from the place, the US territory that you just moved to.

Frances Haugen:

I really did.

Jeff Horwitz:

And I was invited to come out and make a little visit. And I think, let's put it this way, I did not have a month-long ticket booked initially. I guess, I'd be curious about what changed when you started exploring Facebook's systems in a way that went beyond what you ran into in the normal course of affairs for your job.

Frances Haugen:

Well I think it's also a thing of… so like I said earlier, my lens on this project was... So I'm a Cold War Studies minor. The one thing that most people don't know is that a huge fraction of everything we know about the Soviet Union, the actual ‘how’ the Soviet Union functioned, was because a single academic at Hoover and Stanford went into the Soviet Union and scanned the archives after the wall fell. He was like, "We don't know how long this window's open. We're going to go in there, we're going to microfiche everything we possibly can." A single person was like, "History. If we want to know what happened in history, if we want to be able to prevent things, if we want to be able to not read tea leaves through an organization that lied to us continuously, we have to do this now." And the window really was small, it was three or four years. They started publishing papers, Russia started feeling embarrassed about some stuff that came out and they locked the best parts about the archive back down.

And so I'm really grateful that you were like, "We have a historic opportunity here. If we want to make sure the history gets written, you have three more weeks." So the situation was, I'm recovering from being paralyzed beneath my knees and I still have a lot of pain, especially when I'm cold. And during COVID, we can only socialize outdoors in San Francisco, which is a fog-filled frozen freezer. So I went to Puerto Rico, and I found out in the process of trying to actually formalize being in Puerto Rico that you cannot live in a territory, be it British or American territory, and work at Facebook. And so I informed Jeff out of the blue, I'm like, "Hey, by the way, I have to make a decision next week. Am I leaving Facebook?" And this suddenly made it a very discrete vision of, ‘this is the last window, this is the last time we get to ask questions.’

And I'm so grateful that you put in a huge amount of effort to just be there and make sure that we asked as many questions as we could so history got to have some kind of record for it.

Jeff Horwitz:

Yeah, I think something we talked about a lot was that this wasn't replicable. That after a certain point it became apparent, right. And also I think to some degree that's when your confidentiality went down the toilet too. You were getting into enemy territory inside what is a forensically very, very precise system in terms of tracking what you were doing. I mean, I think we were both baffled that, candidly, Facebook was as bad at observing anomalies in employee behavior on its systems as... I mean what, you being in a document from 2019 in which Instagram-

Frances Haugen:

2014.

Jeff Horwitz:

Yeah, yeah. In which the Instagram leadership is presenting a slideshow to Mark. You didn't work on Instagram, you didn't work on those issues. You were expecting to get caught basically every morning. I remember when the internet went down briefly, you were like "Oh crap, the game's up. We're done." And I think you had to... I guess to some degree... Obviously I was not requesting you grab things, but did it feel like I was pushing you, and was that a good or a bad thing? Because I was pushing you.

Frances Haugen:

So, I think there's two issues there. So one is unquestionably, once I really got a clear vision of what the stakes were... so I think it's always important for us to think about what is the process of knowledge formation? Where does a thesis come from? We start with feelings, we feel that something's off and then we gather evidence and we begin to have a thesis on what is off and then we become confident in our thesis and then you actually, once you communicate to someone else, you're like, "Oh, it's been challenged. I feel this is good." And I don't know, at some point in that process, maybe a couple of months in, I started understanding what the scope of what we were doing was, and the last couple of months was incredibly hard for me because I both felt we were not done, we didn't have an adequate portrait for history. But I also knew if at any point I got caught, no one would ever get to do it again.

And I think in that last three weeks, part of why I'm so grateful… I didn't really understand the boundaries of what were the most pressing issues to the public, and I think as you ask questions and paint an even fuller portrait, I don't think anyone who has empathy wouldn't feel like you could feel the heat of why this needed to be done. And so I'm very grateful that you helped provide context for me of what history needed to know. You were the first draft of history as a good journalist should be.

Jeff Horwitz:

And then let's talk about working with... So after Puerto Rico is done and things start getting… I'm going to be doing my work with my team of folks at the Wall Street Journal, we're spinning up very slowly with a large organization, which is what we do, and I recall you're doing a lot of things on the legal and prep side and obviously you made the decision that you were going to go public pretty early on.

Frances Haugen:

I don't think it was pretty early on. We didn't make that decision fully until August.

Jeff Horwitz:

Really? Good.

Frances Haugen:

So I left in May and so for context, part of why it is so detailed was I wanted it to stand on its own. It might be surprising given my public persona as a whistleblower, but I've had maybe two birthday parties in 20 years. I don't really like being the center of attention. I eloped the first time I got married. I tried really hard to have a wedding the second time. We had a date, we sent out invites, we still eloped. And the thing my lawyers were very, very clear about was they were like, "It is delusional for you to think that Facebook won't out you. If Facebook can control the moment that you get introduced to the public and how you get framed, they know who it is. They can see all the interactions. As soon as those stories come out, as soon as they start asking for confirmation or a chance to respond, they're going to figure out it's you."

So I think the thing that was hardest for me was, to be fair, you said you would start publishing within a month, six weeks.

Jeff Horwitz:

We were very slow at the Wall Street Journal.

Frances Haugen:

And it took you four months.

Jeff Horwitz:

It's true.

Frances Haugen:

Just in fairness. And so I think it's one of these things where the amount of education that technologists have about the process of journalism is very, very thin. One of the most valuable things the journalism community can do is provide a lot more education on the process of journalism, particularly to tech employees. Targeted ads are very cheap especially, or putting on YouTube videos, that kind of thing.

Jeff Horwitz:

What was the rep of reporters inside Facebook, by the way?

Frances Haugen:

Great question. So I remember inside of Facebook there being large public events where people, executives would talk about how selfish reporters are, which if you know reporters, reporters might have certain stereotypical character flaws. But being selfish people is, I don't think, one of them. They'd be, "They just want the fame and glory. That's all they're doing. You're being used." And even the idea of what is the role of journalism in a democracy, people need to understand. I am on the stage because I have one of the lightest weight engineering degrees you could get in the United States. Super flat out. I went to an experimental college, I was in the first year, you had the least requirements for an engineering degree of anywhere in the United States or probably the world, and I got to take more humanities classes as a result. Every elective class you take beyond your GenEd requirements at a US university decreases the chance that you will get hired at Google, period. Google even knew this. They knew that even Bioinformatics majors had trouble getting hired compared to CS majors.

There is a huge gap where people don't understand how important it is to be a human being first and a citizen second and employee third. They actively indoctrinate you the other way, to say your loyalty is to your coworkers. And I think a thing that I want to be sensitive on is part of why I was able to do what I did was because Facebook had a culture of openness. And I'm sure there are people inside the company who say, "You ruined a golden age. We had the ability to collaborate and now we don't, everything's locked down." And I think the flip side of that, that I would say-

Jeff Horwitz:

They were saying that before you did that too, though. It was already over.

Frances Haugen:

Sure, yeah. But I think there's a thing where companies that are willing to live in a transparent world will be more successful. Employees will want to work with them because they don't have to lie. They'll be able to collaborate. A company that can be open versus a company that can't be open, the one that can be open will be able to succeed.

Jeff Horwitz:

So let's talk about simultaneously working with the press and with a lawyer and not necessarily entirely, not as a coordinated process.

I recall a lot of people telling you that you were going to go to jail. I recall Edward Snowden and Reality Winner and Chelsea Manning's name being thrown out.

Frances Haugen:

Yeah. Because Facebook is a nation state.

Jeff Horwitz:

Now I am not a lawyer so I can't tell you that that's not going to happen but I can recall thinking to myself, what the hell is wrong with these people? She doesn't have a security clearance and there was no track record of this happening.

Frances Haugen:

There's no Espionage Act going on.

Jeff Horwitz:

And then I also recall there being some disputes over who was going to represent you in particular fashions. And I think that process left me feeling... I mean look, obviously in terms of you as an advocate, I can't do that. I can't even root for you as an advocate, and as a source, of course, though I cared a lot about you coming out of this as well. And I would be curious what… and I pride myself on taking care of people who are sources, but I felt like I could not really help much and I felt like you had... I guess I'd be curious what resources you found were available to you, and what didn't work, and what you would like to see in the future for people who might play a similar role to you.

Frances Haugen:

I talked before about the idea that we should not treat me as an anomaly. We should treat me like a first instance in a pattern that we need many more of, because as our economy becomes more opaque, we need people on the inside to come forward. We need to think about what is that pipeline of education, everything from... There are engineering programs in the United States, like UCLA’s, where they don't talk about whistleblowing in their ethics program. And that's because one of the board members vetoed it. I won't name names because I'm not a shamer, but we need to have everything from education– colleges– all the way through current employees.

And then we need infrastructure for supporting those whistleblowers, because right now there are a couple of... There are a cluster of SEC whistleblower firms, but there is no way for someone who has no experience with this to differentiate among them. There are firms with very obvious names like Whistleblower Aid, where Whistleblower Aid I think plays a very critical role for national security whistleblowers. I don't think, necessarily, I was a good fit for them, but because I didn't know anything about how to even evaluate lawyers, I got thrown in the middle and there were parties that tried to step in and say, "You need a different kind of set of support." And because I didn't even know how to evaluate between these parties and I didn't know how to assess their interests and things like that, I got really left alone and things happened where the process was substantially... We came very close to having it being disastrous.

Things like, I didn't get media trained until five days before I filmed 60 Minutes, which was two days before your first article came out. And so I think there's a deep, deep need for infrastructure where even having a centralized webpage saying here are the strengths of these different whistleblowing firms. Even that level of context is missing right now. And it's serious because you have people who have put themselves in very vulnerable positions who end up being in places where they don't know whose interests are being represented or whether or not even with a lawyer that's safe. And so I feel kind of guilty that people are modeling their whistleblows on mine, and I don't necessarily think that was the ideal path.

Jeff Horwitz:

I think things got tense between us. It definitely got tense between us. I think partly because I had some concerns about how, just on the source protection level, it seemed like some things were a little weird. And then I also think you had your concerns with how the Wall Street Journal as an institution was approaching it and I mean, you gave us permission to publish the documents in full had we so chosen. That was not something the Journal wished to do. And I'd be curious about where you felt like journalism was not– or at least a particular outfit and a particular partnership– was not going to be able to accomplish what you wanted to accomplish. So, what led you to think that?

Frances Haugen:

So I think there's a couple different puzzle pieces there. So one, I think you had very legitimate concerns about the people who were around me. When I say things like, "We desperately need some infrastructure that is at least a little bit of Consumer Reports on legal representation," it's really, really vitally important. Because there was a period of time where the only support I had... I don't want to say that you had pure intentions. You have your own conflicts of interest, but at the same time you also were seeing really outlier behavior among some of the people who were around me. And I felt really bad for you that due to– I don't want to use the ‘negligence’ word, I think that's too aggressive– but there were definitely things that happened where you were harmed because people around me acted in inappropriate ways.

Jeff Horwitz:

It worked out fine for me in the end.

Frances Haugen:

It worked out fine. But the secondary thing, so that's one thing I want to acknowledge and I think that did cause tension between us because those actions, particularly things like 60 Minutes published some of our SEC disclosures that closely modeled reporting that Jeff was going to do.

Jeff Horwitz:

Which was cool. That was good. Thank you. The Wall Street Journal does not do TV very well.

Frances Haugen:

No, because... No, but I never gave permission to publish it and it meant that those issues didn't get introduced to the public with the level of thoroughness. I love the Wall Street Journal-

Jeff Horwitz:

Oh you're talking about the SEC cover letters.

Frances Haugen:

Yeah. And it meant that things like random bloggers wrote really cursory things on me instead of having them broken by the Wall Street Journal, which is why I tapped them, and so there were things like that happening.

But the secondary issue is this question of why did we move to the consortium model? And the reason we moved to consortium model was, one, I had brought up since the beginning that I wanted to have non-English sources have access to the documents and have an international consortium as well, and part of why those plans didn't get as far along before the coverage came out was my partner slipped in a shower in Vegas, under circumstances which we will not go into, and got a frontal lobe bleed in the middle of July.

So literally, I have a conversation with Jeff a week before this where I'm like, "Jeff, we need to start discussing the non-English consortium because I want to have the Wall Street Journal run an English exclusive around an international consortium." And then I spend the next month with someone who is belligerent because he has a frontal lobe bleed and is irrational. It's one of the hardest points in my life. And so that didn't get as much inertia, or we couldn't even start those conversations until right before their incursion. But once I came out, the press team that was working with me, they were like, "We have this real problem, which is there are all these endless sources that feel slighted that they didn't get the crown jewels, and if you don't allow them to also be involved in this process, they're going to make you the story."

Jeff Horwitz:

I always thought that was... but yes.

Frances Haugen:

I understand. But that's why we expanded.

Jeff Horwitz:

No, no, it's a question about process. I mean, I remember you had... Your thought was that basically if the US government, if this was a chance you were going to lay out the case and lay out a potential way forward for data transparency as well as for rethinking content moderation and getting past the, "Take down the bad stuff," arguments which-

Frances Haugen:

Doesn't scale.

Jeff Horwitz:

Yeah, dead end.

Frances Haugen:

Doesn't work and doesn't scale.

Jeff Horwitz:

The current progress of US legislation I think, I've talked to you obviously, we are both into data transparency and there are some people in this audience who are involved in that effort. I think we're both pretty bullish on that as a thing that would be useful for me doing my job and you as an advocate. Are you disappointed, though, that nothing else has happened in the US?

Frances Haugen:

So one of the things people often ask me all these questions about is why was Europe able to pass the Digital Services Act, which is a generational law, right? It's on par with Section 230 in terms of changing the incentives for the internet. Why was Europe able to do that while the United States hasn't presented any legislation to do that? When someone yesterday said PATA is coming very close to passing, oh that would be amazing. Nate [Persily], I know you're in the audience, I'm rooting for you. But Europe was using a fundamentally different product.

Facebook overwhelmingly spent their safety budget on English in the United States because they knew they were an American company that could be regulated by the United States, and Europe is a much, much more linguistically diverse place where I'm pretty sure for many languages in Europe they had almost no moderators, right? I had a Norwegian journalist say, "We gave Facebook a thousand posts promoting suicide in Norwegian. We even reported those and none of them got taken down." And so Europe was coming up with a different set of incentives, and so I'm not surprised this stuff hasn't passed in the United States because Facebook has spent hundreds of millions of dollars telling people they have to choose between content moderation, freedom of speech and safety.

It's like, "Oh I know all these things are bad, but this is your only way to solve it." And I hope that when the Facebook archive goes live– I'm rooting for you also, Latanya– that we can see that there are a huge suite of other approaches and other tools that we can use to make the system safer, but those tools will only get used if we have transparency because the business incentives do not incentivize these issues.

Jeff Horwitz:

Tell me about your new nonprofit and what you are hoping to accomplish in the last year and two months of public advocacy? Where are you trying to push things forward?

Frances Haugen:

So, as a product manager, one of the most important techniques or approaches that I learned at Facebook is that Facebook is a product culture that really believes in clearly articulating what is the problem you're trying to solve. So you can show me this cool thing you want to build, but unless we can agree on ‘what is the problem we need to solve,’ you may not actually have a good solution. And when I look at Facebook, I don't see the problem to be solved as there are nefarious hackers inside the company or that there are specific things with these technologies. I see it as there are not enough people sitting at the table that get to weigh in on what the problems are or how those problems should be solved.

And right now there are hundreds of people in the world who really understand how those algorithmic systems work. What the choices are that they need, what the trade-offs are, what the consequences are; and that I think we need a million people involved who meaningfully understand and who could contribute to a conversation on how we should approach these systems. And so we're focusing on what I call capacity building and the ecosystem of accountability. So that's the thousand academics that Latanya talked about. That's making sure legislative aides in Congress understand what's possible and what the trade-offs are. That's civil litigators who understand what it means to cut a corner. That means concerned citizens.

Where is Mothers Against Drunk Driving for social media? We need that whole ecosystem. We need investors to understand what long-term profit means versus short-term profit. And we're working on tools around how do you actually bring that million people to the table?

Jeff Horwitz:

So I think something that I noticed that changed after this, and I think partly because I was involved with it, that probably made it a little bit easier but I think probably other reporters in this room have learned as well. There was something really useful about you, just the NDA culture around Facebook.

It lost its hold. A lot of people, a lot of former employees were willing to talk. I mean, partly it helped that we had the documents. Obviously the stuff that will go out will be redacted, but we could call up the former employees and say, "Well, we've seen your work product already. Sorry." But I guess I'm wondering what role there is for former employees going forward, and what you would want to see former employees deal with? There's a way to incorporate more of them into this discussion. Because I think you got a lot of this too, which is, "Well, screw her. She took blood money for years. How could she possibly be a critic, boohoo?" I mean you got a lot of that early on and I think there's been kind of a tendency to have us versus them. I would be curious about what you would think.

Frances Haugen:

So one of the big projects we want to work on is a simulated social network, and the intention of that is right now we don't have any way of actually teaching these things in schools. So one thing that the Twitter whistleblower highlighted was that basically all the safety functions inside Twitter were critically understaffed and that's because every person who does my job in industry was trained in industry. We're going to Mars with SpaceX because they can hire Ph.D. aeronautical engineers, but the public trained them for years, 12 years, 10 years. I think there is a need for those people and their knowledge. Remember that knowledge is going to evaporate. It's transient. Facebook is cutting the teams that actually understand their systems. TikTok probably doesn't invest in the same way on those systems. They're not as profitable as Facebook is. Twitter cut their teams. We need to figure out ways for those people to begin capturing their knowledge because it is unlikely once the next wave of social networks comes through encrypted end-to-end social networks, we will never get to ask those questions again and that's the other reason why I capture this.

Because we have to get this knowledge in a set form and reproduce it and teach it because we will not get to learn it once we're working with end-to-end encrypted social networks.

Jeff Horwitz:

And let's see.

Frances Haugen:

We should be respectful of time.

Jeff Horwitz:

Yeah, are we... Okay. No, no. It just switched from saying, "Please ad lib for a few more minutes" to "Please wrap." So boom, we're done here. See you guys later. Bye.

Frances Haugen:

Thank you guys.
