Emerging AI Governance is an Opportunity for Business Leaders to Accelerate Innovation and Profitability

Abhishek Gupta, Risto Uuk, Richard Mallah, Frances Pye / May 31, 2023

Abhishek Gupta is the Senior Responsible AI Leader & Expert with the Boston Consulting Group (BCG) and also the Founder & Principal Researcher at the Montreal AI Ethics Institute; Risto Uuk is a Policy Researcher at the Future of Life Institute; Richard Mallah is an AI Safety Researcher at the Future of Life Institute; and Frances Pye is an Associate at the Boston Consulting Group.

As AI capabilities rapidly advance, especially in generative AI, there is a growing need for governance systems that ensure AI is developed responsibly and in ways that benefit society. Much of the current Responsible AI (RAI) discussion focuses on risk mitigation. Although important, this precautionary narrative overlooks the ways in which regulation and governance can promote innovation.

We argue that if companies across industries take a proactive approach to corporate AI governance, this could boost innovation (echoing the UK Government's white paper on a pro-innovation approach to AI regulation) and profitability, both for individual companies and for the entire industry that designs, develops, and deploys AI. This can be achieved through a variety of mechanisms, outlined below, including higher system quality, project viability, a safety race to the top, usage feedback, and increased government funding and signaling.

Organizations that recognize this early can not only boost innovation and profitability sooner but also potentially benefit from a first-mover advantage.

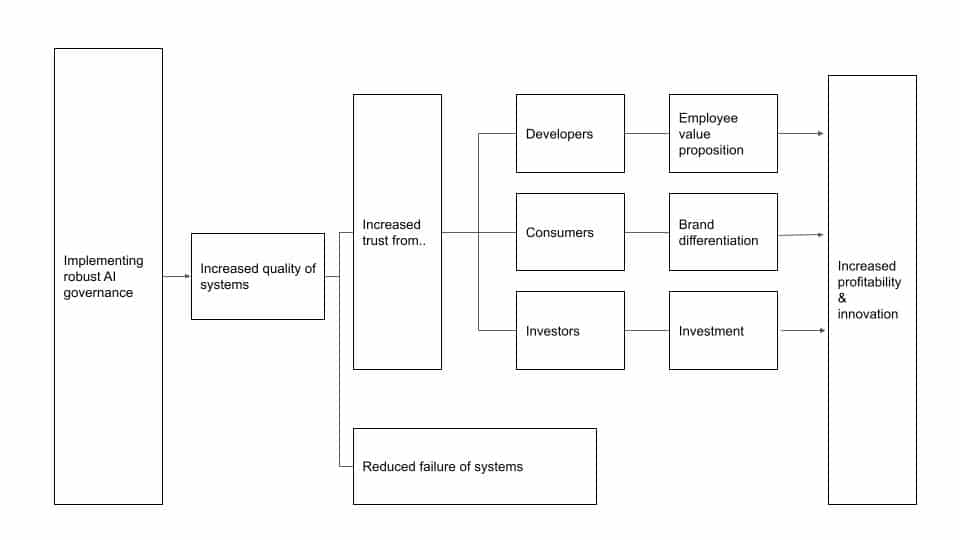

1. Impactful AI governance increases the quality of AI systems.

Successfully implementing AI governance tools can unlock higher-quality AI systems. Such systems should fail less often and earn greater trust from downstream developers, consumers, and regulators, which in turn can drive innovation and profitability. Governance standards that signal this higher quality, such as risk management standards and output explainability, amplify the effect: they raise customer trust, create more robust development processes that catch and correct errors earlier in the AI lifecycle, and help system developers address points of failure rapidly.

A specific example: an actionable Code of Conduct, including reporting and transparency requirements. With a Code of Conduct, companies can define concrete AI governance practices across the full life cycle of an AI system. This leads to better internal practices for developing AI and, hence, to higher-quality AI systems. Such systems may be more reliable and operate more efficiently, reducing the risk of costly errors, project cancellation, or downtime and thereby increasing return on investment (ROI). BCG's recent RAI study found that the failure rate of AI systems was reduced by 9% in companies that achieve RAI before scaling compared to those that don't.

Such frameworks will also allow organizations to differentiate their brand with consumers and prospective employees and to avoid costly reputational damage. These mechanisms will be especially important in highly competitive business-to-consumer (B2C) industries with frequent customer contact. Other governance tools that can trigger similar mechanisms include the Three Lines of Defence (3LOD) model, regulatory sandboxes, incident databases, assurance standards, trustworthy AI assessment lists, and regulations that set the 'rules of the game' (cf. traffic lights). Comprehensive frameworks such as the NIST AI RMF combine several of these elements to give organizations a ready reference for implementation.

2. Enhanced AI governance can collectively boost industries as a whole.

In addition to the specific mechanisms outlined above, the ecosystem of governance tools can come together to grow industries, promote a safety race to the top, and encourage the creation of new sub-industries.

Firstly, regulation can help establish clear guidelines and standards for developing and deploying AI systems, for example, standards for accuracy, reliability, and risk management. Such guidelines can provide a stable and predictable framework for innovation, reducing uncertainty and risk in AI system development. This can draw more developers into the field and encourage greater investment from public and private organizations, thereby boosting the industry as a whole.

Regulatory mechanisms can also combine to promote a 'race to the top' in safety, boosting trust and the industry as a whole. Commercial aviation offers an example. The Commercial Aviation Safety Team (CAST) monitors accident data, often drawn from voluntary reporting programs, to proactively detect risks and implement mitigation strategies before incidents occur. After CAST was established, the fatality risk of commercial aviation in the US fell by 83% between 1998 and 2008, while the industry grew by around 5% per year in passenger kilometers over the same period. A race to the top in safety of this kind increases assurance and consumer trust, which in turn can attract more investment.

Finally, governance can give rise to entirely new industries devoted to meeting governance goals. For example, organizations might innovate on how to increase the explainability of models cost-effectively, or experiment with more inventive proposals such as regulatory markets.

3. Effective AI governance can notably increase the trust that governments and governance organizations place in organizations developing and deploying AI systems, which is critical for investment, procurement, and signaling to other investors.

Governments and governance organizations have a strong track record of successfully investing in AI technologies and their inputs (e.g., the Open Data Institute, Horizon Europe), as well as of acting as demand-side stimulators for long-term, high-risk innovations that underpin many of the technologies we use today. Examples include DARPA research that laid the foundations of the Internet and subsidy schemes that financially supported novel technologies such as consumer solar panels. Governments are also significant procurers of technology and can drive large-scale uptake of new technologies. Finally, government funding acts as a signal to private industry of the value and security of an investment. Such investment would enable further innovation in AI systems. Implementing effective AI governance will therefore allow organizations to access the innovation and profitability benefits that come with government trust.

- - -

Counter to the commonly held belief that regulation stifles innovation, or that AI regulation should be considered only as a means of risk mitigation, AI governance presents significant innovation opportunities for organizations: it increases the quality of AI systems, promotes a race to the top in AI safety, and encourages greater government investment in AI technologies. Moreover, organizations that invest in AI governance capabilities early can gain a first-mover competitive advantage.

Acknowledgments: The authors would also like to thank Sabrina Kuespert for her valuable contributions and insights.
