DSA Showdown: Unpacking the EU's Preliminary Findings Against X

Jordi Calvet-Bademunt / Jul 17, 2024

Last Friday, the European Commission shared with X its preliminary view that the company is breaching the Digital Services Act (DSA), Europe's online safety rulebook. Following the announcement, some media outlets were quick to report that the Commission had charged "Elon Musk's X for letting disinfo run wild." In a conspiratorial tone, Elon Musk accused the Commission of offering X an "illegal secret deal": if X quietly censored speech without telling anyone, the Commission would not fine the company.

Both these takes are one-sided. The preliminary findings are narrower than the initial investigation and do not explicitly deal with “information manipulation,” which is still being investigated. In addition, the “illegal secret deal” is not illegal and not so secret.

To promote transparency and public discourse, my organization, The Future of Free Speech, is tracking the enforcement of the DSA. Drawing on the Commission's official press releases, the following discussion provides a factual overview of the DSA enforcement proceeding against X.

The Origins Of The Findings

Last October, following Hamas’ attack on Israel, the European Commission sent X a request for information under the DSA regarding “the alleged spreading of illegal content and disinformation, in particular the spreading of terrorist and violent content and hate speech.”

Thierry Breton, the EU Commissioner who acts as the bloc's top digital enforcer, sent an accompanying letter that sparked significant concerns. He sent similar letters to Meta, TikTok, and YouTube. Twenty-eight civil society organizations, including mine, wrote to Commissioner Breton to express concern about, among other issues, the apparent conflation of illegal content with disinformation, which is generally protected by freedom of expression.

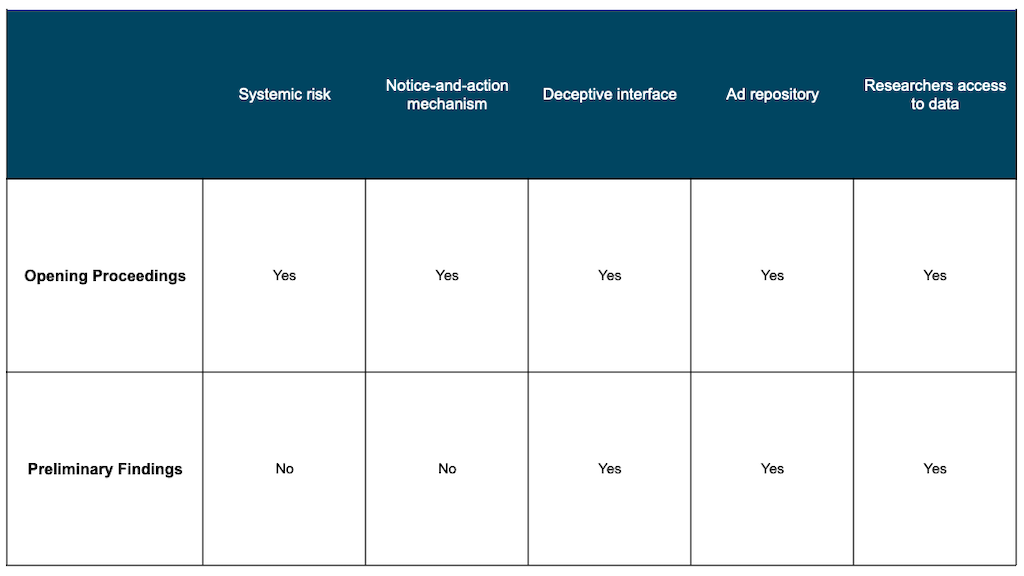

Eventually, in December 2023, the European Commission opened formal proceedings against X. This enforcement stage empowers the Commission to adopt interim measures and non-compliance decisions. According to the press release, the formal proceedings focused on five key issues:

- Systemic Risks. Very large online platforms (VLOPs), like X, must assess and mitigate systemic risks stemming from their services (articles 34 and 35 of the DSA). Systemic risk is a nebulous concept in the DSA that requires balancing multiple conflicting objectives, such as addressing negative effects on civic discourse, public security, and free speech. We have publicly shared our concerns regarding these provisions on numerous occasions. The press releases suggest that the systemic risk obligations were the key basis for investigating the dissemination of illegal content and "information manipulation" on X.

- Notice-And-Action Mechanism. Online platforms must swiftly notify users of content moderation decisions and provide information on redress possibilities (article 16).

- Deceptive Interface. Online platforms must not design, organize, or operate their online interfaces in a way that deceives or manipulates their users or in a way that otherwise materially distorts or impairs their ability to make free and informed decisions (article 25). In particular, the European Commission was worried about the Blue checkmarks (more on this below).

- Ad Repository. VLOPs must compile and publicly make available through a searchable tool a repository containing advertisements on their platform until one year after the advertisement was presented for the last time (article 39).

- Researchers' Access To Data. VLOPs must provide researchers with adequate access to platform data (article 40).

Before sharing the preliminary findings, the Commission sent X two further requests for information: one concerning its decision to reduce the resources it devotes to content moderation, and another concerning its risk assessment and mitigation measures for generative AI.

What Are The Preliminary Findings About?

According to publicly available information, the preliminary findings sent to X are the first of their kind for any company. Given this, and the prominence of both X and Musk, they received significant media attention. But what are these preliminary findings about?

The preliminary findings are based on the focus areas included in the opening of the proceedings, but importantly, they are narrower. They focus on three areas only:

- Deceptive Interface (article 25). According to the Commission, X's design and operation of its interface for "verified accounts" with the "Blue checkmark" deviate from industry practices and mislead users. The ability for anyone to subscribe to obtain "verified" status undermines users' ability to make informed decisions about accounts' authenticity and content. The Commission also claims there is evidence suggesting malicious actors exploit "verified accounts" to deceive users.

- Ad Repository (article 39). The Commission considers that X fails to comply with the required transparency in advertising. It does not provide a searchable and reliable advertisement repository but instead implements design features and access barriers that hinder transparency for users. The design restricts necessary supervision and research into emerging risks associated with online advertising distribution.

- Researchers' Access To Data (article 40). The Commission argues that X falls short in providing researchers with access to its public data, as required by the DSA. X's terms of service prohibit eligible researchers from independently accessing public data through methods such as scraping. Moreover, X's process for granting access to its application programming interface (API) appears to discourage researchers from conducting research projects or to force them to pay disproportionately high fees.

Notably, the preliminary findings say nothing about assessing and mitigating systemic risk (articles 34 and 35). In this regard, the Commission's press release says that the investigation continues regarding "the dissemination of illegal content and the effectiveness of the measures taken to combat information manipulation." I would add that, given the particularly negative impact this focus area can have on freedom of expression, it is crucial that the Commission proceed extremely carefully. The preliminary findings also do not cover the notice-and-action mechanism (article 16); in fact, they do not mention it at all.

Comparison of focus areas in the opening of the formal proceedings vs. preliminary findings

What About The “Illegal Secret Deal”?

Despite Musk's accusation, the supposed "illegal secret deal" seems to be simply the commitments process established by the DSA. Commissioner Breton said as much, adding that it was X's team "who asked the Commission to explain the process for settlement and to clarify [their] concerns."

The commitments process enables VLOPs to offer binding measures "to ensure compliance with the [DSA]" and settle the investigation. This mechanism may limit transparency and be susceptible to abuse if, for instance, it is used to secure measures that go beyond what the DSA requires; measures that excessively limit discourse would restrict freedom of expression. Whatever its flaws, however, the commitments process is explicitly provided for in the law.

Moreover, the DSA obliges the Commission to publish its decisions concerning commitments. This may be insufficient to ensure appropriate scrutiny of decisions that could affect political and public discourse. That said, it seems disingenuous to describe as "secret" a deal that will be made public, at least to some extent.

Given Musk's public statements, it seems unlikely that X will pursue the commitments route. Following the preliminary findings, X can now examine the documents in the Commission's investigation file and reply to the Commission's concerns. If the preliminary findings are confirmed, the Commission can adopt a non-compliance decision with a fine of up to 6% of X's total worldwide annual turnover and order X to take measures to address the breach. A non-compliance decision could also trigger a period of enhanced supervision to ensure that X complies with the measures it takes to remedy the breach.

More details on this investigation and the European Commission's other enforcement actions are available at The Future of Free Speech's DSA Enforcement Tracker.
