Don’t Let Generative AI Distract Us from the Real Election Risks in Tech

Matt Motyl, Spencer Gurley / Aug 16, 2024

Countless stories claim generative AI is democratizing disinformation and hijacking democracy, and a recent survey shows that 47% of US voters sometimes see what they think is AI-generated election content in their feeds. Yet, with the bulk of this year’s 65+ national elections in the rearview mirror, it would appear that many of the most impactful media artifacts were not produced with generative AI, but rather were real world events that many witnessed in real time, such as the assassination attempt on former President Donald Trump and a horrendous debate performance by Joe Biden that contributed to the sitting US president stopping his re-election campaign. Accusations that opponents are using AI to mislead are perhaps more salient than actual uses of AI – this week, Donald Trump is falsely claiming that AI was used to inflate the size of Vice President Kamala Harris’ crowds.

In other words, the sensational, sky-is-falling warnings that AI-generated media will impact elections have not come to pass. Rather, inflation of the threat may be contributing to the growing distrust that people have in elections, governance, and the veracity of information online. This is not to say that generative AI will not harm electoral processes, but the harms are tied to its distribution mechanisms. To date, the harms of synthetic media seem to stem more from alarmist predictions in news media than from anything actually created with generative AI tools.

A more effective approach to responsibly covering AI in the context of elections emphasizes contextualized reporting and promoting media literacy among audiences. To comprehend the impact of AI on elections, it's essential to recognize the backdrop against which these advancements are unfolding. The proliferation of easy-to-use and publicly available large language models (LLMs) and other generative AI technologies has led some to dub this year’s elections the first AI elections. While these advancements pose risks, most of these risks are not new, and we already know some of the effective levers that tech companies can pull to mitigate the risks, particularly on social media platforms.

For example, misinformation, or shared inaccurate information, is as old as interpersonal communication, even if the term has only been used for the past 419 years. However, this type of harmful content can now spread faster and further than ever before due to the internet and massive social media platforms. The unique challenge with the latest generative AI tools is that now it is easier than ever for anyone on the internet to ask chatbots to write a compelling argument or create a realistic deepfake. The dissemination of this content on mass and social media could be quite harmful to the electoral process, but the underlying distribution mechanisms are little changed. So, what is new is not the risk of false or manipulated media, but the ease of creating and widely distributing this risky content.

As an integrity professional who has spent years working on safeguarding elections and combating misinformation at large technology companies, I believe new AI tools primarily facilitate old harms on existing platforms that have yet to address their known vulnerabilities.

It is true that generative AI tools have impressive capabilities to generate content, but the generation of content is not the key problem. People have created false and misleading political propaganda throughout history. So, the fact that someone can enter a prompt into a generative AI tool to create content is not in and of itself harmful. At this point in time, using AI to create a deepfake is akin to using a chisel to carve a misleading map into a desolate cave’s wall. The creator may be trying to harm others, but until they find a way to distribute their creation, it will have little impact. Yet, because of not-so-new social media platforms, the content can be delivered directly to millions or billions of people. Given the known bias of engagement-based recommendation algorithms used by most of these platforms to more widely distribute divisive, misleading, and otherwise risky content, the more harmful the content is, the more likely it is to be delivered to more people. This holds true regardless of whether a human took the time to manually create the content, or the human instructed a generative AI tool to do it. The cause of the harm is not new; it remains the people motivated to advance some narrative by exploiting existing distribution tools, like mass and social media.

Like many technological advances, these publicly available generative AI tools democratize content creation. Therefore, creating fake news articles or deepfake campaign videos is no longer limited to a select few people with the requisite technical skill or the wealth to afford the creation tools; it is open to anyone capable of using a computer or smartphone. This democratization of creation doesn’t create new harms inherently, but it does cause massive growth in the amount of harmful content that is produced. Given that social media platforms rarely face accountability for the harms people experience when using their products, they have not taken sufficient action to protect their users from harm. In contrast to 2020, when many of these companies employed thousands of experts to safeguard the US election and largely averted a repeat of past election fiascos, the companies conducted mass layoffs of these experts ahead of the 2024 cycle and are likely ill-prepared for the vast increase in the volume of harmful content made possible by generative AI tools. Thus, the risk is not generative AI as much as it is social media companies choosing not to invest in protecting users from political malfeasance.

As in the past, the risks are greater in smaller and less wealthy countries because the safety tools at the big technology companies are trained primarily using English-language content in the Western hemisphere. Therefore, the ever-growing volume of harmful content is more likely to evade detection and removal in places like Ethiopia and Myanmar, with harrowing results. The problem is not that generative AI is creating a categorically new risk, but rather that it acts as an accelerant added to the still-raging fires on existing distribution platforms.

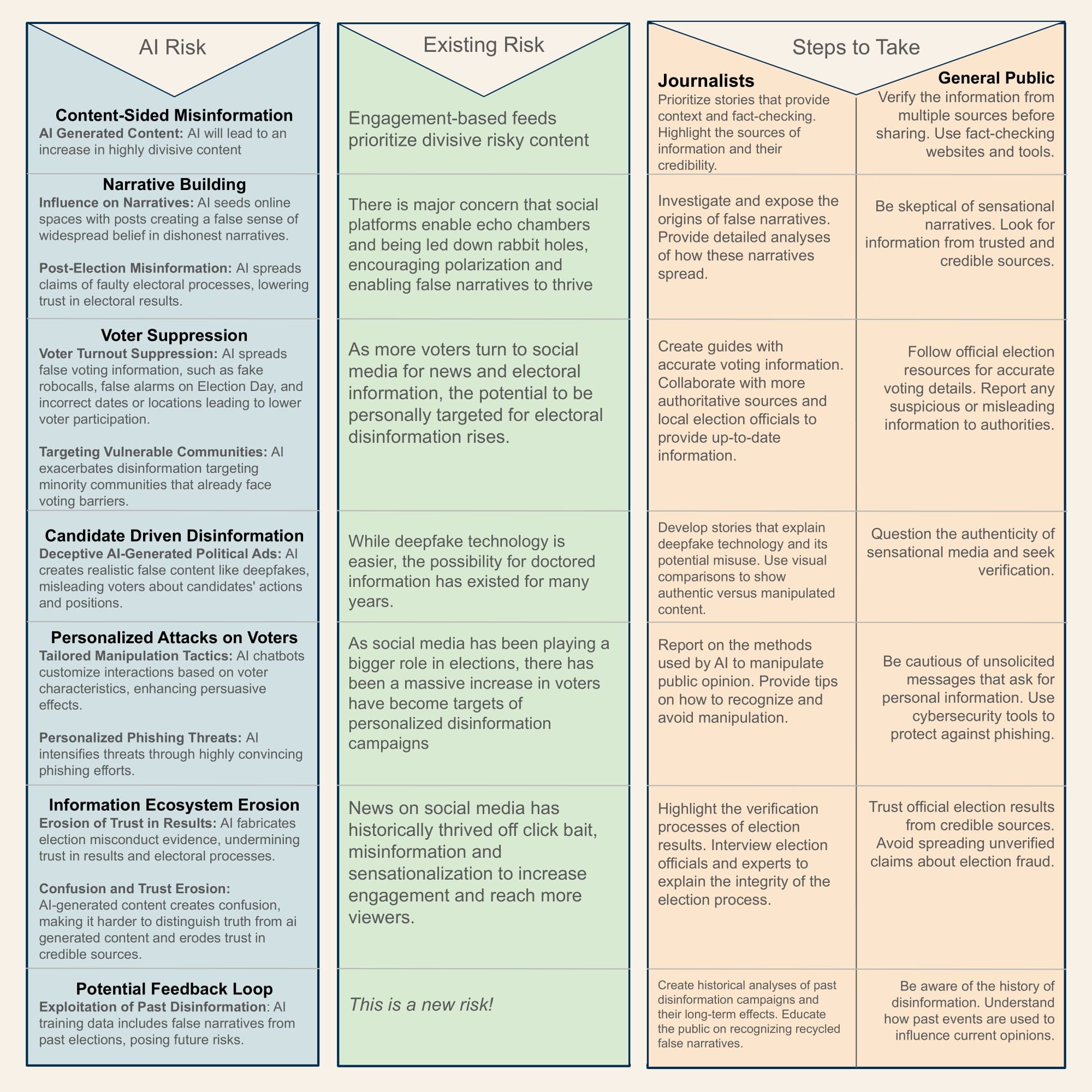

In the chart below, we map many of these AI risks to existing risks that rely on pre-existing systems, and highlight the appropriate steps to take, both as people communicating about online harms and as users of these platforms who are going to encounter more AI-generated content as the election draws nearer.

Given that many of these platforms laid off the trust and safety experts who would be responsible for stopping the spread of this content, it seems likely that the volume of harmful content on these platforms will continue to grow. Some companies are taking steps to make it harder for their tools to generate specific types of risky content, like violent and sexual images, or pictures of political figures, but clever users still succeed in bypassing those guardrails. Open-source models, like Meta’s Llama-3, can be installed locally or run on Hugging Face, and may have the safety features removed. The result is incredibly powerful models that will gladly assist users in creating compromising, fake photos of a disliked politician, or in generating an automated stream of libelous text or voice messages that could be sent to undecided voters. Again, these capacities are terrifying, but they still require distribution to be effective.

Therefore, it is not enough to demand that AI companies build safeguards into their tools; we must also demand that the social media companies that distribute this content to billions of people adopt the product and technical changes that they already know reduce the distribution of harmful content, and that they adequately resource trust and safety and election integrity teams.

Acknowledgment: the authors thank the Integrity Institute AI working group, especially Jenn Louie and Farhan Abrol, for inspiring and providing feedback on an earlier draft of this piece.
