Constructing Transparency Reporting for AI Foundation Models

Tim Bernard / Mar 5, 2024

Alexa Steinbrück / Better Images of AI / Explainable AI / CC-BY 4.0

Last fall, a group of researchers at Stanford’s Center for Research on Foundation Models published the Foundation Model Transparency Index (FMTI) to measure the transparency of the foundation models that are increasingly finding their way into countless business and consumer applications. FMTI indicators cover:

- “upstream” elements, or inputs into models’ development, including data set details, labor, compute, energy, and privacy mitigations;

- “model” elements relating to the product itself, including risks, capabilities, input and output modalities, and size; and

- “downstream” elements associated with end-users, including usage policies, release procedures, monitoring, and redress.

Ten of the most widely used models at the time were evaluated against the index. The results were not encouraging. The top-scoring model, Meta’s Llama 2, met only 54 of the 100 indicators, and the lowest-ranked, Amazon’s Titan Text, scored just 12 points.

In a recent preprint, most of the same team, joined by Arvind Narayanan, director of Princeton’s Center for Information Technology Policy, has taken the next step with this project. Where the index answered the question of what developers should be telling us about their foundation models, the new paper outlines how they should do so: through transparency reports. Existing practices such as datasheets and model cards already provide some important transparency, but the authors propose a more comprehensive and actionable approach.

To draw lessons for foundation model transparency, the authors analyze the strengths and shortcomings of existing transparency reports from social media platforms, an industry that is often compared to AI in discussions of risk and regulation. The paper identifies the key point of comparison:

[A] disruptive and powerful emergent technology came to be widely adopted across societies, thereby intermediating important societal functions such as access to information and interpersonal communication.

The paper describes six desiderata for transparency reports that apply to both domains. Social media transparency reports generally achieve the first three, to varying degrees, but do not yet fully satisfy the last three; for these, the researchers explain how their proposal aims to do better in foundation model reports.

- Centralization: transparency reports put all of the relevant data in one place for easy access by stakeholders such as researchers, journalists, or regulators. For foundation models, the authors propose that the transparency report should be accessible wherever the model is distributed, including downstream of the developer’s own systems.

- Structure: the data is organized to guide readers to the answers to explicit, discrete, high-level questions. The FMTI divides its indicators into three top-level domains and 13 subdomains, and the paper recommends the same structure for the reports.

- Contextualization: narrative material that helps readers, especially those who are not already experts on the product in question, interpret the data appropriately. Recognizing the costs involved, as well as competitive and liability concerns, the FMTI does award points when companies decline to provide data but adequately explain and justify that decision.

- Independent specification: social media platforms have largely been able to choose which data to present in their reports. The EU Digital Services Act (DSA) specifies some of the data to be reported, and the paper notes some useful outcomes from the first round of reports by Very Large Online Platforms (VLOPs). However, the detailed rules and templates for these reports are still in draft form while the European Commission finalizes its implementing regulation on transparency. By contrast, the FMTI already lays out, in some detail, 100 items that all foundation models should be able to report on.

- Standardization: this is closely related to independent specification, but focuses on making reports comparable across the industry. This is difficult for social media because platforms diverge widely in design and usage, and even DSA reports to date vary because specifications have not been enforced. Foundation models, by comparison, are more alike, so it may in principle be considerably easier to expect or demand consistency between models, though the authors note that, currently, “foundation model developers conceptualize model development differently, and the community lacks a common conceptual framework.”

- Explicitness about methodology: this overlaps with contextualization. Calculating the figures in a transparency report often involves multiple choices, but these are not always made explicit. The paper gives specific examples of how methodologies should be stated: by referring to a detailed analysis, by stating the adaptations made to the model to obtain the data (e.g., preparations for running benchmark tests), or by detailing the random sampling methods used.

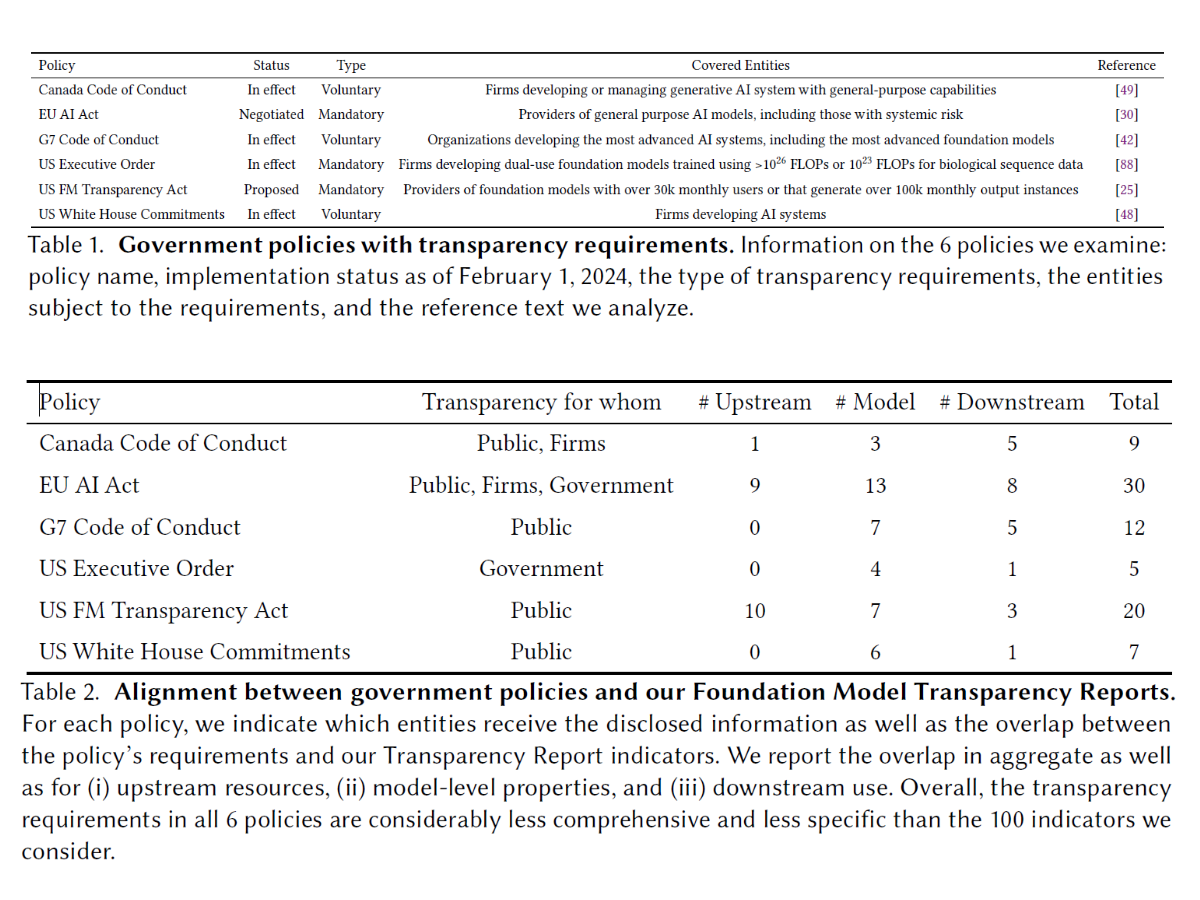

The paper also examines six existing and draft laws and voluntary codes, comparing their mandates (or, in several cases, recommendations) with its proposed approach.

The team’s analysis found that, depending on the policy, between five and 30 of the FMTI’s 100 indicators are required. This highlights how much more comprehensive the proposed Foundation Model Transparency Reports are, as well as the “lack of granularity” in these existing policy documents. (In a few cases, these policies, most notably the EU AI Act, contain transparency requirements that are not part of the FMTI framework.) As with the DSA, the implementation details of the EU AI Act have yet to be determined and, in this case, will depend largely on decisions by national regulators who have yet to be appointed.

The authors acknowledge that transparency reports alone, even when well implemented, are not a panacea: “transparency is not an end unto itself, it is merely a mechanism that may allow further insight into the operations of technology companies in order to better pursue other more tangible societal goals.” And before transparency can advance more concrete social goals, we need to know that the reports are accurate. Yet, as the paper’s discussion of social media notes, third parties are rarely in a position to verify the data in transparency reports. (The DSA’s audit requirements may partly ameliorate this issue for the covered platforms.) The same will be true for foundation model transparency, absent mandates for independent access or audits.

Social media transparency is still in its adolescence. As full implementation of the DSA gets under way, we may soon learn more about how mandatory, well-defined, and audited transparency can inform further policymaking. The paper also draws parallels with a very different but more established domain: financial reporting. Those regimes, however, were implemented only in the aftermath of various scandals, when the specific reporting needs had already become much clearer. As a result, these examples are less instructive than one might wish when developing an approach to transparency that is well targeted at producing the data society actually needs.

Although there is no ideal precedent, the existence of a clear and wide-ranging proposal, developed by experts in the field this early in the era of foundation models, sets the stage for hands-on regulation that may be proportionate to the short- and long-term risks these models pose.
