Comparing Platform Research API Requirements

Emma Lurie / Mar 22, 2023

Emma Lurie is a JD/PhD Candidate at Stanford Law School and the UC Berkeley School of Information.

Platform researchers are living through a strange moment: a pseudo-post-API age. On the one hand, there is no need to convince anyone in Washington, Brussels, or even Silicon Valley that using platform data to understand the relationship between social media and society is of the utmost importance. Yet we keep losing the tools that facilitate researchers’ access to social media data. At the time of writing, the Twitter Academic API is on life support and Meta’s CrowdTangle is not far behind it.

And then, in February 2023, the long-promised TikTok API was released. It was an exciting announcement for those struggling to do large-scale research on TikTok, but researchers quickly identified troubling provisions in its terms of service.

The TikTok saga serves as a reminder that researchers need to build independent infrastructure to study platform content. We cannot depend on platforms’ tenuous interests in transparency, or trust that platforms will continue to feel the political pressure to engage in transparency theater campaigns. At the same time, it is also true that platform-mediated APIs can provide secure, accessible, large-scale and legal access to platform data. And there is cautious optimism in the research community that this mode of data access will continue as proposed bills in the U.S. Congress and the requirements of the E.U.'s Digital Services Act seek to strengthen platform-mediated data access for researchers.

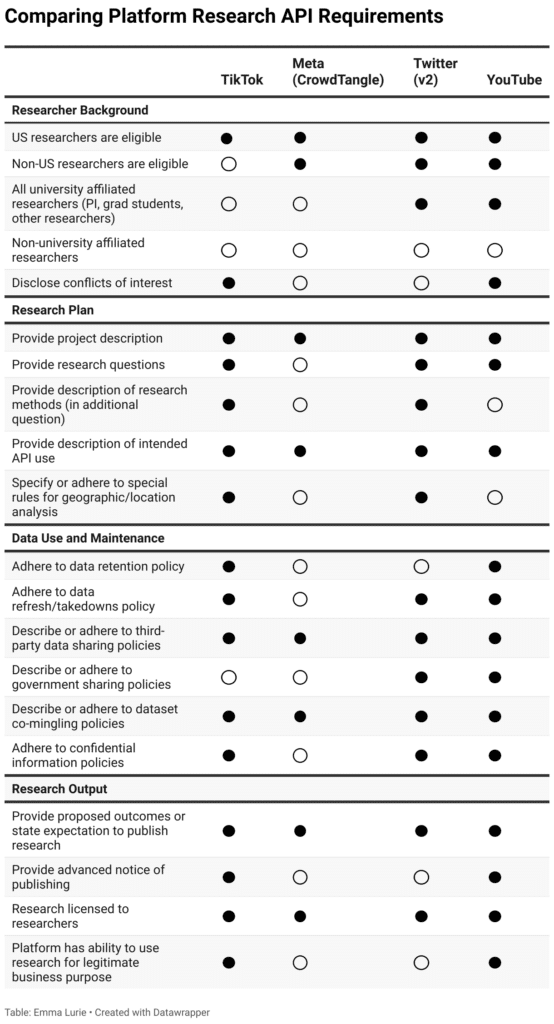

To understand the current landscape, I conducted a comparative analysis of four major social media researcher APIs: TikTok, Twitter, Meta, and YouTube. This comparison demonstrates both where particular platforms are out of step with their peers and where industry norms may need to change.

Method

I conducted an analysis of the 1) applications and 2) terms of service of four major platform research APIs:

- Meta CrowdTangle API

- Twitter Academic v2 API

- TikTok Research API, and

- YouTube Researcher Program API (application from 2023).

I compared the documentation for each of these APIs and analyzed clauses that 1) had been flagged by other researchers as concerns with the TikTok API; or 2) I found to be otherwise noteworthy comparison points. Reading through the terms of service was challenging, as many link to other related documents (e.g., Researcher API Terms of Service linking to Developer API Terms of Service). Companies pack a lot into these terms of service (ToS), so there were some difficult choices about what made it into the chart.

It’s important to recognize that this comparison chart looks at the research API applications and terms of service as written, not at the ways that they are applied. Sometimes a platform does not specify something in the initial application, but sends researchers follow-up questions. Other times, platform policies say one thing, but researchers who do not comply with the policies seem to face no consequences. Therefore, this chart should be taken as a starting point rather than a conclusion about platform research APIs.

1. Researcher Background

Researchers at U.S. academic institutions have access to all major platform APIs. The TikTok API is currently restricted to U.S. academic researchers only. On every platform, researchers must be affiliated with a university to be eligible for the researcher API.

Researchers are sometimes asked to disclose conflicts of interest. The bounds of conflicts of interest vary by platform, but generally include working for the platform or its competitors.

2. Research Plan

All platforms require researchers to describe their research project and how they plan to use the API data. The amount of information requested varies by platform, sometimes greatly: the Twitter API application requires a minimum response of 200 characters per question, while the TikTok API application requires a minimum response of 200 words per question. CrowdTangle asks for a one-paragraph response. Specifics about research questions, particular methods, and the intended use of particular types of data (e.g., sensitive use cases, location data) vary by platform. Twitter and TikTok have the most detailed questionnaires for researchers to fill out about their research plan.

3. Data Use and Maintenance

Some of the platforms ask researchers to provide details about the following categories, while others require researchers accessing the API to agree to standard platform policies:

Data refresh and takedown policies refer to how often researchers must remove content collected from the API that is no longer available on the platform. Sometimes this content has been taken down by the platform and other times it may have been removed by the user themselves. Platform data refresh policies vary. TikTok requires a “refresh” every 15 days, YouTube requires a refresh every 30 days, and Twitter’s terms either require researchers to make “all reasonable efforts” to identify content that has been taken down or use “written request” language. These policies both safeguard users’ right to be forgotten and make platform-compliant content moderation research very difficult.
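
As a rough illustration of what a refresh obligation could mean in practice, the sketch below checks whether a refresh is due and drops records that are no longer available. It is a minimal sketch under stated assumptions, not any platform's prescribed procedure: the record format, the function names, and the `is_still_available` callback are hypothetical, and the intervals are simply the 15-day and 30-day figures cited above.

```python
from datetime import datetime, timedelta
from typing import Callable, Dict, Iterable, List

# Refresh intervals as described in the text above (assumption: they may
# change, so check each platform's current terms before relying on them).
REFRESH_INTERVAL_DAYS = {"tiktok": 15, "youtube": 30}


def refresh_due(platform: str, last_refresh: datetime, now: datetime) -> bool:
    """Return True once the platform's refresh window has elapsed."""
    interval = timedelta(days=REFRESH_INTERVAL_DAYS[platform])
    return now - last_refresh >= interval


def refresh_dataset(
    records: Iterable[Dict],
    is_still_available: Callable[[str], bool],
) -> List[Dict]:
    """Keep only records whose content is still available on the platform.

    `is_still_available` is a hypothetical callback; in a real project it
    would wrap whatever lookup the platform's API actually provides.
    """
    return [record for record in records if is_still_available(record["id"])]


# Hypothetical usage: item "b" has been taken down since collection.
stored = [{"id": "a"}, {"id": "b"}, {"id": "c"}]
removed_ids = {"b"}
if refresh_due("tiktok", datetime(2023, 3, 1), datetime(2023, 3, 16)):
    stored = refresh_dataset(stored, lambda item_id: item_id not in removed_ids)
```

Note that a routine like this also makes the tension described above concrete: once a record is dropped, a study of removed content can no longer use it.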

Data retention policies refer to expectations around how long researchers will retain control over the API data. As others have written, particular institutions and publication venues have requirements that may contradict platform expectations for these rules. For example, Stanford’s IRB requires the archiving of project data for a minimum of three years after the conclusion of the final project. Researcher API applications often frame the data retention as a question (e.g., YouTube asks “How long do you store YouTube API Data?”) rather than mandating a specific data retention term. However, to comply with the data refresh policies, researchers are unable to retain content that has been taken down from the platforms.

Platforms either ask researchers how they will share data with third parties or prescribe the terms of that sharing. Some platforms allow researchers to share with certain collaborators without a separate application; others, like YouTube, prohibit third-party data sharing. Researchers have raised concerns that limiting data sharing hinders replicability and open science principles. Twitter attempts to meet researchers halfway, advising that sharing the identifiers of tweets rather than all of a tweet’s associated data is a way to ensure that qualified researchers can replicate research while limiting privacy harms.
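
The identifier-only approach that Twitter advises can be sketched in a few lines. This is an illustrative sketch, assuming tweets are stored as dictionaries with an `id` field; the `dehydrate` function name and the record layout are hypothetical and not part of any Twitter library.

```python
from typing import Dict, Iterable, List


def dehydrate(tweets: Iterable[Dict], id_field: str = "id") -> List[str]:
    """Return only the tweet identifiers, dropping text, author, and all
    other fields, and removing duplicates while preserving order."""
    seen = set()
    ids: List[str] = []
    for tweet in tweets:
        tweet_id = str(tweet[id_field])
        if tweet_id not in seen:
            seen.add(tweet_id)
            ids.append(tweet_id)
    return ids


# Hypothetical collected data; only the identifiers would be shared.
collected = [
    {"id": 101, "text": "first post", "author": "user_a"},
    {"id": 102, "text": "second post", "author": "user_b"},
    {"id": 101, "text": "first post", "author": "user_a"},
]
shareable_ids = dehydrate(collected)
```

Other researchers would then need their own API access to retrieve the full content from those identifiers, so anything deleted in the meantime stays unavailable to them.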

Most platforms have specific language limiting the sharing of platform data with governments unless legally mandated (and in that case requiring researchers to notify the platform of the sharing). CrowdTangle prohibits sharing raw data with government officials but permits the sharing of results. Twitter and YouTube both require researchers to disclose and update any government data sharing that occurs. While TikTok has stipulations about third-party data sharing, it has no specific policies with respect to government-affiliated entities.

Some platforms also have language forbidding co-mingling platform data with other data sources. Sometimes these policies restrict particular datasets (e.g., TikTok researchers may not scrape data from the platform and combine it with API data) and sometimes they restrict particular analyses of co-mingled datasets (e.g., user re-identification).

Most platforms have policies governing the confidentiality of the platform research API data. Often these policies are in more general Developer API Terms of Service that are linked in the researcher API terms of service.

4. Research Output

All of the research API terms of service make it clear that researchers are expected to publish the research they conduct using platform data. Some even ask researchers to list targeted research venues for the project.

YouTube and TikTok both require researchers to provide advance notice of publication. YouTube’s researcher terms of service ask researchers to “use reasonable efforts to provide YouTube with a copy of each publication at least seven days before its publication. This is meant solely as a courtesy notice to YouTube. YouTube will not have editorial discretion or input in any Researcher Publication.” TikTok’s terms of service say that researchers using the API “agree to provide TikTok with a copy at least thirty days before its publication for courtesy.”

While outside the scope of the chart, Meta’s Social Science One initiative also mandates advance notice, explaining that this is so Meta can “identify any Confidential Information or any Personal Data that may be included or revealed in those materials and which need to be removed prior to publication or disclosure.” That kind of language about confidential information and data is absent from the other research API terms of service in this analysis.

For all platforms, the research itself is licensed to the researchers. However, some platforms, particularly TikTok and YouTube, require a license to some aspects of the work, as well as to researchers’ university affiliation, for business purposes including marketing. Researchers have raised concerns about how this licensing could affect their publishing and ownership of their work.

Conclusion

Researchers leveraging platform APIs seek to answer a wide array of questions, including questions about people, the interaction of platforms and people, and the behavior of platforms themselves. Some of these research questions aim to improve our understanding of societally important topics like mental health, political polarization, and racial discrimination. Researchers should be able to legally access the data that furthers these important questions.

At the same time, platform API guidelines and restrictions can also safeguard users’ rights and expectations about how their data is stored and used. Careless handling of users’ sensitive data, or malevolent actors who seek to harass or target particular groups or individuals, could do significant damage to users and to the research community’s reputation.

The release of the TikTok API raised new questions about the norms and expectations that researchers have of the platforms. I hope this chart provides a jumping-off point for some of those conversations, which should include: 1) what data, access, and questions should be available to researchers from platforms? and 2) what kinds of infrastructure, policies, and norms should researchers build outside of platform-mediated partnerships to study these questions?
