ChatGPT, Safety in Artificial Intelligence, and Elon Musk

Tim Bernard / Jan 3, 2023

Tim Bernard recently completed an MBA at Cornell Tech, focusing on tech policy and trust & safety issues. He previously led the content moderation team at Seeking Alpha, and worked in various capacities in the education sector.

Since November 30, it’s been hard to avoid screenshots of text generated by OpenAI’s ChatGPT system. ChatGPT’s outputs are sometimes wrong despite sounding supremely confident, but that hardly stops the text from reading as if it were written by a person.

Last month, Elon Musk tweeted that OpenAI had access to Twitter’s database, presumably to train its models on the vast amount of text generated by the platform’s users. Back in 2015, Musk was among the founders of OpenAI, then a nonprofit organization, and he has called the output of ChatGPT “scary good.” As the owner of Twitter, however, Musk indicated that he has put OpenAI’s access to Twitter’s database “on pause.” Because GPT-3 was trained on a closed corpus of material, and rumors suggest that training for GPT-4 may already be underway, this pause may have little material effect.

But the change in Musk’s posture towards OpenAI gives us an opportunity to become familiar with the ideas that inspired the lab’s creation, and the differing meanings of “safety” in artificial intelligence (AI). This first requires looking at several strains of thought that seem to be prevalent amongst Silicon Valley’s elite.

Effective Altruism, Longtermism, and AI

Effective Altruism (EA) is based on the simple notion that charitable giving should be evaluated empirically. This approach is exemplified by GiveWell, which rates charities on factors such as how much it costs to save a life; as a result, causes like providing mosquito nets in sub-Saharan Africa rank highly.

The next conceptual step is dubbed “longtermism”: the notion that we should think about ethics by considering the impact of our actions over the (very) long term, since future humans are just as worthy of consideration as those alive today. Some extend their commitment to “strong longtermism,” which concludes that, because there will (or at any rate could) be many more humans alive in the future than today, we should prioritize long-term goods over short-term ones. This includes consideration of existential risks: threats that might end the human species, even in the distant future, take particularly high priority.

What does this have to do with a chatbot that sounds a little too like a real person for comfort?

Oxford philosopher Nick Bostrom, who has been writing about existential risks to humanity since at least 1999, published Superintelligence: Paths, Dangers, Strategies in 2014, a book that explores the danger that an artificial general intelligence (AGI) surpassing human intellectual capabilities might pose to the future of humanity. Musk once recommended the book, calling AI “[p]otentially more dangerous than nukes,” and repeated similar sentiments in an address at MIT later that year.

The following year, Musk and Bostrom participated in a panel at the Effective Altruism Global Conference, where Vox’s Dylan Matthews expressed concern that a segment of the EA movement among the tech elite was focusing on the existential threat of AI to the exclusion of many other challenges. “At the risk of overgeneralizing, the computer science majors have convinced each other that the best way to save the world is to do computer science research,” Matthews said.

OpenAI

Later in 2015, Musk and the venture capitalist Sam Altman, along with a group of investors and other partners, announced the establishment of a new nonprofit research organization, OpenAI. At the time, OpenAI promised to advance “digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.”

OpenAI set out with the explicit goal of developing “human-level” AI. Its 2018 charter uses the language of “AGI,” or “artificial general intelligence,” and states that the organization’s goal is to develop such technology in a safe way. In an interview, Musk and Altman explained that part of their vision for OpenAI was to make its technology available to all, since an open model would, in their view, be safer for the world than a situation in which only a handful of companies have access to the most advanced AI systems. In a comment mirroring the old argument against gun control, Musk said “I think the best defense against the misuse of AI is to empower as many people as possible to have AI. If everyone has AI powers, then there’s not any one person or a small set of individuals who can have AI superpower.” (Incidentally, Bostrom sees some dangers in the open approach, and specifically warned that “[i]f you have a button that could do bad things to the world, you don't want to give it to everyone.”)

Musk Breaks with OpenAI

In February 2018, OpenAI announced that Musk was stepping down from its board, noting that Tesla’s increasing focus on AI could cause future conflicts of interest. It wasn’t until a year later that he mentioned that he “didn’t agree with some of what [the] OpenAI team wanted to do.” Musk has never publicly explained exactly what he disagreed with at the time, but a few possibilities align with some of his other comments. (This analysis takes Musk at his word, though it is well known that he has not always been strictly truthful, and his early interest in OpenAI, his subsequent pronouncements, or both, could be grounded in far less lofty interests, such as crafting his reputation and/or excusing his about-turn.)

1. Realizing that more investment was required to succeed in its mission, OpenAI shifted to a new legal framework in March 2019. Although the new for-profit entity, OpenAI LP, is still controlled by the board of the original nonprofit, and its “primary fiduciary obligation” is to uphold the OpenAI charter, investors are able to receive up to 100 times their investment back in profits. Any excess will go to fund the nonprofit entity, though observers have noted that returning more than 100x would make OpenAI a phenomenally successful venture, even by the standards of celebrated Silicon Valley giants. A few months later, Microsoft invested $1 billion in OpenAI. Musk’s recent tweet about pausing access to Twitter’s database explained: “Need to understand more about governance structure & revenue plans going forward. OpenAI was started as open-source & non-profit. Neither are still true.” The simplest explanation for Musk’s action may be that Twitter is in no position to be effectively giving away data to what is now a for-profit entity, but governance appears to be his chief stated worry.

2. Though it’s not clear how committed OpenAI ever was to making everything it did open-source, some of the code from its projects is available on GitHub. OpenAI raised some eyebrows when it announced in February 2019 that it would not immediately release the full version of GPT-2 or its code to the public because of concerns about misuse (see the “Policy Implications” section of the company’s blog post on the subject). OpenAI then announced a staged release of incrementally larger versions of the model, culminating in the complete release in November 2019. For GPT-3, by contrast, there is a public API, but the code has not been made public.

3. Not only was the code for GPT-3 kept proprietary, but in the fall of 2020 OpenAI signed an exclusive license with Microsoft, permitting one of its largest investors to use the model in its products. Even though the API remained available to others, Microsoft alone would have complete access to the code, to work with directly or adapt to suit its needs. In September 2020, Musk commented, “This does seem like the opposite of open. OpenAI is essentially captured by Microsoft.”

In her in-depth February 2020 profile of OpenAI, Karen Hao describes a company that remained committed, at least in principle, to its original goal of bringing about AGI in a way that is “safe” and that serves society as a whole. Employee compensation is apparently linked to how closely employees adhere to the OpenAI charter. However, Hao also paints a portrait of a culture where secrecy and an emphasis on loyalty (perhaps driven by competition for funding and an obsession with public image) have undermined the company’s aspirations of openness. Musk responded to the article curtly: “OpenAI should be more open imo.”

Visions of AI Safety

A follow-up tweet added, “Confidence in Dario for safety is not high.” Musk was referring to Dario Amodei, then research director at OpenAI. In Hao’s article, Amodei is cited as articulating three safety goals: “making sure that they [AI systems being developed] reflect human values, can explain the logic behind their decisions, and can learn without harming people in the process.” He also mentioned the importance of bringing on safety researchers with different visions. Amodei has since left OpenAI to co-found Anthropic, which describes itself as an “AI safety and research company.” Anthropic received Series A and Series B funding from other EA adherents, including Sam Bankman-Fried, the founder of the recently collapsed cryptocurrency exchange FTX.

It is unclear what about Amodei’s approach to safety bothered Musk, but one line of speculation may connect Musk’s critiques of OpenAI, his interest in AI in the first place, and what we know of his approach at Twitter. As noted above, Musk’s attachment to longtermism appears to have played a significant role in his decision to help found OpenAI. This suggests that his focus is on the long-term risks of a malevolent or otherwise dangerous AGI, rather than the short-term problematic effects of AI in the world today.

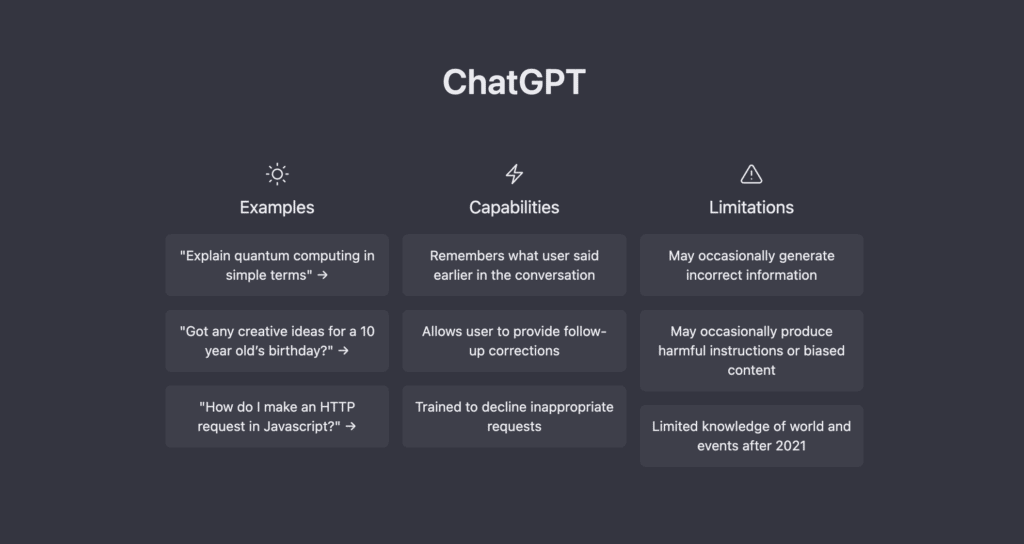

In contrast, and despite its charter’s focus on AGI safety, OpenAI has repeatedly attributed its hesitancy to release products faster to the potential immediate harms from bad actors using its software or from particular use cases. Some early research from Amodei’s Anthropic has also studied how to mitigate these harms. On a non-technical level, OpenAI has imposed policies around the use of its products, including a content policy that covers the main themes of any responsible user-generated content (UGC) platform, and it employs a veteran of policy leadership roles at Facebook and Airbnb as its Head of Trust & Safety. (Users of ChatGPT have had plenty of fun dodging its built-in content controls.)

It is well-documented by now that Musk has been broadly contemptuous of traditional approaches to Trust & Safety and content policy in his role at Twitter, mocking past practices, firing workers, and bringing known spreaders of hate and misinformation back to the platform. It may be that he has little sympathy for the concern over safe uses of new AI products, and is only interested in developing an eventual AGI that will be safe for the existence of humanity.

Prominent AI ethicist Timnit Gebru recently decried the impact of EA philanthropic funds pouring into research entities of all kinds, including OpenAI, that focus on speculative AGI safety while causing real-world harms in the present through their haste to release more and more powerful models without sufficient attention to their drawbacks. Gebru and her collaborators have warned of the dangers of bias embedded in large language models, and of misinterpreting their output as the product of genuine understanding.

Three largely distinct approaches to AI safety can therefore be identified:

- Mitigating the dangers of an eventual superintelligent AGI (identified with Bostrom and the Silicon Valley longtermists, and sometimes referred to as “AI Safety”)

- Striving to ensure that AI is developed and used without perpetuating the bias present in its training data, and that its outputs are understood for what they truly are (“fairness in AI” or “AI Ethics”)

- Preventing AI from generating content that is highly objectionable, directly harmful, and/or opposed to the values of its creators, whether deliberately or inadvertently (the Trust & Safety / content moderation perspective)

These “safety” issues are not the only ethical concerns with AI, especially in specific applications. Relevant to the Twitter/ChatGPT example at hand, there are also complex questions of intellectual property and privacy: should all authors of material included in the training corpus give consent or receive credit or compensation? What are the ramifications of automating the believable impersonation of individuals?

When the agenda for AI safety is being so loudly set by Musk and others in his orbit, it is all the more important to recall that current AI implementations are already replete with ethical and legal pitfalls, as laid out in the recent White House Blueprint for an AI Bill of Rights. Researchers such as Gebru argue that progress can only come with a precise awareness of the potential harms, careful investigation, and scrutiny at every step. And, for responsible rollout of AI products to wide audiences, a strong Trust & Safety function will continue to be essential.
