California Signed A Landmark AI Safety Law. What To Know About SB53.

Cristiano Lima-Strong / Sep 30, 2025

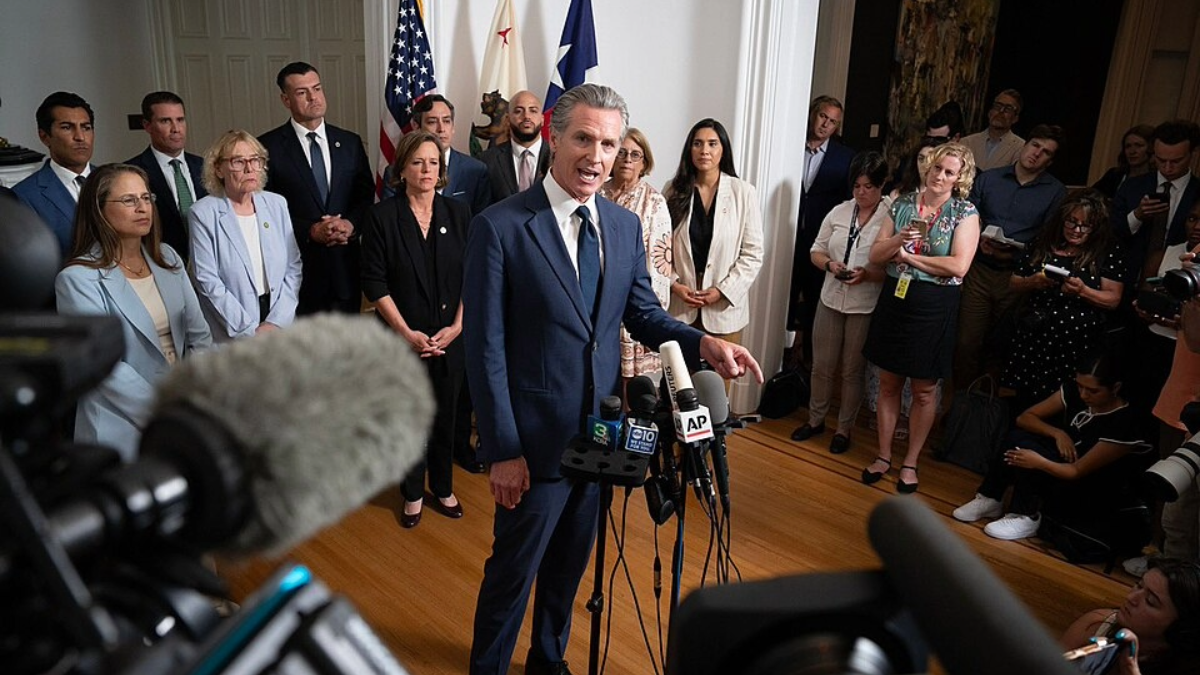

California Gov. Gavin Newsom (D) speaks at a press conference. (Governor Newsom Press Office)

California Gov. Gavin Newsom (D) on Monday signed into law the Transparency in Frontier Artificial Intelligence Act, known as SB53, capping off a tumultuous year of negotiations over AI regulation in the state and ushering in some of the most significant AI rules in the United States.

The law, the subject of intense debate in Sacramento and in Washington, is poised to become a major marker in the national fight over AI safety and could serve as a template for other states, unless federal lawmakers ultimately preempt such state rules.

“With a technology as transformative as AI, we have a responsibility to support that innovation while putting in place commonsense guardrails to understand and reduce risk,” California State Sen. Scott Wiener (D), who introduced the bill, said in a statement. “With this law, California is stepping up, once again, as a global leader on both technology innovation and safety.”

Here’s what you need to know about the measure and how it came to pass:

How we got here

While SB53 was first introduced in January, its path to becoming law began in earnest last year when, in February 2024, Wiener introduced a more sweeping bill aimed at preventing risks from leading AI models: SB1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act. As initially written, the bill would have required AI developers to conduct safety tests before rolling out any of their most powerful models, including making a “positive safety determination” that a model “lacks hazardous capabilities.”

The legislation, which would have imposed the most stringent AI safety regulations in the country had it been signed, quickly became a political lightning rod and drew intense pushback from tech industry groups, who argued it would stifle innovation in the burgeoning AI sector. Several prominent members of California’s congressional delegation, including former House Speaker Nancy Pelosi, echoed those concerns, dealing a blow to the bill’s prospects of becoming law.

The bill nonetheless sailed through the state legislature with broad support, but Newsom vetoed it last September, writing in a message to lawmakers that by focusing only on the most advanced AI models, the bill “could give the public a false sense of security about controlling this fast-moving technology.” Instead, he said, the state needed to strike “a delicate balance.”

To advance the negotiations, Newsom convened a group of researchers, dubbed the Joint California AI Policy Working Group, to draft a report outlining recommendations on AI regulation. When Wiener unveiled SB53, SB1047’s successor, in February, he said in a statement that he was “closely monitoring the work of the Governor’s AI Working Group.”

The working group released its final report in June, calling for “targeted interventions” that would balance the technology’s “benefits and material harms.” Wiener’s office then amended the bill to “more closely align” with the report’s recommendations. The updated bill cleared California’s legislature in September and was signed by Newsom later that month.

“California has proven that we can establish regulations to protect our communities while also ensuring that the growing AI industry continues to thrive,” Newsom said in a statement on SB53 this week. “This legislation strikes that balance.”

What the law will do

The law will require “large frontier developers,” the companies behind the most advanced AI models, to publish on their websites a framework describing how they approach a range of safety issues, including how they incorporate standards and best practices, how they assess “catastrophic” risks and how they respond to “critical safety incidents.” It defines frontier models using a threshold on the computing power used to train them, and it defines “large” frontier developers as those generating more than half a billion dollars in annual revenue.

The law also requires companies to publish transparency reports detailing how they plan to uphold the framework, released before or at the same time as they deploy a “new frontier model” or an updated version of an existing one. Companies must also regularly send California’s Office of Emergency Services “a summary of any assessment of catastrophic risk.”

Additionally, SB53 makes it easier for both members of the public and company whistleblowers to report potential safety risks. The law requires the state’s Office of Emergency Services to set up a mechanism for members of the public to report critical safety incidents. And it prohibits companies from adopting policies that prevent employees from disclosing, or from otherwise restricting or retaliating against employees for disclosing, information they have “reasonable cause” to believe shows that a developer poses a “specific and substantial danger to the public health or safety resulting from a catastrophic risk.”

The law also calls for the creation of a consortium tasked with developing a framework for a public “cloud computing cluster,” known as “CalCompute,” intended to advance the “development and deployment of artificial intelligence that is safe, ethical, equitable, and sustainable.”

How the law is being received

SB53 appears to have drawn support from a broader coalition than SB1047 did, including both consumer advocates and some tech companies.

“SB 53’s passage marks a notable win for California and the AI industry as a whole. Its adaptability and flexible framework will be essential as AI progresses,” Encode AI’s Sunny Gandhi said in a statement.

Sacha Haworth, executive director of the advocacy group Tech Oversight California, called SB53 “a key victory for the growing movement in California and across the country to hold Big Tech CEOs accountable for their products, apply basic guardrails to the development and deployment of AI, and protect whistleblowers’ ability to step forward.”

In contrast to SB1047, industry reactions to SB53 have been more mixed.

Anthropic, the developer of the AI chatbot Claude, came out in support of the measure in recent weeks. Meta called the new law “a positive step” toward “balanced AI regulation” and OpenAI said it was “pleased to see that California has created a critical path toward harmonization with the federal government,” though neither openly championed the bill, according to Politico.

Still, tech industry groups that count some of those companies as members, such as CCIA and the Chamber of Progress, railed against the measure and called on Newsom to veto it.

Collin McCune, head of government affairs at Andreessen Horowitz (a16z), said that while SB53 “includes some thoughtful provisions that account for the distinct needs of startups … it misses an important mark by regulating how the technology is developed — a move that risks squeezing out startups, slowing innovation, and entrenching the biggest players.”

McCune also appeared to tease the prospect that federal lawmakers could ultimately preempt the California standards, adding, “Smart AI regulation can help us win the AI race, but we need the federal government to lead in governing the national AI market. Recent conversations with the House and Senate about a federal AI standard are an encouraging step forward. More to come.”
