Beyond Regulation: What 500 Cases Reveal About the Future of AI in the Courts

Isadora Valadares Assunção / May 20, 2025

Isadora Valadares Assunção is a sociotechnical AI researcher currently studying law at the University of São Paulo, where she coordinates Techlab, a study group focused on law and technology.

Across the world, regulation of artificial intelligence (AI) is uneven. Some jurisdictions already have comprehensive regulations, others rely only on sector-specific rules, and still others resist or delay regulation entirely. The European Union’s recently enacted AI Act, for instance, provides a horizontal, risk-based model that cuts across sectors and technologies. In the United States, by contrast, resistance to binding legislation persists, including calls for a ten-year moratorium on state AI regulation.

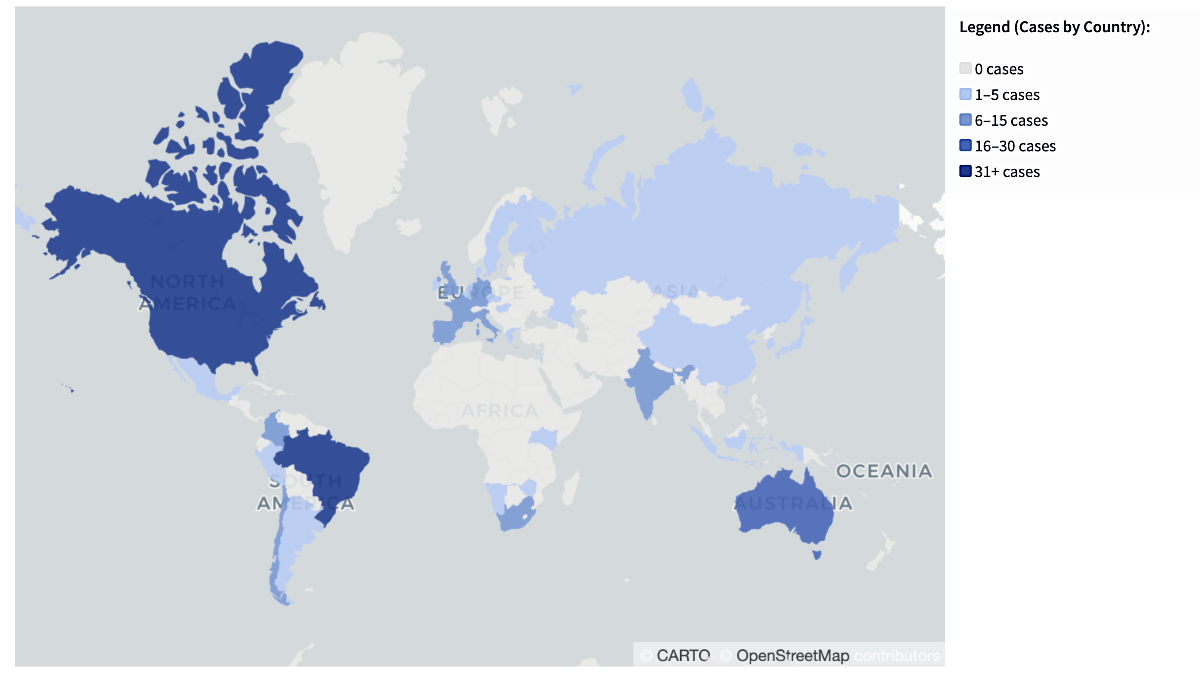

Source: AI on Trial, author's analysis.

These dynamics have framed the global conversation on AI governance as a matter of prospective legislation and policy design. But this emphasis obscures a critical and underexamined fact: courts are already regulating AI. Across the world, judges, data protection authorities, and administrative tribunals are actively resolving disputes in which AI systems play a central role, whether in automated immigration decisions, biometric surveillance, or data processing for model training. In doing so, they are establishing de facto rules, boundaries, and precedents that directly affect how AI technologies are developed, deployed, and challenged.

This article presents findings from AI on Trial, an original empirical project aimed at surfacing this overlooked domain. The project tracks and categorizes litigation involving AI technologies across jurisdictions, highlighting the ways in which judicial and administrative bodies function as normative actors in the governance of AI. This role spans contexts ranging from those in which courts interpret existing regulation to those in which legislative or regulatory responses are nonexistent, fragmented, slow, or contested.

The current version of the AI on Trial dataset includes 500 cases from 39 countries and jurisdictions, encompassing both judicial decisions and administrative rulings. These cases are drawn from a wide array of legal domains and involve actors ranging from private individuals and corporations to government agencies and transnational platforms. To be included in the dataset, a case must involve a direct or materially significant use of AI, rather than a mere mention of it. Each case was manually classified and coded by jurisdiction, court or administrative authority, AI-related issue (e.g., bias, transparency, or intellectual property), and year. Each entry also includes a brief case summary and annotated excerpts from the main decisions.

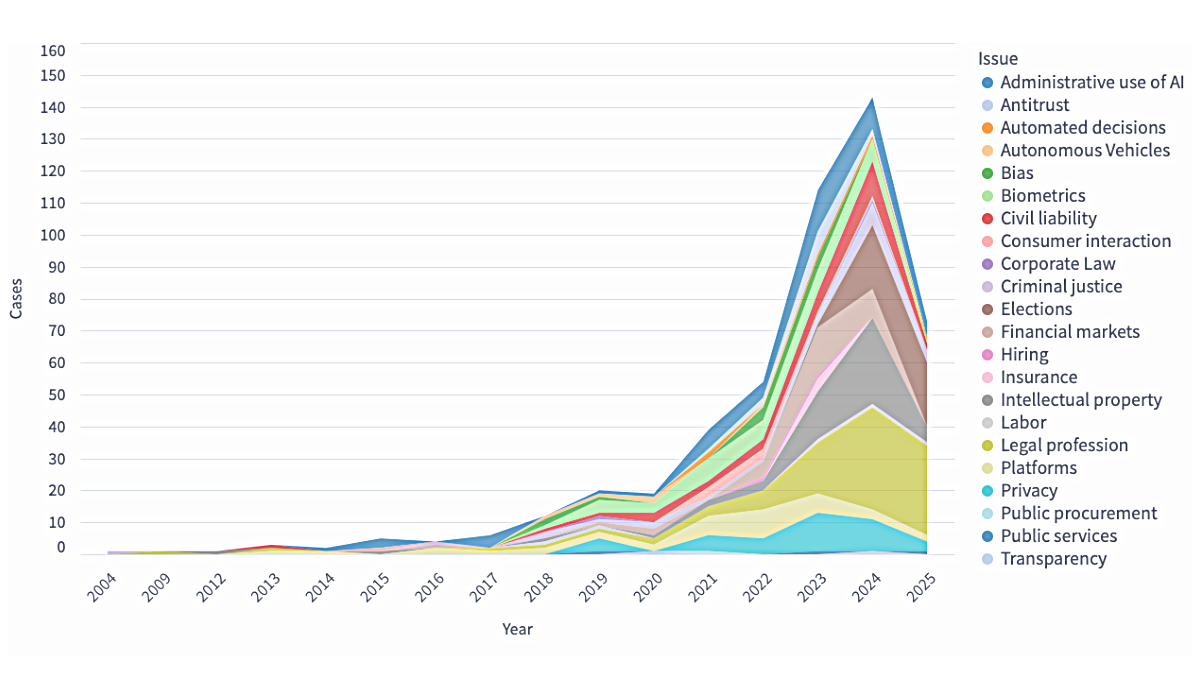

Across the 500 cases analyzed in the AI on Trial dataset, three issue categories alone account for 39.4% of all disputes: cases concerning the legal profession (92 cases), intellectual property (56 cases), and administrative use of AI (49 cases). These categories are not just quantitatively dominant; they represent the most visible friction points between AI systems and the legal frameworks that seek to govern them.
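The 39.4% figure follows directly from the three category counts and the 500-case total. As a quick check, here is a minimal Python sketch that uses only the numbers reported in this article; the dictionary labels are illustrative and are not the dataset's actual field names or coding scheme.

```python
# Category counts as reported in the text. The labels are illustrative only,
# not the AI on Trial dataset's actual coding scheme.
top_categories = {
    "legal profession": 92,
    "intellectual property": 56,
    "administrative use of AI": 49,
}
total_cases = 500

# Combined number of cases in the three leading categories.
combined = sum(top_categories.values())

# Share of all cases, as a percentage rounded to one decimal place.
share_pct = round(100 * combined / total_cases, 1)

print(f"{combined} of {total_cases} cases = {share_pct}%")
```

Running it prints `197 of 500 cases = 39.4%`, matching the share stated above.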

The growth of litigation in the legal profession category is particularly striking. From just three recorded cases in 2021, the number jumped to 32 in 2024, with another 28 already documented in early 2025. These cases involve both the use of AI by legal actors and its citation in judicial reasoning. A clear example is Ross v. United States (2025), in which the DC Court of Appeals cited OpenAI’s ChatGPT in both majority and dissenting opinions to help interpret the notion of “common knowledge” in an animal cruelty case. In Brazil, a lawyer filed a petition containing 43 fabricated precedents hallucinated by a generative AI model, leading to the case’s dismissal and a disciplinary inquiry. These examples illustrate how AI tools are reshaping epistemic authority and professional norms within legal practice, sometimes subtly and at other times problematically.

Longitudinal evolution of issues being litigated. Source: AI on Trial, author's analysis.

Intellectual property disputes have also surged. From fewer than five cases annually before 2022, the category peaked at 27 cases in 2024, driven primarily by the rise of generative AI. These disputes commonly involve claims that training data was scraped without authorization, infringing copyright or moral rights. In Getty Images v. Stability AI, currently pending in multiple jurisdictions, Getty Images argues that using millions of its copyrighted images in model training violates intellectual property law.

The administrative use of AI represents a longer-standing but no less important area of litigation. Cases in this category date back to 2014 but rose significantly between 2019 and 2023, peaking at 13 cases in 2023. These cases often concern automation in public services, such as immigration, benefits, or regulatory enforcement. In Haghshenas v. Canada (2023), the applicant challenged a visa denial influenced by Chinook, a tool used to streamline immigration processing. While the court upheld the process on the grounds that a human officer retained final discretion, the case revealed the murkiness of hybrid decision-making and the difficulty of pinpointing accountability in systems where humans rely heavily on machine-generated summaries.

These trends reveal not only where litigation is happening, but also how judicial systems are being drawn into the front lines of AI governance. In each of the three leading categories, courts are not merely reacting to harms; they are constructing new legal boundaries for emerging technologies, defining what counts as fair process or lawful innovation in contexts where prior legal guidance is often ambiguous or nonexistent. If we are to govern AI in a way that reflects social realities rather than abstract principles, we cannot afford to treat the courtroom as a footnote. The law is already speaking. The task now is to listen carefully, and to make sure its judgments are part of the conversation we are building about the future of technology and democracy.
