Auditing Amazon Recommender Systems: Insights Into Risk Assessment Under The Digital Services Act

Paul Bouchaud, Raziye Buse Çetin / Jan 25, 2024

Amazon is the most popular e-commerce platform in Europe, with approximately 180 million active users. The European Commission has designated it a Very Large Online Platform (VLOP) under the Digital Services Act. Although Amazon initially contested this designation, it is now expected to submit risk assessment reports to the European Commission. Given that neither the platform's risk assessment nor its answer to the Commission's request for information has been publicly disclosed to date, we, as civil society actors, sought to shed light on how Amazon's bookstore algorithms may pose systemic risks to civic discourse and the diversity of viewpoints.

Even before the advent of social media, online marketplaces sought to personalize users' experience. Recommender systems allowed e-commerce platforms to adjust their digital shelves to align with users' supposed interests based on behavioral data. Collaborative filtering, an approach based on the idea that our preferences often align with those of others, emerged as a highly effective method to increase sales. Amazon has capitalized on item-based collaborative filtering since the late 1990s, with “Customers who bought this also bought” recommendations driving an estimated 30% of the traffic for books and ebooks in 2015.
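To make the idea concrete, here is a minimal sketch of co-purchase-based item recommendations. It is an illustration only, not Amazon's implementation: the function name `also_bought`, the toy baskets, and the simple co-occurrence count (with alphabetical tie-breaking) are all assumptions made for this example.

```python
from collections import Counter, defaultdict
from itertools import combinations


def also_bought(baskets, top_k=2):
    """For each item, list the top_k items most often bought alongside it.

    Hypothetical helper: ranks by raw co-purchase count, ties broken by name.
    """
    co_counts = defaultdict(Counter)
    for basket in baskets:
        # Count every unordered pair of distinct items in the same basket.
        for a, b in combinations(sorted(set(basket)), 2):
            co_counts[a][b] += 1
            co_counts[b][a] += 1
    return {
        item: [
            other
            for other, _ in sorted(
                counts.items(), key=lambda kv: (-kv[1], kv[0])
            )[:top_k]
        ]
        for item, counts in co_counts.items()
    }


# Toy purchase histories (invented for illustration).
baskets = [
    ["gardening_a", "gardening_b"],
    ["gardening_a", "gardening_b", "gardening_c"],
    ["gardening_a", "gardening_c"],
    ["manga_a", "manga_b"],
    ["manga_a", "manga_b", "gardening_c"],
]

recs = also_bought(baskets)
for item, suggestions in sorted(recs.items()):
    print(item, "->", suggestions)
```

Even in this toy setting, the items most often bought together end up recommending one another, which is the dynamic the rest of this piece examines at scale.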

By selecting a handful of items based on what "other customers also bought" from an inventory that spans millions, recommenders present a narrow range of items. While this reduction in diversity may seem inconsequential or even desirable in the context of consumer goods – after all, who wants fireworks recommended while shopping for baby safety gates – it becomes a substantial threat to the diversity of viewpoints when applied to books, in particular those discussing societal issues.

Human behavior tends to favor information that confirms existing beliefs while avoiding contradiction. Consequently, like-minded books are likely to be purchased by the same users, which, in turn, leads collaborative filtering to recommend them together. The system essentially encourages users interested in one item to explore the other, creating a self-fulfilling prophecy where the two items are increasingly "bought together." This approach tends to steer users toward an idealized 'average' behavior, erasing the subtleties and diversity in content consumption. Fortunately, the research community identified such obvious feedback loops early on and designed recommender systems with increased diversity.

Amazon is often examined for pricing and product ranking, and is less studied as a platform impacting civic discourse. With over 90% of self-published books hosted on Amazon in the US in 2018, the platform houses content akin to that on social media platforms. We argue that Amazon's use of collaborative filtering in its bookstore is an editorial choice, warranting closer examination regarding systemic risks outlined in the Digital Services Act.

Our research explored the recommendations provided by two major bookstores: Amazon.fr, serving an average monthly active user base of 34.6 million in France, and Fnac.com, the largest French bookstore. For both, we collected the "Other customers also bought" suggestions that a logged-out user without browsing history would encounter upon visiting the bookstore, for tens of thousands of books, mainly non-fiction, related to social issues.

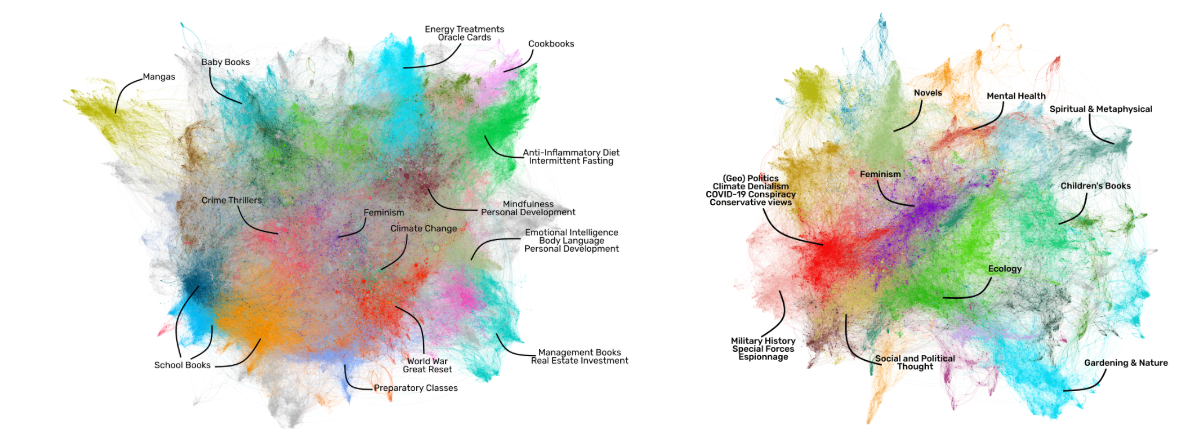

Our findings, detailed in the article “Browsing Amazon's Book Bubbles” and the report “The Amazing Library,” revealed the presence of tightly-knit communities of semantically similar books. Within these communities, users find themselves ensnared in a limited web of recommendations. Admittedly, the range of topics across these book landscapes is vast, from gardening to metaphysics and geopolitics. However, users are immersed in one part of these landscapes and, by design, remain unaware of the rich diversity of topics and viewpoints available. Once a user enters a community, typically by clicking on a search result, tens of successive clicks on book recommendations are needed to break free from this initial community: on average, 24.9 clicks on Amazon.fr and 20.0 on Fnac.com, with a median of 11 clicks on both platforms.
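One way to read the "clicks to break free" figures is as the length of a random browsing session that follows recommendations until it leaves its starting community. The sketch below illustrates that reading on a toy graph. It is an assumption about how such a measure could be computed, not a description of the method used in the article or the report; the function `clicks_to_escape`, the graph, and the community labels are hypothetical.

```python
import random
from statistics import mean, median


def clicks_to_escape(recs, community, start, rng, max_clicks=1000):
    """Follow random recommendations from `start` and count the clicks
    needed to land on a book outside the starting community.

    Returns None if the walk hits a dead end or never leaves
    within max_clicks.
    """
    home = community[start]
    current = start
    for clicks in range(1, max_clicks + 1):
        neighbours = recs.get(current, [])
        if not neighbours:
            return None
        current = rng.choice(neighbours)
        if community[current] != home:
            return clicks
    return None


# Toy recommendation graph: book -> recommended books (invented data).
recs = {
    "a1": ["a2", "a3"],
    "a2": ["a1", "a3"],
    "a3": ["a1", "b1"],  # the only link leading out of community A
    "b1": ["b2"],
    "b2": ["b1"],
}
community = {"a1": "A", "a2": "A", "a3": "A", "b1": "B", "b2": "B"}

rng = random.Random(0)  # fixed seed so repeated runs give the same walks
samples = [clicks_to_escape(recs, community, "a1", rng) for _ in range(1000)]
samples = [s for s in samples if s is not None]
print("mean clicks:", mean(samples), "median clicks:", median(samples))
```

A gap between mean and median, like the one reported above, would be consistent with a skewed distribution in which a minority of sessions stay inside their community for a very long time.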

Book recommendation landscapes (left) on amazon.fr [48,636 books, 391,664 recommendations]; (right) on fnac.com [25,103 books, 232,129 recommendations]. The book communities are color-coded. Interactive versions of the networks are available at explore.multivacplatform.org and explore.multivacplatform.org/fnac/.

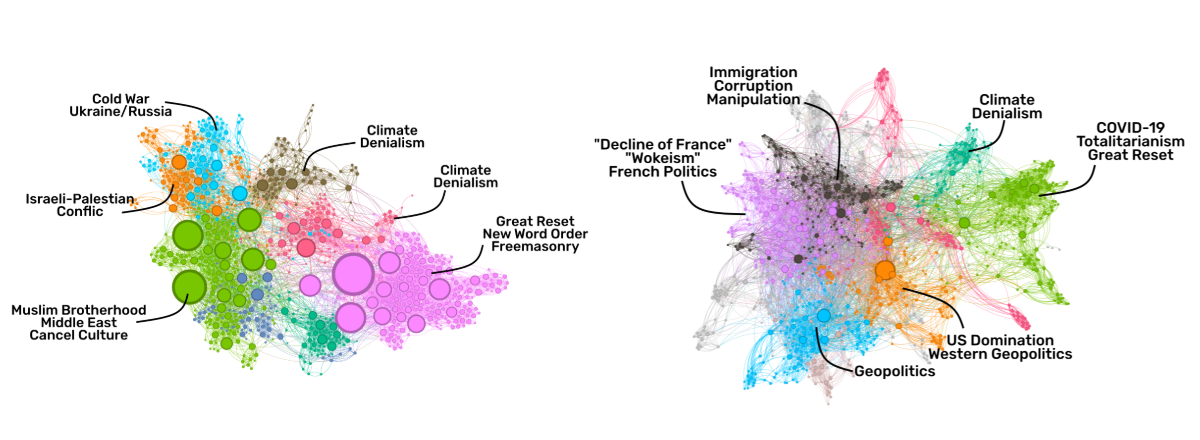

At first glance, when examining recommendations at the topic level, it is legitimate to argue that a recommender system suggesting additional gardening books when a customer is shopping for such literature, rather than diverting towards manga, is not only acceptable but also desirable. Nevertheless, the repercussions of a lack of diversity in recommendations become palpable when we delve into books addressing contentious societal topics. On both Fnac.com and Amazon.fr, we identified a large community of books propagating conspiracy theories, contrarian and politically skewed viewpoints. This eclectic assortment ranges from climate denialism to narratives surrounding the "New World Order," COVID-19, and conservative views on gender issues.

When one explores a climate denialist book on Amazon, the platform suggests antivax conspiracy books, in addition to other climate denialist content. Collaborative filtering explains the rationale behind these recommendations: an examination of the user reviews for these books reveals that books recommended together tend to be purchased or reviewed by the same users. Further details are available in the article “Browsing Amazon's Book Bubbles.”

These recommendations exemplify how a lack of viewpoint diversity in suggestions can detrimentally impact civic discourse. Through their recommender systems, these two bookstores skew users' perceptions of ongoing discussions, potentially leading them towards misleading, if not outright misinformative, books.

The search engine is another pivotal part of the user experience on these platforms. "The Amazing Library" report, a collaborative effort between AI Forensics and CheckFirst, found that as of autumn 2023, searches for queries like "IPCC" or "COVID" in Belgium and France yielded a substantial number of misleading results. These search results funneled users toward a community dominated by conspiracy literature. Amazon's search algorithm is opaque, but when the results are ranked by decreasing average user review, a seemingly objective criterion, instead of by Amazon's own ranking system, we observed an even greater prevalence of scientifically inaccurate content.

The use of user reviews or purchase patterns as a basis for recommendations, while seemingly impartial, overlooks complex socio-technical feedback loops. Algorithms do not only reflect but also shape user behavior; similarly, users do not passively consume content but may in fact try to game the algorithm to suit their preferences. Addressing the potential systemic risks these algorithms pose, risks that extend to the exercise of fundamental rights such as freedom of information, the pluralism of media, and the integrity of civic discourse and electoral processes, requires more than technical tweaks. It demands a fundamental shift in perspective.

Amazon isn't just selling products; it shapes cultural discourse. The books it recommends reach millions, thereby influencing public discourse. This influence demands a thorough consideration of the societal impact of its recommendations. Beneath the veneer of neutrality projected by recommender systems, where 'user biases' and 'bad actors' are invoked to justify misleading recommendations, lies a crucial editorial decision. This decision pertains to the implementation of recommender systems that, by design, promote like-minded content to users, instead of alternatives such as bridging systems. Amazon's recommender may fully comply with both Article 27 of the Digital Services Act, by being clear about the parameters used (here, simply co-purchase patterns), and Article 38, by providing recommendations not based on profiling as defined in the GDPR, and still pose systemic risks to civic discourse in the Union.

Beyond marketplaces and Amazon, the algorithmic machinery that online platforms invest countless resources in developing does not aim to ensure pluralism of views and other democratic values, but rather to maximize profit. To effectively mitigate the systemic risks identified in the Digital Services Act, it is imperative to acknowledge the misalignment between the objectives of these platforms and pluralism of views, and to underscore the need for systemic changes rather than mere technical tweaks.
