Assessing Support for Child Online Safety Legislation at Senate Hearing with Big Tech CEOs

Gabby Miller, Haajrah Gilani / Feb 1, 2024

Gabby Miller reported from New York; Haajrah Gilani reported from the Capitol in Washington, D.C.

Mark Zuckerberg, CEO of Meta Platforms Inc., center right, addresses the audience, including parents of children injured or lost in events involving social media, during a Senate Judiciary Committee hearing in Washington, DC, US, on Wednesday, Jan. 31, 2024. Photographer: Kent Nishimura/Bloomberg via Getty Images

When Christine Almadjian began using social media, it was for lunchtime selfies and retweeting posts about One Direction. But she soon found herself in private chat rooms with predators who would continue to loom around through new or duplicate accounts.

“As children, we did not have the foresight to dangerous situations the way we might now,” said Almadjian at a senator-led press conference following the hearing. Almadjian, who is a member of the End Online Sexual Exploitation and Abuse of Children Coalition, told Tech Policy Press that she hears people joke about online sexual exploitation and tries to approach situations lightheartedly, “but it really is something that a lot of us suffered with and suffered from.”

This suffering was center stage at Wednesday’s Senate Judiciary Committee hearing on "Big Tech and the Online Child Sexual Exploitation Crisis," where some of Silicon Valley’s biggest tech executives were expected to answer questions from lawmakers on their companies’ actions to protect children from sexual exploitation online. The Dirksen Senate Office Building was packed with families holding photos of children they lost to alleged online exploitation and young advocates wearing black t-shirts that read, “I'm worth more than $270,” a reference to leaked internal communications between Meta employees that assigned a “Lifetime Value (LTV)” of a 13-year-old at “roughly $270 per teen.”

Related Reading:

- Backgrounder: US Senate Judiciary Committee to Grill Tech CEOs on Child Safety (Tech Policy Press)

- Transcript: US Senate Judiciary Committee Hearing on "Big Tech and the Online Child Sexual Exploitation Crisis"

Despite Congress hosting nearly forty hearings on children and social media since 2017, Senate Judiciary Committee leaders promised Wednesday that this would be one of the last before enacting meaningful legislative change. Little new information, however, was gleaned. And despite many senators announcing commitments during the hearing to hold Big Tech accountable, none provided a clear-cut timeline for moving the proposed legislation forward.

Witnesses included:

- Linda Yaccarino, Chief Executive Officer, X Corp. (written testimony)

- Shou Chew, Chief Executive Officer, TikTok Inc. (written testimony)

- Evan Spiegel, Co-founder and Chief Executive Officer, Snap Inc. (written testimony)

- Mark Zuckerberg, Founder and Chief Executive Officer, Meta (written testimony)

- Jason Citron, Chief Executive Officer, Discord Inc. (written testimony)

The executives faced hours of grilling, with Meta’s Mark Zuckerberg and TikTok’s Shou Chew largely on the receiving end of Senators’ frustrations during the nearly four-hour hearing. This often gave cover to Discord, Snap, and X, whose CEOs all left Capitol Hill relatively unscathed.

A series of flashpoints marked the hearing. Sen. Marsha Blackburn (R-TN) told Zuckerberg that it appears Meta is trying to be the “premier sex trafficking site,” which was met with rousing applause; Sen. Ted Cruz (R-TX), who was nearly yelling by the end of his seven minutes, pressured Zuckerberg to explain why a ‘see results anyways’ option existed for a search involving content that ‘may contain child sexual abuse’; and Sen. Richard Blumenthal (D-CT) asked Zuckerberg if he believes he has the “constitutional right to lie to Congress?”

One particularly tense and headline-grabbing moment came amid a line of questioning from Sen. Josh Hawley (R-MO). “You're on national television. Would you like now to apologize to the victims who have been harmed by your product? Show 'em the pictures,” Sen. Hawley told the row of families sitting behind Zuckerberg, who held up pictures of their lost loved ones. Zuckerberg turned around, offered his condolences, and pledged the platform would “continue its industry-leading efforts” to protect children online. The moment captured Zuckerberg, the only CEO to have a table all to himself, on both a physical and metaphorical island at the hearing.

Mia and Todd Minor were among the families in the crowd who received Zuckerberg’s “best version of an apology.” While they weren’t particularly impressed with the statement, Todd Minor felt good about one thing: how exhausted Zuckerberg looked. “It’s wearing on them [tech executives], and that’s what needs to happen,” Todd Minor told Tech Policy Press.

Much of the Senators’ focus was on internal leaked exchanges between Zuckerberg, Meta’s President of Global Affairs Nick Clegg, and the Head of Instagram, Adam Mosseri. These communications were used as the basis for lawsuits filed by a bipartisan coalition of 42 state attorneys general over allegations Meta knowingly designed and deployed harmful features that purposefully addict children and teens. The documents show that despite months of urging from high-level executives and Meta’s research team to invest more into well-being and safety improvements — a promise Meta’s Director of Global Safety Antigone Davis made to Congress in her 2021 testimony — Zuckerberg instead responded to “other pressures and priorities.”

In Zuckerberg’s opening statement and at least three instances in the hearing, he redirected Senators to the “shifting” science regarding mental harms online. He repeatedly cited a National Academies of Science (NAS) report that rejected the idea that social media causes changes in adolescent mental health at the population level. Following the hearing, Meta tripled down on the argument in a press release by again citing the NAS study, which calls for legislative action like revisions to the Children’s Online Privacy Protection Act – a reform not mentioned in the hearing.

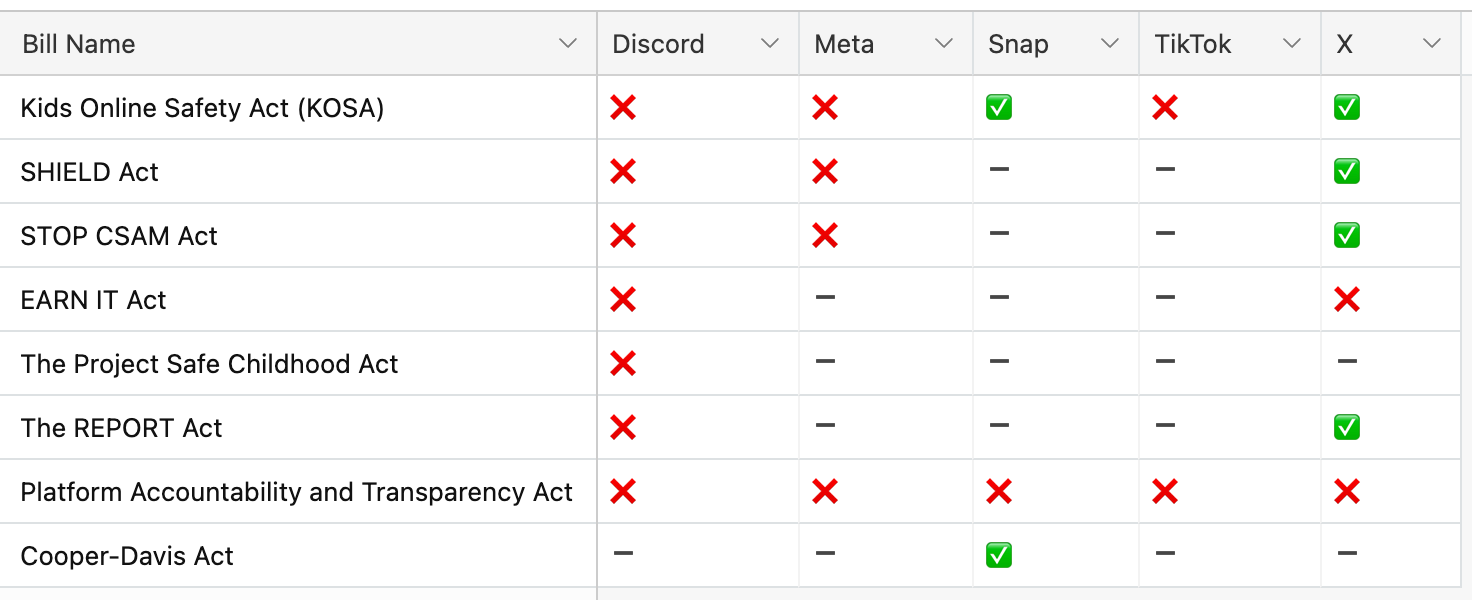

Perhaps one of the most useful lines of questioning came from Senators Amy Klobuchar (D-MN), Chris Coons (D-DE), and Blumenthal, who demanded the tech CEOs go on the record stating which bills they did or did not support. The questions should not have come as a surprise to CEOs as a number of bills designed to prevent the exploitation of kids online have emerged from the Senate Judiciary Committee within the last year, including the STOP CSAM Act, EARN IT Act, SHIELD Act, Project Safe Childhood Act, and REPORT Act.

Related Reading:

- 2024 Set To Be Crucial Year For Child Online Safety Litigation (Tech Policy Press)

There’s also a broader push to pass the Kids Online Safety Act (KOSA), a bipartisan bill initially introduced by Senators Blumenthal and Blackburn in 2021 that sets a 'duty of care' for social media platforms to protect children under 17 and mandates that platforms provide more reporting tools for parents, among other provisions. Despite KOSA passing out of the Senate Commerce Committee in July, and having nearly half the Senate’s official support, the bill was not taken up for a floor vote in December as some had hoped.

The Commerce Committee is also currently pushing to advance the Platform Accountability and Transparency Act (PATA), a bill sponsored by Sen. Coons that would set reasonable standards for disclosure and transparency. No executives at the hearing were prepared to endorse PATA. “Let the record reflect a yawning silence from the leaders of the social media platforms,” Sen. Coons said.

After the hearing, Sen. Blumenthal told Tech Policy Press that KOSA must be passed to ensure tech companies are no longer grading their own homework. “That’s what KOSA would do by law, and we’re going to continue to shine a light on them [tech companies], call attention to them, and make sure that our story, but most importantly the stories that you’ve heard [from victims] here today, are front and center.”

During the hearing, the executives often refused to go on the record with definitive answers as to whether they support a bill, with some claiming they support bills “in spirit” (Meta’s Zuckerberg and TikTok’s Shou Chew) and others dodging specific legislation in favor of vaguer answers, like support for a “national privacy standard” (Discord’s Citron). And X’s Yaccarino was twice confronted about whether X supported the EARN IT Act, first by Sen. Klobuchar and again by Sen. Graham, offering only that she looked forward to “continuing conversations.”

Sen. Klobuchar became increasingly frustrated when met with non-responses as she ticked through the bills. “I’m so tired of this. It’s been 28 years since the internet, and we haven’t passed any of these bills because everyone's double talk, double talk. It's time to actually pass them and the reason they haven't passed is because of the power of your company,” she said.

Snap’s Evan Spiegel and X’s Linda Yaccarino, for their part, were at times the most forthright in their views on specific legislation, both offering clear, unequivocal support for KOSA, among other bills. The KOSA endorsements mark a significant shift that’s occurred in just the last week: despite vehement opposition to KOSA from the trade group NetChoice, which represents Meta, TikTok, Snap, X, and others, some tech executives are breaking ranks to come out in support of the bill. Less than a day before the hearing, Microsoft’s President Brad Smith also announced his company’s support.

Yaccarino also stood out for supporting nearly all of the proposed online safety legislation discussed, with the exception of the EARN IT Act. In one of the more critical questions directed at Yaccarino on Wednesday, Sen. Alex Padilla (D-CA) asked how she will implement guidance for the “hundreds of thousands” of minors on X. She answered that as a “14-month-old company,” X had just begun to discuss enhancing its preexisting parental controls. (Twitter, which was rebranded as X after a change in ownership 14 months ago, was founded in 2006.)

Tech Policy Press created a chart that shows which tech executives supported each bill mentioned at the hearing. For those who were not directly asked about certain bills, we marked their stances as “unclear,” and if an executive did not provide an affirmative “yes,” we marked it as a “no.”

Data and chart by Gabby Miller.

Sen. John Cornyn (R-TX) spent nearly all his allotted time questioning TikTok CEO Shou Chew about the company’s relationship to the Chinese government. He made no mention of the Project Safe Childhood Act (PSCA), a bill he sponsored that would align federal, state, and local entities to work together to combat child exploitation online. The bill passed the Senate by voice vote in October, while the accompanying House bill remains in committee. (Sen. Graham briefly mentioned the PSCA while grilling Citron on what legislation Discord supported.)

Some of Sen. Cornyn’s Republican colleagues, including Senators Tom Cotton (R-AR), Ted Cruz (R-TX), and Lindsey Graham (R-SC), also spent large parts of their allotted seven minutes questioning the Singaporean executive over the same concerns. These questions detracted from time spent pressing the CEO on real child safety issues plaguing TikTok, like predators misusing product features to post and share CSAM with their networks.

With Zuckerberg and Chew receiving the brunt of the senators’ questions, the remaining witnesses spent the majority of their time in silence.

In one of the only questions exclusively directed at Spiegel, Sen. Laphonza Butler (D-CA) asked the Snap CEO if he had anything to say to the parents of children who accessed illegal drugs through Snapchat, some of whom have died from overdose. Spiegel did apologize, albeit for Snap's inability to “prevent these tragedies.” Spiegel, in describing the work Snap has done to “proactively look for and detect drug related content,” cited a campaign Snapchat ran on its app that was “viewed more than 260 million times” as one of the only indicators of success. Spiegel stood alone in supporting the Cooper Davis Act, which would require providers to report the sale or distribution of counterfeit controlled substances on their platforms.

Sen. Lindsey Graham, while not directly mentioning a bill, advocated for and asked executives about repealing Section 230 of the Communications Decency Act, which gives blanket immunity to providers that host illegal content on their sites. When it comes to repealing Section 230, Sen. Graham isn’t alone. In an interview with Tech Policy Press, New Mexico Attorney General Raúl Torrez said that the law was enacted in a time before social media, and that he thinks what was once a protective wall behind which these tech companies could grow has now gotten out of hand.

Attorney General Torrez believes more legal action against tech companies should be permitted. In December, he filed a 225-page lawsuit against Meta and Mark Zuckerberg for allegedly failing to protect children from sexual abuse, online solicitation, and human trafficking. The suit also argues that the flawed design of Instagram and Facebook led to the recommendation of CSAM and child predator accounts to minor users, in violation of New Mexico’s Unfair Practices Act.

Attorney General Torrez remains hopeful that changes that would hold Big Tech accountable are around the corner. “What you saw today is a real bipartisan consensus that the status quo is not working and not protecting the most vulnerable members of the community.”

As the hearing drew to a close, Sen. Peter Welch (D-VT) said he felt a wave of optimism because of the Committee’s shared concerns about children’s safety online. “There is a consensus today that didn’t exist, say, 10 years ago, that there is a profound threat to children, to mental health, to safety,” he said.

As Todd Minor held a photo of his late son, Matthew, he told Tech Policy Press that tech executives need to “understand that this is not going away.” The Minors have been advocating for KOSA and now run a foundation named after their son, through which they support their local community by raising awareness about online dangers and offering a college scholarship fund.

Now, Todd Minor would like for the tech executives to reach out to his family and other surviving parents for their input on improving these platforms and prioritizing safety over profits. “Either they comply, or we’re just going to keep coming,” he added.
