Are We Ready for the Quantum Age? Preparing for the Risks of Quantum Technologies with Rights-Respecting Policy Frameworks

Daniel Duke, Lorri Anne Meils / Mar 5, 2024

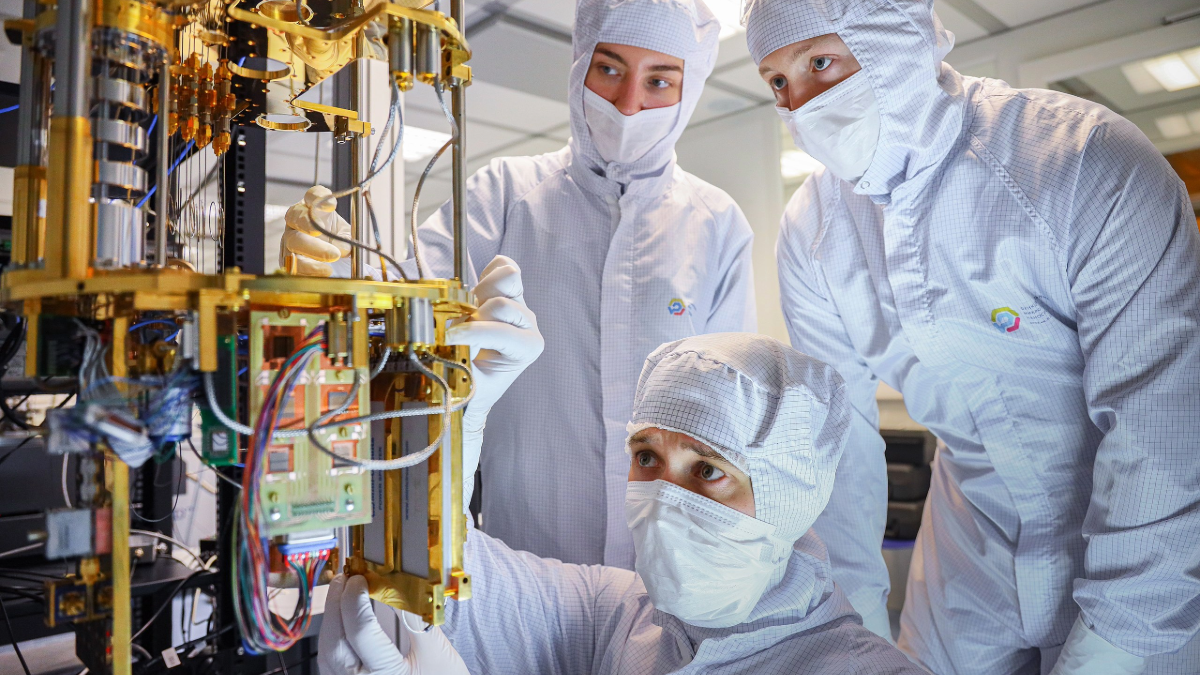

A team at FMN Laboratory assembles a cryogenic part of a quantum computer, which provides cooling of superconducting processors to almost absolute zero. Wikimedia/CC by 4.0

At what point will we declare that quantum technologies are no longer emerging, but have fully arrived? Whatever the breakthrough is that signals the tipping point, legal frameworks are not yet ready to handle the impacts of widespread quantum computing on people, societies and the rights they hold. Recent developments in the artificial intelligence (AI) policy space provide a useful roadmap for anticipating the evolution of policy approaches for regulating quantum technologies and the universe of risks they will bring with them.

Yet, as with AI, the risks are still underexamined. Though we know that they will emanate from the ways in which quantum computing will amplify existing technologies, such as AI and surveillance, it is also clear they will stem from brand new capabilities, like breaking today's most widely used public-key encryption or the application of quantum sensing (which will bring the ability to see through barriers, around corners, and potentially into the body or mind). This article aims to shine a light on these risks, as well as the practical steps that can be taken today to address them.

Getting Ahead of the Problem

The widespread release of generative AI models and applications in 2023 sent shockwaves through popular culture and signaled to world leaders and policymakers that the risks of AI outstripped many of our existing risk management frameworks. It triggered an unprecedented wave of new efforts to plug the gaps, including the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, the Voluntary Commitments from Leading Artificial Intelligence Companies, the Bletchley Declaration, the Hiroshima Process, the UN Advisory Body on AI Interim Report on Governing AI for Humanity, the NIST AI Risk Management Framework, and the EU AI Act, as well as the forthcoming Council of Europe’s Convention on Artificial Intelligence, Human Rights, Democracy and the Rule of Law.

Stakeholders point out that the fourth-quarter rush to better govern AI parallels the pace of efforts to govern social media and the digital economy. They continue to urge policymakers to act with greater speed to safeguard against AI risks, including through stronger application of existing human rights frameworks. The wait-and-see approach to regulation is only justifiable when the benefits of innovation are clear and the risks are low, ill-defined or underexamined. However, quantum computing, particularly in conjunction with AI, has many foreseeable dangers. Hard-won lessons from recent tech policy history show us how critical it is for policymakers to safeguard quantum technologies before they are more widely deployed and accessible.

The risks that over-regulation can stifle innovation and cause technological leaders among nations, like the US, to be less competitive in a complicated geopolitical environment are real, and policy recommendations must balance these considerations. Considering quantum regulation now provides an opportunity to develop forward-looking, intentional policy frameworks that better balance the need for innovation with the need to safeguard human rights. Now is the time to begin these conversations, before yet another Pandora's box of societal ills is opened.

IBM, the United States’ foremost quantum developer, estimates that by 2030 the “full power” of quantum computing will be unlocked. If the company’s estimates are accurate, there could be as little as six years to build the international consensus needed to establish guardrails for responsible and rights-respecting quantum computing, including updated standards for cryptography. If the past is precedent, it will take time for the global community to coalesce around approaches for integrating key human rights principles into innovation-friendly risk management frameworks for quantum, and it will take even longer for new and updated standards to be implemented. For example, the Biden Administration’s 2022 National Security Memorandum 10 on Promoting United States Leadership in Quantum Computing While Mitigating Risks to Vulnerable Cryptographic Systems establishes 2035 as the date by which US Government entities should achieve a timely and equitable transition to quantum-resistant cryptography to mitigate as much risk as possible. The time to start building consensus is now. What risks should policymakers and companies prioritize and what can be done to manage them?

Imagining what’s at stake

To underscore the urgency of preventative policy action, we present three concrete examples of the potential dangers posed by quantum computing if we fail to take precautionary steps now. These three risks are among the most near-term issues the world will confront as quantum technologies are deployed for everyday use: encryption-breaking quantum computing, the pairing of quantum technologies with artificial intelligence for digital repression, and the application of quantum technologies to make thoughts legible to external observers (also known as mind reading).

First, a quick overview of what “quantum technologies” mean at this moment in time. In their groundbreaking 2021 book Law and Policy for the Quantum Age, Chris Jay Hoofnagle and Simson L. Garfinkel outline three areas in which quantum information science (QIS) will have the biggest near-term impacts on nation states, decisionmakers (including investors), and individuals’ lives. Those areas are: quantum sensing, quantum computing, and quantum communications, which are defined below. The authors highlight that the nexus of these QIS sectors presents a number of potential civil and political rights implications that existing policy frameworks do not yet address. Fundamental human rights standards can and will eventually be applied to prevent and address the application of QIS technologies in harmful ways. However, the slow, halting application of such standards in the social media and AI spaces, often in the wake of avoidable tragedies, teaches us that additional international consensus is required to better define and guide how human rights shape technology governance. The absence of fit-for-purpose frameworks enables bad or negligent actors to take advantage of the gray space to society’s collective detriment.

For many readers, QIS technologies are likely not yet well known. Here are some basics:

- Quantum computing leverages quantum mechanics to solve certain complex problems that classical computers cannot solve in any practical amount of time. Rather than storing information only as zeros and ones, quantum bits (qubits) can exist in a combination of zero and one simultaneously, enabling quantum computers to perform some kinds of calculations much more quickly than a classical computer.

- Quantum sensing is defined by Hoofnagle and Garfinkel as using “quantum properties and effects to measure or sense physical things.” In general terms, this is the use of quantum sensors to measure changes to equilibrium at the atomic and subatomic levels. For purposes of this article we focus on magnetic field sensing and quantum sonar, which could be used to detect subtle electrical signals in the brain for what Hoofnagle and Garfinkel refer to as “brain wiretapping.” Quantum sensing provides an excellent example of potential risks that are not specifically addressed by existing regulation. As Hoofnagle and Garfinkel point out, “With the power to see through roofs and walls, or as sensing peers into the body and possibly the human mind, society will have to reconsider boundaries and rules on what may be observed.”

- Quantum communication uses quantum mechanics to encrypt and transmit data in a way that is undetectable and undecipherable by classical computers. Quantum communication via quantum satellites is already in use, and increased uptake will greatly impact international relations. According to reports, Russia and China recently established a quantum communication link. The emergence of siloed communication channels among trusted allies stands to shape the nature of geopolitical alignment in the near future.
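To make the quantum computing definition above a little more concrete, the following is a minimal classical sketch, not a real quantum program. It assumes the standard textbook picture in which a register of n qubits is described by 2^n amplitudes, and it uses hypothetical helper names (`equal_superposition`, `measurement_probabilities`) chosen only for illustration.

```python
import math

def equal_superposition(num_qubits: int) -> list[float]:
    """Amplitudes of a register where every bit pattern is equally likely."""
    dimension = 2 ** num_qubits            # one amplitude per bit pattern
    amplitude = 1 / math.sqrt(dimension)   # chosen so probabilities sum to 1
    return [amplitude] * dimension

def measurement_probabilities(state: list[float]) -> list[float]:
    """Probability of observing each bit pattern when the register is measured."""
    return [abs(a) ** 2 for a in state]

state = equal_superposition(3)
probabilities = measurement_probabilities(state)
print(len(state), "amplitudes describe 3 qubits")
print("total probability:", round(sum(probabilities), 6))
```

The description grows exponentially with the number of qubits, but a measurement returns only one bit pattern. This is why quantum speedups depend on carefully designed algorithms rather than on simply holding more data.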

While we are focused on the potential human rights risks that could result from more generally accessible quantum technologies, human-rights based risk frameworks can and should be developed to consider the broader range of risks relating to the application of QIS technologies across the tech stack and across all sectors of society, industry and national defense. This article outlines some of the most troubling risks, largely outside of the national security context, and suggests potential policy approaches that policymakers can prioritize in the coming decade.

The End of Data Encryption as We Know It?

In this age of hyper-connectivity, the sanctity of personal information underpins not only individual privacy but also the pillars of national security and global diplomacy. This sanctity is often secured by RSA encryption. In basic terms, RSA encryption involves two keys: a public key, which can be shared with everyone, and a private key, which is kept secret. When a message is sent, it is encrypted using the recipient's public key. This encrypted message can only be decrypted with the corresponding private key. The security of RSA stems from the fact that, while it's relatively easy to multiply two large prime numbers together to create a product, it's extremely difficult to do the reverse—that is, to start with the product and find the original prime numbers. This one-way function is what makes RSA encryption among our most robust data privacy protections. The greatest supercomputers on the planet today would take millions of years to break this code. A seemingly invincible algorithm will meet its match, though, in the coming age of quantum computing.
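The public and private key relationship described above can be illustrated with a toy example. This is a sketch only: it uses tiny primes (61 and 53) and omits the padding and key sizes that real RSA deployments require, and the function names are hypothetical. It shows that multiplying the primes is easy, while an attacker must factor the product to recover the private key.

```python
from math import gcd

def make_keys(p: int, q: int, e: int = 17):
    """Build a toy RSA key pair from two primes p and q."""
    n = p * q                    # public modulus: easy to compute from p and q
    phi = (p - 1) * (q - 1)      # can only be computed by someone who knows p and q
    if gcd(e, phi) != 1:
        raise ValueError("e must share no factor with (p - 1) * (q - 1)")
    d = pow(e, -1, phi)          # private exponent (modular inverse, Python 3.8+)
    return (n, e), (n, d)

def encrypt(message: int, public_key) -> int:
    n, e = public_key
    return pow(message, e, n)

def decrypt(ciphertext: int, private_key) -> int:
    n, d = private_key
    return pow(ciphertext, d, n)

def factor_by_trial_division(n: int):
    """Brute-force attack on the modulus; feasible here only because n is tiny."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    raise ValueError("n has no nontrivial factors")

public_key, private_key = make_keys(61, 53)
ciphertext = encrypt(42, public_key)
recovered = decrypt(ciphertext, private_key)
print("recovered message:", recovered)
print("attacker's factors:", factor_by_trial_division(public_key[0]))
```

With a four-digit modulus the brute-force search finishes instantly. The number of candidates it must try grows so quickly with key size that the same search is hopeless against the moduli used in practice, which are hundreds of digits long.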

Quantum computers are uniquely advantaged in solving this problem due to their fundamentally different approach to processing information. Qubits within a quantum computer can exist in a superposition of multiple states at once, in stark contrast to the binary nature of traditional bits. Quantum programs such as Shor’s Factoring Algorithm take advantage of this property, together with quantum interference, to find a hidden repeating pattern (a period) in arithmetic involving the public key, from which the prime factors can then be computed. This fundamental distinction allows these devices to determine the correct factors much faster than traditional computers. A sufficiently powerful quantum computer could cut the time needed to decode RSA encryption from eons to minutes.
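For readers curious about the mechanics, Shor's algorithm reduces factoring to finding the period (or "order") of repeated multiplication modulo the public number, and the quantum speedup lies in that period-finding step. The sketch below is a purely classical stand-in with hypothetical function names: it finds the period with a slow loop, which is exactly the part a quantum computer would replace, and then applies the classical arithmetic that turns the period into factors.

```python
from math import gcd

def find_order_classically(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (assumes gcd(a, n) == 1).

    This loop takes time that grows exponentially with the size of n;
    it is the step a quantum computer would perform efficiently.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a: int, n: int):
    """Try to split n using the order of a; returns None if this a is unlucky."""
    shared = gcd(a, n)
    if shared != 1:
        return tuple(sorted((shared, n // shared)))  # a already shares a factor
    r = find_order_classically(a, n)
    if r % 2 == 1:
        return None                   # odd period: pick another a
    half_power = pow(a, r // 2, n)
    if half_power == n - 1:
        return None                   # trivial square root: pick another a
    return tuple(sorted((gcd(half_power - 1, n), gcd(half_power + 1, n))))

print("order of 7 mod 15:", find_order_classically(7, 15))
print("factors of 15:", factor_from_order(7, 15))
```

Here the period of 7 modulo 15 is 4, and the greatest-common-divisor step yields the factors 3 and 5. For a real RSA modulus, only the period-finding loop is out of reach for classical machines.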

Some experts hold that RSA’s demise is a distant problem, given the current capabilities of quantum computers. While we are still jumping the technological hurdle of scaling quantum devices, and although Shor’s algorithm is computationally taxing, recent research such as that by NYU researcher Oded Regev may bring about quantum code-breaking much sooner than we once thought. Given the rapidly changing quantum landscape, with new research constantly being published, the uncertain timeline for these algorithms is all the more reason to be prepared.

The threats that this development poses to our data infrastructure are glaringly obvious. In addition to threatening the security of government secrets and citizens’ private information, an RSA breach could have significant human rights implications. Consider the nature of end-to-end encryption over messaging services that use RSA encryption such as Skype, Apple iMessage and Telegram. These tools provide human rights defenders and activists with a means of communication that is less vulnerable to unwarranted surveillance practices, enabling them to avoid arrest or detention for exercising protected civil and political rights. As quantum computers extend encryption-breaking capabilities to repressive regimes, human rights defenders will become easy targets for government surveillance and repression. Repressive regimes may already be collecting currently uncrackable message contents in hopes they may be readable down the road using a “Harvest Now, Decrypt Later” methodology, a scenario that has already prompted some tech firms to act.

Policy Response: How Can We Quantum-Proof Encryption?

Adopting post-quantum cryptography will be logistically challenging and resource intensive, but it is an issue we must address urgently. The path is clear: establish a more forward-looking quantum policy agenda that mandates the overhaul of our encryption standards and software to elevate the use of algorithms that are safe against classical and quantum computation. The United States has already taken decisive action in this area. In 2022 the Biden Administration’s National Security Memorandum 10 on Promoting United States Leadership in Quantum Computing While Mitigating Risks to Vulnerable Cryptographic Systems established 2035 as the date by which US Government entities should achieve a timely and equitable transition to quantum-resistant cryptography to mitigate as much risk as possible. To support implementation of NSM-10, the US is developing standards for post-quantum encryption methods through the National Institute of Standards and Technology (NIST), which has already selected four quantum-resistant encryption algorithms.

The development and integration of these standards into software and hardware requires concerted efforts from manufacturers and developers, including rigorous security and interoperability testing. Moreover, the update of critical infrastructure and services must be prioritized to uphold security and trust. Regulatory adjustments by governments to foster or enforce the adoption of these new encryption standards are essential, alongside public education initiatives to highlight the importance of embracing these updates for enhanced security. Continuous research and adaptation are imperative to counteract evolving cyber threats and technological innovations, effectively future-proofing encryption methods. The degree to which new standards are implemented depends upon the availability of sufficient resources to convert encryption systems. Those resources will only be made available if government and private sector stakeholders are sufficiently aware of impending risks and motivated to prioritize often scarce resources.

What Risks Can We Expect from the Marriage of AI and Quantum Computing?

Academics, policymakers and civil society groups have raised alarm bells in recent years to draw attention to the risks posed by the misuse of technology, including artificial intelligence, to repress political opposition, surveil activists and control populations. As authoritarian (and some democratic) regimes increasingly harness technology to repress the public and retain or expand power, threats to fundamental civil and political rights are growing. While policymakers currently have their hands full developing human rights frameworks and safeguarding tools to better identify and manage the risks of artificial intelligence, advances in QIS will not wait. As human rights and technology scholar Vivek Krishnamurthy warns us, “Quantum technologies may not yet be at the level of development where their potential impacts can be examined in detail. Even so, now is the time for the [quantum science and technology] and human rights communities to begin a dialogue to prepare for the deployment and commercialization of these technologies in a rights-respecting manner.”

While many unknowns remain, there are a number of risks that are more foreseeable, as described below. Is there a way to shape evolving AI risk management frameworks to account for the additional impacts of AI combined with quantum technologies? For example, guardrails that mitigate the risks of AI-powered data fusion and social scoring would go a long way to mitigating the compounded impacts when AI is combined with quantum technologies. In addition to building upon the policy roadmap provided by AI governance frameworks in the future, is it possible to embed additional, quantum-facing risk management measures now?

1. Digital Repression through AI-Powered Surveillance Technologies and Big Data Analytics.

AI is already being used by autocratic governments to better track political opposition and activists, and to coerce support for autocratic regimes through denial of needed government services. As noted in the 2020 Senate Foreign Relations Committee report on the use of surveillance and big data analytics in the People’s Republic of China, “artificial intelligence, facial recognition technologies, biometrics, surveillance cameras, and big data analytics [are being used] to profile and categorize individuals quickly, track movements, predict activities, and preemptively take action against those considered a threat in both the real world and online.” Through big data analytics, algorithms aggregate personal data and surveillance data surrounding one’s behavior, activities, and social interactions in order to track or even score individuals. This process requires the analysis of a huge amount of data, which is challenging for classical computers on a massive scale, but may be well suited to future quantum systems. Quantum computers’ potential to speed up certain kinds of large-scale analysis could enable disturbingly sophisticated and invasive analysis of personal behaviors and social interactions. This increased computational power would allow for the real-time monitoring and scoring of individuals on a more granular level, “super-sizing” tactics for authoritarian control and surveillance.

2. The Death of Anonymity.

As alluded to above, real-time remote biometric surveillance equipment creates the capacity to track individuals. Digital identification and centralized databases for this information create the potential for governments and for-profit enterprises to misuse such systems to monitor individuals through the use of big data analytics. Artificial intelligence can make sense of this data in order to create profiles of citizens which aim to distinguish one person from another based on collected biometric information. The Carnegie Council estimates that over 100 US cities are currently using “data fusion” technologies to track individuals through doorbell cameras, license plate readers, digital utility meters, street cameras, and GPS technologies, in a way that can create extensive individual profiles. Data fusion is defined as bringing data points together to create a “swarm” of information that can reveal a great deal about a traceable individual. The Carnegie Council’s Data Fusion Mapping Tool provides an overview of the impacts of data fusion on the exercise of civil liberties in the US and highlights the risks of allowing data fusion to be used in jurisdictions without adequate due process or other risk mitigation measures.

AI-powered data fusion is not yet universally used. Now is the time to consider the implications of a “super-sized” universal data fusion capacity powered by quantum computing technology. Quantum-powered data fusion could make it impossible for an individual to evade tracking due to the power to process massive amounts of data pulled from unlimited public or private sector sources. Quantum computers will further expand the ability of surveillance systems to recognize your gait across millions of hours of surveillance footage, single out your voice from an audio recording of a crowded room, or identify you from the cadence of your keystrokes, without needing to read the text you send. Whether moving through city streets, participating in protest, or simply enjoying the supposed solitude of open spaces, the shadow of surveillance looms large, with quantum-enhanced systems capable of sifting through the haystack of data to pinpoint the needle of an individual identity with astonishing precision. In short, the birth of quantum computing may signal the death of anonymity.

Due to their anticipated ability to analyze huge data sets and recognize patterns or deviations from those patterns, quantum computers could detect anomalies far more effectively than classical computers. When fed surveillance data regarding the behavior of an individual, a future quantum computer would have the power to determine if that behavior deviates from their usual conduct, and ascertain what future actions will likely stem from this abnormality. Human rights concerns arise if and when this technology is applied for the purpose of predictive policing. Detaining or questioning individuals based on predicted future actions blurs the line between potential and actual wrongdoing. If left unchecked, this predictive technology could be used to further erode the line between intent to potentially commit a crime and the criminal act itself.
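The idea of flagging "deviations from usual conduct" can be made concrete with a very simple classical technique. The sketch below uses a z-score test on made-up numbers. It is an illustrative assumption, not a description of any deployed surveillance or quantum system, and the function name and threshold are hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, new_values, threshold=3.0):
    """Return the new values lying more than `threshold` standard
    deviations away from the baseline average."""
    average = mean(baseline)
    spread = stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has no variation to compare against")
    return [v for v in new_values if abs(v - average) / spread > threshold]

# Hypothetical daily counts of some routine activity.
usual_pattern = [10, 12, 11, 9, 10, 11, 12, 10]
flagged = flag_anomalies(usual_pattern, [11, 25, 10])
print("flagged as unusual:", flagged)
```

The test knows nothing about why a value is unusual. A harmless change in routine is flagged just as readily as anything else, which is part of why acting on such predictions raises the due process concerns discussed above.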

Policy Response: Approaches to Preventing Quantum-Powered Digital Repression

Lawmakers are working to enact safeguards needed to address risks that can result from the application of artificial intelligence for certain uses and in certain contexts. For example, the EU AI Act will prohibit social scoring, certain applications of predictive policing, and remote biometric identification for law enforcement purposes in public settings. There is not yet global consensus supporting prohibition of these uses of AI, and there are clear concerns that such prohibitions will stifle innovation or constrain law enforcement. The fact remains that international consensus on innovation-friendly AI safeguards is urgently needed before the riskiest use cases outlined above become commonly accepted practice. Such guidelines, many of which are under development by the United Nations, OECD (in multiple papers), and other international bodies, will provide an invaluable roadmap for launching similar efforts to constrain the misuse of quantum-based technologies for digital repression.

Beyond the policy realm, are companies taking up the challenge to design, develop and deploy QIS in ways that protect us from extreme “misuse” cases? If QIS is deployed in tandem with data-driven AI technologies, then the biases and inaccuracies that can emerge from AI applications would be substantially scaled beyond what we see today. How will existing algorithmic bias audits or similar safeguards be tweaked to consider the potential impacts of the quantum age? What role can regulation play in prompting companies to take such steps without stifling innovation or hampering law enforcement? How can we advance such efforts now, before Pandora opens the box? And perhaps most urgently, can we apply a quantum lens to the development of AI governance frameworks today that may help us mitigate tomorrow’s risks?

Brain Legibility

We are already living in a time when machines are capable of translating your brain activity, as seen through an MRI, into words. Your very thoughts are now legible. Surveillance cameras are similarly trained to register your emotions. This is a form of emotional AI, which companies are already using to improve targeted sales. Do you have the right not to have your mind or emotions read? This is a question we will need to resolve before quantum computing amplifies the capabilities of mentally intrusive technologies.

Quantum computers are likely to amplify the power that classical computers already have to identify patterns and correlations in MRI brain scan images, and may surface patterns that classical computers cannot. Consider again the question of arrests made possible by quantum computing. Is a quantum-powered lie detector test (one using an MRI machine and a sufficiently powerful quantum AI algorithm, instead of a heart rate monitor) admissible in court? To take it a step further, is intent to commit a crime, if recognized through the power of a quantum mind-reader, grounds for legal intervention? And what guardrails would be required to ensure that the data sets upon which such algorithms are based are free from bias and inaccuracy? While these applications of quantum computing are more speculative than the inferences made above, they are potentially more urgent given the degree of possible harm and the absence of targeted human-rights frameworks or safeguards.

Policy Response: Establishing Guardrails to Protect the Legible Brain

Critical questions about the limits of brain legibility do not appear to be at the forefront of most AI policy conversations, which leads one to conclude they will be similarly sidelined in future engagements on the intersection of human rights and quantum computing. Policies that establish human rights-based neurological safeguards are still underdeveloped. Now is the time to better define them. While we are far from an international consensus, one initial effort to define “neurorights” identified five categories that could be helpful in considering the impact of quantum-powered brain legibility. Those rights are: “the right to mental privacy so that our brain data cannot be used without our consent; the right to free will, so we can make decisions without neuro technological influence, the right to personal identity so that technology cannot change our sense of self, the right to protection from discrimination based on brain data, and not least, the right to equal access to neural augmentation.” International policy conversations outlining the application of human rights in this context are urgently needed and long overdue. It is unclear whether the “neurorights” discussion will attract global attention. Fortunately, policymakers have a wealth of existing human rights to consider in connection with emerging quantum mind-reading risks, including the right to bodily integrity that protects autonomy over one’s body.

Conclusion: The Sprint to Regulate AI Provides a Critical Policy Roadmap for Quantum

It is too early to identify the full range of potential impacts that QIS technologies may have on individuals and societies. However, experience establishing safeguards in connection with the internet, social media and artificial intelligence shows how difficult it can be to erect risk management efforts after economic models are entrenched or unregulated behaviors coalesce into accepted practice, regardless of their impacts. Now is the time to raise awareness of the foreseeable risks and increase research on risks that are less well understood. Increased advocacy by stakeholders from civil society, consumer protection organizations and academic institutions will help to justify allocation of the resources needed to achieve the recommendations outlined above. Financial commitments by public and private sector entities will be necessary to support a transition to quantum-ready encryption by 2035. Resources will also be needed to support policy analysts in considering if and how quantum considerations can be accommodated in today’s AI risk management frameworks. And perhaps most importantly, QIS developers must allocate sufficient resources to understand the impacts that brand new capabilities, like quantum sensing, will have on individuals and society as a whole.

The quantum computing community has a great deal to learn from recent efforts to apply the UN Guiding Principles for Business and Human Rights to generative AI models and applications. Such efforts provide a roadmap for weaving human rights-based enterprise risk management approaches into the governance of QIS, for governments and businesses alike. QIS stakeholders are fortunate to have an opportunity to build upon the evolving international consensus being hammered out now for AI.

The risk quantum computing poses to RSA encryption is already well understood, and NIST has established important guidelines for bringing encryption standards into the quantum age. However, as noted above, this shift will require significant policy support and even public funding to ensure that the pace of transition matches evolving quantum capabilities.

While world leaders and policymakers have their hands full addressing the most urgent AI-related risks, parallel questions in the QIS space will become increasingly urgent as we near 2030. Bandwidth among policymakers in the technology space is more limited than ever, and one can argue that regulating quantum risks should take a backseat when compared with the urgency of present-day impacts of AI. While the risks may be years away, they will also be significant. This moment offers an important opportunity for the legions of organizations, think tanks and academics who moved quickly to respond to evolving risks of generative AI to now turn their attention to the QIS horizon. This is the time to prepare the same level of thoroughly researched, insightful and practical recommendations for innovation-friendly QIS risk management that will enable policymakers and companies to take action before global society becomes a real-time testbed for identifying QIS impacts.

This article represents the opinions of the authors and in no way reflects the position of the United States Government or USAID. Thanks go to Stanley Byers, Chris Doten and Paul Nelson for their contributions to this article.

Authors