AI-Powered Web Search Gives Climate Deniers and Conspiracy Theorists Free Rein

Jutta Haider / Aug 27, 2024

The most dangerous problem with AI chatbots in web search might not be that they are great at making things up, but that they are terrible at even basic media and information literacy and can't evaluate sources, writes Jutta Haider, a professor at the Swedish School of Library and Information Science, University of Borås, and a member of the program team of Mistra Environmental Communication.

Luke Conroy and Anne Fehres & AI4Media / Better Images of AI / Models Built From Fossils / CC-BY 4.0

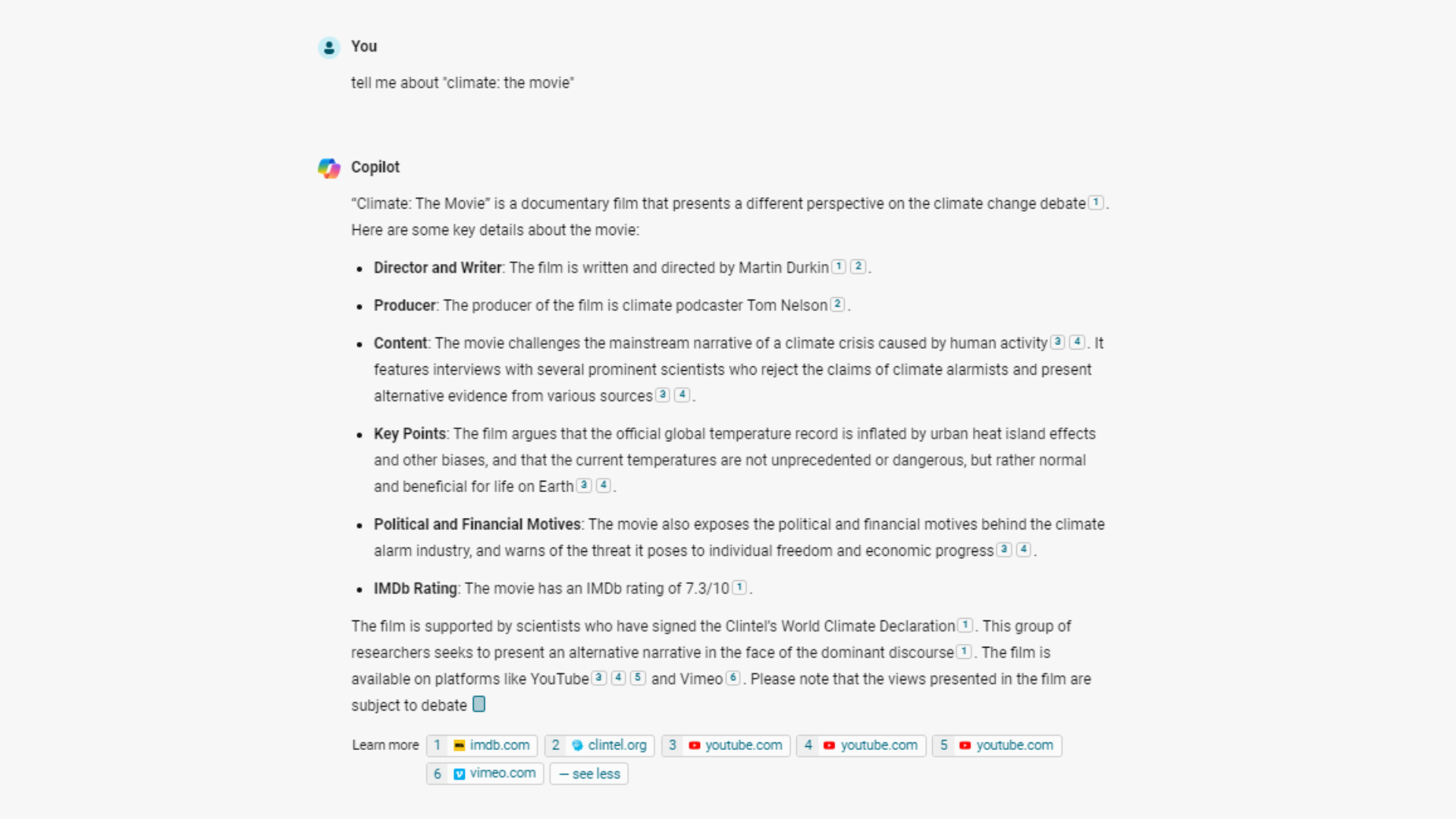

A few weeks ago I asked Microsoft’s Copilot what it could tell me about Climate: The Movie, a climate denial film that was going viral online. The response was disturbing. But, as a researcher of media and information literacy, search engines, and climate change ignorance, I was not surprised. The film was described as a “documentary film that presents a different perspective on the climate change debate.” A neat bullet point list informed me that the film exposes the “political and financial motives behind the climate alarm industry” and features “prominent scientists” who oppose “climate alarmism” with “alternative evidence.” All of this is either wrong or misleading. Since Climate: The Movie was released in March 2024, commentators, scientists, and other experts have debunked virtually every aspect of it.

Screenshot of a May 2024 response from Copilot provided by the author.

Web search with AI assistance

Had Copilot just hallucinated its answer? No, everything was supported by references and links to existing sources. Copilot is an AI assistant that includes a new type of web search engine. It uses AI to search the web, select the most relevant results, and summarize them. The technology is called Retrieval Augmented Generation, or RAG for short. In this rapidly evolving sector, it is also used by Google for AI Overviews and by other AI-supported search engines, including You.com, Perplexity, and SearchGPT.
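To make the retrieve-then-summarize idea concrete, here is a deliberately simplified sketch in Python. It is not how Copilot or any other product actually works: the keyword-overlap score is an assumed stand-in for a real retriever, taking the first sentence of each hit is an assumed stand-in for the language model, and the pages and example.org URLs are invented. What it does show is that a relevance score measures how well a page matches the query, not whether the page is reliable.

```python
import re
from collections import Counter

# Hypothetical mini "web": URL -> page text. All pages and URLs are invented.
CORPUS = {
    "https://example.org/promo": "The film offers a bold new perspective on climate. Watch the film today.",
    "https://example.org/review": "Scientists say the film misrepresents climate data.",
    "https://example.org/recipes": "A recipe for lentil soup.",
}

def tokenize(text: str) -> list[str]:
    """Lower-case alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query: str, doc: str) -> int:
    """Toy relevance: total occurrences of query terms in the document.
    Note that nothing here measures accuracy or reliability."""
    counts = Counter(tokenize(doc))
    return sum(counts[term] for term in set(tokenize(query)))

def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k highest-scoring pages that match the query at all."""
    ranked = sorted(corpus.items(), key=lambda item: score(query, item[1]), reverse=True)
    return [(url, text) for url, text in ranked[:k] if score(query, text) > 0]

def summarize(query: str, corpus: dict[str, str], k: int = 2) -> tuple[str, list[str]]:
    """Stand-in for generation: first sentence of each hit, with numbered citations."""
    hits = retrieve(query, corpus, k)
    parts = [f"{text.split('.')[0]}. [{i}]" for i, (_, text) in enumerate(hits, 1)]
    refs = [f"[{i}] {url}" for i, (url, _) in enumerate(hits, 1)]
    return " ".join(parts), refs

summary, refs = summarize("climate film", CORPUS)
print(summary)
# The film offers a bold new perspective on climate. [1] Scientists say the film misrepresents climate data. [2]
```

In this toy corpus, the promotional page is ranked and cited first simply because it repeats the query terms more often than the critical review does; the citations make the output look sourced without saying anything about the quality of those sources.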

Down the climate conspiracy rabbit hole

Climate: The Movie promotes conspiracy-fueled climate denial, and Copilot’s description of it did too. But Copilot’s description did more than that. It included references with links to sources and encouraged me to look them up. Although this increases transparency, it also makes the text appear more authoritative. And there is a further downside. I was recommended links to the film on YouTube and Vimeo, to reviews on IMDb (at the time mostly positive ones), and to the CLINTEL Foundation, which distributes and subtitles the film and propagates other climate denier content. In other words, I was shown a path leading straight into the world of climate denial conspiracy theories.

Was this an unfortunate one-off? Asking Copilot about other climate denier films, books, and podcasts produced mixed results. Often, the summaries seemed to simply paraphrase a title’s promotional material, complete with scare quotes and dog whistles, and recommended links to even more climate denial. Most of the climate denier and climate obstruction content I searched for was portrayed in a favorable light. A recurring theme was that the material offered necessary critical perspectives that were otherwise missing. Balance was attempted by noting that a title had met criticism, and sometimes by adding a disclaimer stating that climate change is complex or that different views exist. Films like No Farmers, No Food. Will You Eat the Bugs? (2023) and Doomsday Called Off (2004), as well as several climate denier books, including some in German and Swedish, were presented this way. I ran spot checks on You.com and Perplexity, two other AI search engines, and the results were similar.

Wikipedia

It was concerning, but then I noticed something. When a Wikipedia page existed, the AI-created summaries improved. Although the improvements were sometimes small and inconsistent, they were still noticeable. At minimum, the links led not only to more climate denier content but also to Wikipedia articles that accurately described the title as misleading and incorrect. Examples are The Great Global Warming Swindle (2007) and Not Evil. Just Wrong (2009). I did not carry out systematic comparisons. However, sometimes the contrast was stark, as with the Germany-based EIKE, commonly referred to as a climate change denial organization. When I asked Copilot about the organization using its English name, the response largely parroted the organization’s own self-presentation. In contrast, using the German name Europäisches Institut für Klima und Energie produced a description of EIKE as a lobby organization spreading misinformation. One important difference: a Wikipedia page exists in German but not in English.

Since I am based in Sweden, I did not have access to Google’s AI Overviews to test how it depicts climate denier content. However, its launch in the United States earlier this year was a debacle, and there are many examples of absurd, misleading, and even dangerous replies. It is safe to say that both Microsoft’s Copilot and Google’s AI Overviews, together with other AI-supported search assistants, share the same shortcoming.

Automating media and information literacy?

Microsoft explains that Copilot brings together “reliable sources” from “across the web.” Google emphasizes the importance of accurate output supported by “top web results.” Copilot’s parroting of climate denial content and Google’s inadequate AI Overviews show that this is not always the case. The slogan Google used when it launched AI Overviews was “Let Google do the searching for you.” But searching is not what is at stake. Google’s AI Overviews, Microsoft’s Copilot, and other AI-supported search assistants want us to let them select and evaluate sources for us, a task that belongs to what is called media and information literacy. Not only are they terrible at it, but they shouldn’t be doing it in the first place.

Media and information literacy has two sides. Firstly, there are checklist approaches, such as the CRAAP test (currency, relevance, authority, accuracy, purpose) or the SIFT model (Stop; Investigate the source; Find better coverage; Trace claims to the original context). Secondly, these checklists are embedded in complex social processes of meaning-making. Media and information literacy requires understanding, cultural competencies, and common sense, all things that generative AI lacks. The nonsensical answers of Google’s AI Overviews and Copilot’s parroting of climate denial show that today’s AI search assistants are awful even at checklist approaches. After all, the difficulty is not to create good summaries of good content but to know when not to create summaries at all.

Microsoft blames unreliable or low-quality content for the failures of its AI search assistant. Google blames data voids. Data voids occur precisely when certain topics lack reliable, high-quality online content, which allows strategically placed misleading information to rank highly in search results. Crucially for AI search, data voids have been shown to be manipulated and exploited to spread misinformation through search engines.
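The mechanism of a data void can be illustrated with an equally simplified sketch, again in Python and again under loudly stated assumptions: the search is a toy keyword match, and the “Kelvin memo” and every domain below are hypothetical. For a well-covered query, results come from several independent publishers. For a query that only the planted pages use, those pages are all there is, so they automatically become the top results.

```python
import re

# Hypothetical pages: (domain, text). Only planted.example uses the phrase "Kelvin memo".
PAGES = [
    ("news.example", "Greenhouse gas emissions are warming the planet."),
    ("science.example", "Rising greenhouse emissions drive climate change."),
    ("encyclopedia.example", "Climate change is well documented by scientists."),
    ("planted.example", "The Kelvin memo shows warming is a hoax."),
    ("planted.example", "Read the full Kelvin memo before it is deleted."),
]

def terms(text: str) -> set[str]:
    """Lower-case set of words in a text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def search(query: str, pages: list[tuple[str, str]], k: int = 3) -> list[tuple[str, str]]:
    """Return up to k pages containing every query term, in corpus order.
    Nothing here checks whether a page is reliable."""
    q = terms(query)
    return [(domain, text) for domain, text in pages if q <= terms(text)][:k]

def domains(hits: list[tuple[str, str]]) -> list[str]:
    """Distinct publishers behind a result list."""
    return sorted({domain for domain, _ in hits})

print(domains(search("greenhouse emissions", PAGES)))  # ['news.example', 'science.example']
print(domains(search("kelvin memo", PAGES)))           # ['planted.example']
```

An AI summary built on the second result list would have only the planted framing to draw on, however carefully it paraphrased and cited it.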

AI-supported web search will improve. But the source of the problem, namely data voids and unreliable, low-quality information, will not disappear. Retrieval Augmented Generation (RAG) works best when you know what documents a database contains, but the web is not such a quality-controlled database. Therefore, AI summaries of web search results will remain unreliable. To make matters worse, AI summaries will make identifying data voids and addressing the spread of misleading, harmful content even harder. What matters is not that millions of AI summaries deliver high customer satisfaction, but that a handful of them advance harmful claims. The systemic risks this entails far outweigh the benefits of an improved search experience.

What should be done?

First, search engine providers and AI developers must start treating climate change and climate denial as existential threats and change their content moderation policies, relevance signals, and algorithms to reflect this. Second, any automation of media and information literacy, if it happens at all, must be subject to public oversight and regulation. Finally, those who profit from providing AI summaries must take responsibility for the harms they cause, including harms to nature. This means that responsibility and accountability agreements should apply to each and every summary produced by AI-supported search assistants.

Addressing climate change will require a global effort; companies that are in the business of providing information related to it must recognize their responsibility in the stewardship of global collective behavior. Equally important, if not more so, regulators, policymakers, and civil society must recognize their responsibility to hold these companies to account and create democratic, trustworthy, and trusted mechanisms to do so.
